AMD Radeon RX 9060 XT 16 GB Shows Up In Early Time Spy Benchmark With Mixed Conclusions

AMD's upcoming Radeon RX 9060 XT has shown up in the news a number of times ahead of its expected retail launch, from ASRock's announcement to a recent Geekbench leak that put the RDNA 4 GPU ahead of the RX 7600 XT by a fair margin. Now, however, we have a gaming benchmark from 3DMark Time Spy showing the RX 9060 XT nearly matching the RX 7700 XT, and those results could still improve as drivers mature and become more stable. The benchmark results are courtesy of u/uesato_hinata, who got their hands on an XFX Swift AMD Radeon RX 9060 XT 16 GB and posted their results on r/AMD on Reddit.

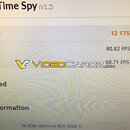

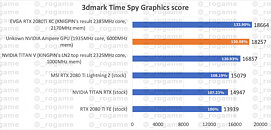

There are a few caveats to these figures, though. The redditor who shared the results was using beta drivers along with a moderate GPU overclock and undervolt, cited as "+200mhz clock offset -40mv undervolt +10% power limit, I can get 3.46Ghz at 199 W". With those tweaks, the RX 9060 XT puts up a respectable 14,210 points in 3DMark Time Spy. For comparison, the average RX 7700 XT scores 15,452 points in the same benchmark, leaving the RX 9060 XT about 8% behind. It should also be noted that the test system was built around a relatively old AMD Ryzen 5 5600 paired with mismatched DDR4-2133 RAM, so there is likely at least some performance left on the table, even though GPU utilization stays consistently high in the 3DMark monitoring chart, which suggests little bottlenecking.

The redditor also benchmarked the GPU in Black Myth: Wukong, where it averaged 64 FPS at stock clocks at 1080p with most settings on high. Applying the overclock raised the average only slightly, to 65 FPS, but lifted the minimum from 17 to 23 FPS. These numbers also won't be representative of every RX 9060 XT, since AMD is launching both 8 GB and 16 GB versions of the card with different GPU clock speeds for each memory variant.