Wednesday, August 26th 2009

AMD Demos 48-core "Magny-Cours" System, Details Architecture

AMD has narrowed the expected release window of its 12-core "Magny-Cours" Opteron processors, previously slated loosely for 2010, to Q1 2010. The company seems to be ready with the processors, and has demonstrated a 4-socket, 48-core machine based on them. Magny-Cours is symbolic as one of AMD's last processor designs before the move to "Bulldozer", its next design, built from the ground up. Its release will provide competition to the Intel multi-core processors available at that point.

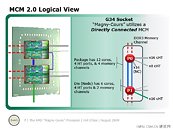

AMD's Pat Conway presented the Magny-Cours design at the IEEE Hot Chips 21 conference. It includes several key design changes that boost parallelism and efficiency in high-density computing environments. Key features include a move to Socket G34 (from Socket-F), 12 cores, a multi-chip module (MCM) package housing two 6-core dies (nodes), a quad-channel DDR3 memory interface, and HyperTransport 3 at 6.4 GT/s with redesigned multi-node topologies. Let's put some of these under the magnifying glass.

Socket and Package

Loading 12 cores onto a single package while maintaining sufficient system and memory bandwidth was always going to be a challenge. With the six-core Istanbul monolithic die already measuring 346 mm² with a transistor count of 904 million, making something monolithic twice the size is inconceivable, at least on the existing 45 nm SOI process. The company finally dropped its contemptuous stance on multi-chip modules, which it ridiculed back in the days of the Pentium D, and designed one of its own. Since each die is a little more than a CPU (it has its own dual-channel memory controller), AMD chooses to call it a "node": a cluster of six processing cores that connects to its neighbour on the same package using one of its four 16-bit HyperTransport links. The remaining links are available to connect to neighbouring sockets and the system in 2P and 4P multi-socket topologies.
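To put the "twice the size" argument in numbers, here is a minimal sketch that simply doubles the Istanbul figures quoted above. The linear scaling and the function name `hypothetical_monolithic` are assumptions made for illustration; a real 12-core monolithic layout would not necessarily scale this way.

```python
# Figures quoted in the article for the six-core Istanbul die.
ISTANBUL_DIE_AREA_MM2 = 346
ISTANBUL_TRANSISTORS_MILLION = 904
CORES_PER_DIE = 6
DIES_PER_PACKAGE = 2  # the Magny-Cours MCM houses two six-core dies (nodes)


def hypothetical_monolithic(dies: int = DIES_PER_PACKAGE) -> dict:
    """Naive estimate: scale the Istanbul figures linearly (an assumption)."""
    return {
        "cores": CORES_PER_DIE * dies,
        "area_mm2": ISTANBUL_DIE_AREA_MM2 * dies,
        "transistors_million": ISTANBUL_TRANSISTORS_MILLION * dies,
    }


estimate = hypothetical_monolithic()
print(estimate)
```

Under that assumption, a single 12-core die would come to roughly 692 mm² and about 1.8 billion transistors, which illustrates why two smaller dies on one package are the more practical route.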

The socket itself gets a revamp from the existing 1,207-pin Socket-F to the 1,974-pin Socket G34. The high pin count accommodates the HyperTransport links, four DDR3 memory channels, and other low-level I/O.

Multi-Socket Topologies

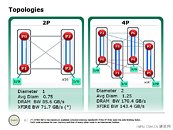

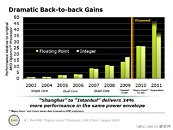

A Magny-Cours Opteron processor can work in 2P and 4P systems, for up to 48 physical processing cores. The multi-socket topologies AMD devised ensure high inter-core and inter-node bandwidth without depending on the system chipset I/O for the task. In the 2P topology, one node from each socket uses one of its 16-bit HyperTransport links to connect to the system, another to connect to the neighbouring node on the package, and the remaining links to connect to the nodes of the neighbouring socket. AMD has indicated that it will use 6.4 GT/s links (probably generation 3.1). In 4P systems, it uses 8-bit links instead to connect to the three other sockets, but ensures that each node is connected to every other node either directly or indirectly over the MCM. With a total of 16 DDR3 DCTs in a 4P system, a staggering 170.4 GB/s of cumulative memory bandwidth is achieved.

Finally, AMD projects up to 100% scaling with Magny-Cours compared to Istanbul. Its "future silicon", slated for 2011, is projected to almost double that.
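The topology figures above can be checked with some quick arithmetic. The sketch below is illustrative only: the helper names are hypothetical, the per-link number is the raw one-direction rate (transfer rate times link width) without protocol overhead, and the per-DCT share is simply the article's 170.4 GB/s divided evenly across 16 DCTs.

```python
# Values taken from the article.
NODES_PER_SOCKET = 2               # two dies (nodes) per MCM package
CORES_PER_NODE = 6
DCTS_PER_NODE = 2                  # dual-channel memory controller per node
HT_TRANSFER_RATE_GT_S = 6.4        # HyperTransport transfer rate
QUOTED_4P_MEMORY_BW_GB_S = 170.4   # cumulative figure quoted for a 4P system


def total_cores(sockets: int) -> int:
    return sockets * NODES_PER_SOCKET * CORES_PER_NODE


def total_dcts(sockets: int) -> int:
    return sockets * NODES_PER_SOCKET * DCTS_PER_NODE


def ht_link_bandwidth_gb_s(width_bits: int) -> float:
    """Raw one-direction bandwidth of a link: GT/s times bytes per transfer."""
    return HT_TRANSFER_RATE_GT_S * width_bits / 8


cores_4p = total_cores(4)
dcts_4p = total_dcts(4)
per_dct_bw = QUOTED_4P_MEMORY_BW_GB_S / dcts_4p

print(f"2P cores: {total_cores(2)}, 4P cores: {cores_4p}")
print(f"4P DCTs: {dcts_4p}, implied share per DCT: {per_dct_bw:.2f} GB/s")
print(f"16-bit link: {ht_link_bandwidth_gb_s(16):.1f} GB/s per direction")
print(f"8-bit link: {ht_link_bandwidth_gb_s(8):.1f} GB/s per direction")
```

The implied share of about 10.65 GB/s per DCT is close to the roughly 10.67 GB/s peak of a 64-bit DDR3-1333 channel, though the article does not name a memory speed. The halved width of the 8-bit links in 4P systems also halves the raw per-link rate, which is the trade-off for reaching three other sockets.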

Source: INPAI

104 Comments on AMD Demos 48-core "Magny-Cours" System, Details Architecture

www.tomshardware.com/charts/mobile-cpu-charts/Mainconcept-H.264-Encoder,473.html

It would take approximately 7 hours to do a feature length (90 minutes) film.

here is where the best mobile cpu is www.cpubenchmark.net/cpu_lookup.php?cpu=Intel+Core2+Quad+Q9000+%40+2.00GHz

the best on toms that have been benchmarked are only 1/3 the power

Bah. :(

You don't need the KVM switch, my bad. A Bluetooth setup works great through the house, and you can run more than one channel at once for multiple keyboards, etc.

And these: www.newegg.com/Product/Product.aspx?Item=N82E16815158122 (bad example). Here is one that can do HD: www.newegg.com/Product/Product.aspx?Item=N82E16817707107. Better yet, and cheaper: www.newegg.com/Product/Product.aspx?Item=N82E16882754006

We were talking about one large, powerful system to do the encoding - say, a 48 core magny cours system (or a weaker system with GPU encoding), sending the data over the network and then weaker systems doing the DEcoding (with GPU acceleration)

The weak systems don't have to do squat but play back a 'video' with hardware acceleration.

In any case, my point is that CPUs need to get the power of GPUs on a single core instead of multiple cores just to get a fraction of the power of a GPU. Maybe this is a fault with x86. I don't know. Regardless, we need processors with higher IPS, not more cores. Even applications coded back in the 1980s can benefit from higher IPS--they can't benefit from more cores.

We aren't talking about playing movies here. We're talking about one main system doing everything (games, movies, the whole lot), then encoding it and streaming it to multiple cheap-ass systems around the house.

DirectX 11 doesn't help. Instead of GPUs continuing their trend of higher IPS, it encourages them to do the same thing as CPUs: multiple cores...

I guess what I am getting at is that AMD and Intel are being lazy and all the programmers are having to work twice as hard to get the same goal accomplished as they would have had to if there was higher IPS and fewer cores. I mean, there's nothing revolutionary about multiple cores but there is in increasing the IPS (e.g. the huge jump between Pentium D and Core 2).

Instead of putting 12 cores in a single processor, they should be focusing on putting the power of 12 cores into a single core.

Unless you think you'll be happy with 4GHz 10 years from now.