Monday, March 19th 2012

Haswell to Use 4th-Level On-Package Cache to Boost Graphics Performance

Intel is making serious efforts to boost CPU-integrated graphics performance using homegrown architectures, without having to borrow or license technologies from the other two major players in the PC graphics business, NVIDIA and AMD, which hold a technological edge over Intel and hence make high-performance discrete GPUs. Haswell, the codename of the architecture that succeeds Ivy Bridge, will bring a significant advancement in GPU performance.

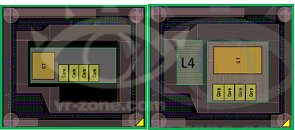

We know from history that Intel carves out chip variants from a common silicon design by toggling the amount of L3 cache available, the number of cores, and even the number of iGPU shaders, apart from other natural handles such as clock speeds, voltages, and feature-set. With Haswell, the highest iGPU configuration will make use of a 4th-level cache (L4 cache) that sits on the package but is not part of the Haswell silicon. Instead, the Haswell die will be placed on a multi-chip module (MCM) along with a separate die that holds this L4 cache. The L4 cache will serve as fast memory for the iGPU, reducing or completely eliminating the iGPU's dependency on system memory as a frame-buffer (UMA).

Such implementations aren't entirely new. IBM has used what's known as eDRAM (embedded DRAM), a separate piece of silicon with fast memory and some low-level graphics logic, on some of its game console processor ASICs. AMD, too, used a technology that's similar in principle, though not in implementation. Certain higher-end 7-series and 8-series chipsets with integrated graphics (such as the AMD 780G, 790GX, and 890GX) feature what's known as DDR3-Sideport memory, which gives the Radeon IGP access to about 128 MB of fast DDR3 memory that it can use standalone to offload system memory (UMA), or interleave with it (UMA+Sideport).
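To put the "offload system memory" argument into rough numbers, here is a back-of-the-envelope sketch. It is not Intel's design or any published Haswell figure: the L4 hit rates, the 20 GB/s iGPU demand, and the helper names (`peak_ddr_bandwidth_gbs`, `uma_traffic_gbs`, `uma_share`) are hypothetical. Only the dual-channel DDR3-1600 peak (1600 MT/s × 8 bytes × 2 channels = 25.6 GB/s) is standard arithmetic. The point it illustrates is simple: every iGPU request the L4 absorbs is traffic that no longer competes for shared system memory.

```python
# Back-of-the-envelope model of how an on-package L4 cache could offload
# iGPU traffic from shared system memory (UMA).
# All demand and hit-rate inputs are hypothetical illustration values.


def peak_ddr_bandwidth_gbs(transfer_rate_mts: int, channels: int, bus_width_bytes: int = 8) -> float:
    """Theoretical peak bandwidth of a DDR memory subsystem in GB/s.

    Each 64-bit channel moves 8 bytes per transfer.
    """
    return transfer_rate_mts * bus_width_bytes * channels / 1000


def uma_traffic_gbs(igpu_demand_gbs: float, l4_hit_rate: float) -> float:
    """iGPU traffic that misses the L4 and still has to reach system RAM."""
    if not 0.0 <= l4_hit_rate <= 1.0:
        raise ValueError("l4_hit_rate must be in [0, 1]")
    return igpu_demand_gbs * (1.0 - l4_hit_rate)


def uma_share(igpu_demand_gbs: float, l4_hit_rate: float, peak_gbs: float) -> float:
    """Fraction of peak system-memory bandwidth the iGPU still consumes."""
    return uma_traffic_gbs(igpu_demand_gbs, l4_hit_rate) / peak_gbs


if __name__ == "__main__":
    peak = peak_ddr_bandwidth_gbs(1600, channels=2)  # dual-channel DDR3-1600
    demand = 20.0  # hypothetical iGPU bandwidth demand in GB/s
    for hit_rate in (0.0, 0.5, 0.9):
        share = uma_share(demand, hit_rate, peak)
        print(f"L4 hit rate {hit_rate:.0%}: iGPU uses {share:.0%} of {peak:.1f} GB/s")
```

This simple hit-rate model ignores write-back traffic, capacity misses, and latency, so treat it only as a way to see why a fast on-package pool eases the pressure that UMA puts on system memory.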

Could this be what Intel is referring to as "Hotham 1.0"?

Source:

VR-Zone

21 Comments on Haswell to Use 4th-Level On-Package Cache to Boost Graphics Performance

jk :p

Is it actually going to be large enough to serve as dedicated video memory (256+ MB)? IMHO such a solution would make the most sense, provided that there is enough room inside the MCM for the memory.

I guess 3D stacking of modules could enable this; it saves die area. Ivy Bridge has already incorporated 3D stacking at the transistor level, so 3D stacking at the die level (chip over chip) might just be starting with Haswell. So it is not impossible for Intel to have a considerably large L4 cache (I am guessing at least 128 MB) with 3D die stacking. Well, just guessing, and I could be wrong as more information leaks or is released.

This is much more than I expected. I completely forgot about the 22nm process for Haswell; this thing might actually end up with some 128 MB of L4 cache :twitch:

So, expecting an L4 cache of >100 MB with Haswell might not be too high.

What this article also states is that the top end Haswell (within the mid-range) is still only going to be a quad core CPU!

By 2013/2014 that is going to be almost 8 years of mid-range CPUs having a maximum of 4 cores. Come on, people, move things along!

If someone needs, or thinks he/she needs, more processing power, then there are 6- and 8-core CPUs on the market... (and I'm not talking AMD here :shadedshu)

Looks like another reason why the BCLK on mainstream chips will have practically no wiggle room.

With dedicated VRAM the GPU can scale up much more easily with the addition of more functional units, as it is no longer constrained by the crappy memory bandwidth.

The L4 cache approach, on the other hand, permits rather uniform performance with a vastly larger memory pool (borrowed system RAM), but requires far more complicated control logic, even for eDRAM. And if they indeed went with SRAM, that would mean even more transistors.

It will be interesting to see which way Intel went with Haswell, the cache way or VRAM way :)

I'll admit it's annoying that laptop manufacturers like to put DDR3-1066/1333 in laptops where the processor supports much faster memory (so that'll have to be dealt with), but I could imagine an Intel Haswell Ultrabook with an HD 5000 GPU and DDR3-2166 speeds (and 2166 modules to go with it) being quite useful.