Tuesday, February 5th 2013

6 GB Standard Memory Amount for GeForce Titan

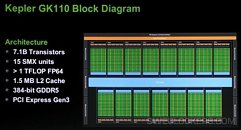

NVIDIA's next high-end graphics card, the GeForce "Titan" 780, is shaping up to be a dreadnought of sorts. It reportedly ships with 6 GB of GDDR5 memory as its standard amount. It is known from GK110 block diagrams released alongside the Tesla K20X GPU compute accelerator that the chip features a 384-bit wide memory interface. With 4 Gbit memory chips still eluding the mainstream, it's quite likely that NVIDIA will use twenty-four 2 Gbit chips to total 6,144 MB. The chips could therefore be spread across both sides of the PCB, and the back-plate could make a comeback on NVIDIA's single-GPU lineup.
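The memory arithmetic above can be checked with a rough sketch. It assumes each GDDR5 chip exposes a 32-bit interface, which the article does not state, and the function and constant names are made up for illustration. Under that assumption a 384-bit bus gives 12 channels, so twenty-four chips would mean two chips sharing each channel, which fits the idea of populating both sides of the PCB.

```python
# Assumption (not from the article): each GDDR5 chip has a 32-bit interface.
GDDR5_CHIP_BUS_BITS = 32


def memory_layout(bus_width_bits, chip_density_gbit, chip_count):
    """Return (channels, chips per channel, total capacity in MB)."""
    channels = bus_width_bits // GDDR5_CHIP_BUS_BITS
    chips_per_channel = chip_count // channels
    # 1 Gbit = 1024 Mbit, and 8 Mbit = 1 MB
    total_mb = chip_count * chip_density_gbit * 1024 // 8
    return channels, chips_per_channel, total_mb


# Rumored Titan configuration: 384-bit bus, twenty-four 2 Gbit chips
channels, per_channel, total_mb = memory_layout(384, 2, 24)
print(f"{channels} channels, {per_channel} chips each, {total_mb} MB total")
```

With twelve 2 Gbit chips instead (one per channel), the same calculation gives 3,072 MB, which matches the 3 GB standard on the 384-bit "Tahiti" cards.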

On its Radeon HD 7900 series single-GPU graphics cards based on the "Tahiti" silicon (which features the same memory bus width), AMD used 3 GB as the standard amount, while 2 GB is standard for the GeForce GTX 680. Non-reference 4 GB and 6 GB variants of the GTX 680 and HD 7970, respectively, are quite common. SweClockers also learned that NVIDIA is preparing to price the new card in the neighborhood of $899.

Source:

SweClockers

106 Comments on 6 GB Standard Memory Amount for GeForce Titan

GEFORCE TITAN 780... Easily Satisfying the Demands of All Your Console Ports!

I'm rather interested in the remaining pieces of the 700 series. Possibly GTX 760 = current GTX 670?

This place never gets old.

Tough times for nVidia: Tesla has a problem with Xeon Phi, Tegra 4 apparently sucks, next-gen consoles use AMD hardware (although a mistake IMHO, absolute performance and perf/Watt wise), and GeForce might be next in trouble (I certainly hope so, because of their pricing policies, PhysX restrictions, and the 1/24 double-precision performance of the GTX 600 series; they practically invented and then killed GPGPU for consumers)...

Now that difference is much smaller while the difference in price still remains a huge gap. $250 console is ready to game with any TV and works as soon as you turn it on without much hassle. PC gaming needs over $1000 and a lot more problems.

From my experience, Xbox Live seems to have far fewer server problems than any of the PC titles. There tend to be fewer hackers and cheaters on Xbox Live. However, the big downside is that there are a lot of screechy 10-year-old boys who will curse at anything unfavorable to them.

The answer is clear for the vast majority of people who want to game. It's cheaper, it's simpler, it works much, much more often, it's cheaper, it's much more reliable, it eats less juice, it's cheaper, and............it requires no upgrades, so therefore it's cheaper.

-Nvidia

Well, you see, it's not as easy to maintain a 64-player server as it is a 24-player server.

;) Under that context, all mainstream/performance laptops would be considered the top of economical efficiency.

PCs don't "require" updates; advanced game engines do. If you are going to stall that, well....

And when you get to certain genres, the console has always been superior (fighting and sports come to mind).

Now, it's difficult to believe that Nvidia will retail a card based on two state-of-the-art/flagship/etc GPUs, and retail it for less than another card with 2 lesser GPUs.

There is no doubt the PC has its own advantages and consoles have theirs.

One thing is for sure, you can't put all the passion you put into your PC on a Console.

On topic: if this is a single GPU and will have unlocked voltage, then count me in for one. I've been longing for a worthy 6990 upgrade.

To those bitching and moaning about the graphical detail of "console ports", I suggest that software development generally follows hardware development. I think I'd be more concerned with the content (originality) of a game before its textures' ability to saturate the framebuffer, or looping post-process compute function for an end result that yields minimal image quality improvements while heavily decreasing framerate.

Also note that yes, PC sales have been up the past couple of years, but that is mainly because the games being developed are not pushing the hardware. Developers are not bothering!

On a related note, here's a graph I made (numbers averaged between JPR and Mercury Research where both were available) for an article (still under consideration at another site) tracing the history of graphics from the 1950s military simulators to the present day. The trend is pretty self-explanatory.

As someone wrote, I'd rather save the $899 and buy both the PS4 and the Xbox 720 just so I can play the exclusive titles that I love. I wish the exclusive titles were multi-platform so I could just use the PC, but it's not like that.

If history is any indicator, the GPU card is going the way of the sound card, Ethernet card and RAID card. Unless you need the absolute best, you simply don't need one.

Just a wild guess on my part, but there could be an outside possibility that someone spending $900 on graphics could possibly be using a 64-bit OS. And I'm not sure that "thousands" of prospective Titan owners would still be tied to a 32-bit operating system...if indeed, thousands of Titan cards are actually produced.