Wednesday, October 16th 2013

Radeon R9 290X Pitted Against GeForce GTX TITAN in Early Review

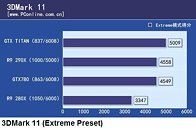

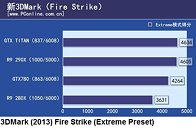

Here are results from the first formal review of the Radeon R9 290X, AMD's next-generation flagship single-GPU graphics card. Posted by Chinese publication PCOnline.com.cn, it sees the R9 290X pitted against the GeForce GTX TITAN and GeForce GTX 780. An out-of-place fourth member of the comparison is the $299 Radeon R9 280X. The tests present some extremely interesting results. Overall, the Radeon R9 290X is faster than the GeForce GTX 780, and trades blows with, or in some cases surpasses, the GeForce GTX TITAN. The R9 290X performs extremely well in 3DMark: FireStrike, and beats both NVIDIA cards at Metro: Last Light. In other tests, it's halfway between the GTX 780 and GTX TITAN, leaning closer to the latter in some tests. Power consumption, on the other hand, could either dampen the deal, or be a downright dealbreaker. We'll leave you with the results. More results follow.

Source:

PCOnline.com.cn

121 Comments on Radeon R9 290X Pitted Against GeForce GTX TITAN in Early Review

Don't put too much into the Furmark consumption test; unless there's a score showing the amount of "work" that produced it, it's hardly significant or earth-shattering. The one thing it might indicate is that the cooler has some headroom.

But these numbers do hold to what I've been saying: it can soundly beat a 780, while it will spar with Titan depending on the title. Metro was one Nvidia had owned, but not so much anymore.

If AMD hold to what they've indicated and done with re-badge prices they'll have a win!

We wait...

Only enthusiasts and score-bitching worshipers will look otherwise. Most Steam users are on pre-built PCs: average Joes and regular Janes who bought marketing-induced products. They only know three things:

- anything that costs more is better.

- any product that hoards the market is always faster.

- and the worst... buying a product that had a commercial in a game's opening scene will make your game stable.

Let me guess, Intel's Havok and nVidia's old motto, The Way It's Meant To Be Played, are definitely the winners here. Heck, some of my colleagues believe AMD processors and graphics don't do gaming. They even boast that their i3+650Ti will decimate my FX8350+CF 7970 :wtf:

Still starving for a review of these things.

Even when it's out, and Mantle's out, you're still going to be able to find a fair few who would knock it, even if it were 50% faster.

Stop trying to figure out something that's mathematically provable and proven; it's about "application" anyway, and more importantly it's wizzard's application of it (R9 290X) that really matters :D

Yup, that's some foot shooting right there.

Secondly, Nvidia have had two salvage parts collecting revenue and dominating the review benchmarks for the same length of time. Bonus point question: When was the last time Nvidia released a Quadro or Tesla card that didn't have a GeForce analogue of equal or higher shader count?*

Quadro K6000(2880 core) released four days ago

Tesla K40(2880 core) imminent

* Answer: Never

I mean if you'd remove that in GK110 it would be the same 400-450w for sure.

Titan: $999 (Newegg), whereas R9 290X: $600-650 (expected)

I'm wanting a $550 MSRP.

so true...

Even my father would prefer to choose an Intel Celeron (I'm not sure if it's dual-core) and its GPU rather than an AMD A8-4 series (4 cores) and its iGPU.

@casecutter: count me in, I would be happy to get them in CFX and put them under water.

I will stick with my "lackluster" 60Hz 30" Dell and wait for the 4K monitors to come down in price. Does this mean I am in a cave as well? I absolutely love my monitor and see no reason at this point to go to 120Hz.

Not even a year and I already miss everyone from the last gathering @ Bandung :toast:

Why would someone spend $600 or $1000 on a stupid card that cannot even attract a woman with nice boobs? Yup... that's an enthusiast.

Might have something to add: no matter how fast your monitor is, Windows only sees it at 60Hz. It's in the panel, not in the OS or the graphics card.

FYI, I have a 240Hz panel and yet still have severe judder :shadedshu

Although I'm in my early 30s, I'm still at a shitty job with minimum wage and barely make a living. I still believe God will have pity on me so I can date someone and start my own family someday :)