Wednesday, October 16th 2013

Radeon R9 290X Pitted Against GeForce GTX TITAN in Early Review

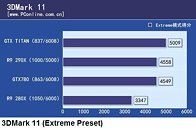

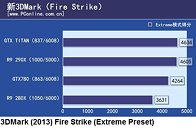

Here are results from the first formal review of the Radeon R9 290X, AMD's next-generation flagship single-GPU graphics card. Posted by Chinese publication PCOnline.com.cn, the review pits the R9 290X against the GeForce GTX TITAN and GeForce GTX 780. An out-of-place fourth member of the comparison is the $299 Radeon R9 280X. The tests present some extremely interesting results. Overall, the Radeon R9 290X is faster than the GeForce GTX 780, and trades blows with, or in some cases surpasses, the GeForce GTX TITAN. The R9 290X performs extremely well in 3DMark: FireStrike, and beats both NVIDIA cards at Metro: Last Light. In other tests, it's halfway between the GTX 780 and GTX TITAN, leaning closer to the latter in some tests. Power consumption, on the other hand, could either dampen the deal or be a downright dealbreaker. We'll leave you with the results. More results follow.

Source:

PCOnline.com.cn

121 Comments on Radeon R9 290X Pitted Against GeForce GTX TITAN in Early Review

First, I didn't say multi-panel setups. Sure, there will be instances with specific panel types, and for competitive play you'd need something... über. Still, even with Far Cry 3 or Crysis 3, if you juggle the settings a little, you can make an R9 280X work. And those are perhaps the two exceptions, because even Metro: Last Light runs at 60 FPS at 1920x1080 on an R9 280X. If those are what you intend to play, there are always exceptions or concessions for some, but the bulk of enthusiasts aren't looking at special circumstances, just a normal 1920x1080 display. Perhaps a 27" panel they got a year or two back, and they're hoping to hold out another generation for 4K to become what some might see as "affordable". Until then, I see that many have nestled in with what they have.

You could spend $650-1000 on the graphics card, and then what… a multi-panel setup on the cheap, perhaps, because thinking "it" will give you a path to 4K later isn't a good avenue. The smart move for the average person (for that money) is to step up from an older 24” 1080p TN panel and last-generation cards (6950/560 Ti), get a decent 2560x1440 monitor, and spend, say, $310 on an R9 280X for a fairly enjoyable enthusiast experience. It's not all that different from what those who dropped $650-1000 on just the graphics card get.

I believe we were talking about GK110, that is, Titan and the 780... but of course, your argument stands up fairly well if you're ignoring the largest-selling parts :banghead:

You also seem to be making a fundamental error about what the Titan in particular was supposed to be. For the consumer, the card was supposed to represent the (fleeting) pinnacle of single-GPU performance, with the slight added incentive of Nvidia not diluting FP64 performance. For Nvidia, it represented PR. Every GPU review since the Titan's launch (whether Nvidia or AMD) has featured the card at or near the top of every performance metric. That's eight months of constant PR and advertising that hasn't cost Nvidia an additional penny.

If sales of Titan (or the 780, for that matter) were paramount, then you bet Nvidia wouldn't have priced it as they have, in exactly the same way that AMD priced a supply-constrained HD 7990 at $999. That hypothesis is all the more credible when the main revenue stream for GK110, the Tesla K20, is known to be supply-constrained itself.

You're living in cloud cuckoo land if you believe that taking the muzzle off the AIBs for vendor specials and lowering prices would have any significant impact on the overall balance sheet. The market for $500+ graphics cards is negligible in the greater scheme of things. Now subtract the percentage of people who, if presented with a $500 card, wouldn't also pay for a $650 (or more) board. Now subtract the percentage of people who would spend the same amount of cash on two lower-specced cards offering better overall performance.

Basically, you're putting the gaming aspect under the microscope and not really looking at the big picture... the other alternative is that some random internet poster knows more about strategic marketing than the company with the sixteenth-highest semiconductor revenue in the world :slap:

Source: LegitReviews

Tom's Hardware: First Official AMD Radeon R9 290X Benchmarks

Hmmm... "R9 290X Quiet Mode"... Promising! :D

Let me guess....you'll be petitioning W1zzard to measure sound and power consumption using "Quiet Mode", and to measure gaming benchmarks using "Noisy As Fuck Mode".

A movie theater sells small popcorn for $3 and a large for $7. It's been shown that people more often buy the $3 size, because they have trouble rationalizing the higher price.

Then the theater introduces a medium that's somewhat close to the size of the large, though not quite as big, and folks rationalize that the large is just 50 cents more and they get more. The theater actually starts selling more larges, while mediums and smalls aren't nearly as popular.

More choice provokes more thought and changes how the brain rationalizes things. In this case, it's the opposite order. Nvidia released Titan, and sure, everyone salivates over what they'd like, but for a large part of the market it's hard to justify. Then add a $650 part and… set the hook.

Seriously, be thankful. There are a lot of people out there in our country who aren't as lucky as you.

Anyway, from Montreal: imgur.com/a/MEXNo

I believe he took 90% of his wages for months, or maybe years, to buy shiny hardware... :) Because the same shit also happened to me.. :laugh:

Wow, that's great..

-----

According to VideoCardz: videocardz.com/46929/official-amd-radeon-r9-290x-2160p-performance-17-games

I read this thread on the EVGA forum: forums.evga.com/tm.aspx?m=2032492

It seems that the 331.40 beta driver provides PCI-E 3.0 support for Titan and the 780 on the X79 platform (though with an Ivy Bridge-E processor; I don't know about SB-E).

As for Xzibit's point, yes, that's valid. If an Ivy Bridge board is used, it negates any issues :-)

Like I said, I'm not trolling, but AMD's setup is 'potentially' not using equal specs on both cards. If other reviews use Ivy Bridge, then all's cool (if the lane bandwidth is even a problem in the first place!)

Give us some 1920x1200 resolution benchmarks.

The 290X implements a "new" CrossFire method over the PCIe bus link. Although it looks good on paper, my major concern is the 38 PCIe 2.0 lanes on my 990FX boards. Two cards could consume 32 lanes, leaving 6. It's still unclear to me whether AMD will do full duplex or need additional lanes for CrossFire. On a side note, AMD could perhaps utilize the IOMMU, which is available across all 900-series boards, by creating virtual sideband addressing.

We're in the same boat here... the key is the two S's: saving and starving :laugh:

How dare you spread FUD :p

Btw, how are you, old friend? Has your shoulder recovered?

Not 100%, but okay. It'd better be, coz I spent pretty much three Titans on it :shadedshu and now I'm broke :roll:

Anyway, here's a compiled comparison of another NDA-breaking run...