Saturday, November 2nd 2013

GeForce GTX 780 Ti Pictured in the Flesh

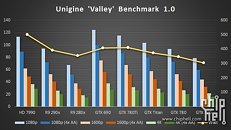

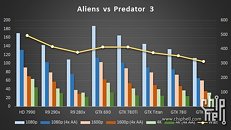

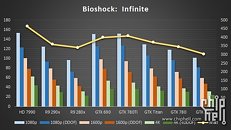

Shortly after its specification sheet leaked, pictures of a reference GeForce GTX 780 Ti (not renders or press shots) surfaced on ChipHell Forums. The pictures reveal a board design that's practically identical to the GTX TITAN and GTX 780, with a "GTX 780 Ti" marking on the cooler. The folks over at ChipHell Forums also posted five sets of benchmark results, covering various 3DMark tests, Unigine Valley, Aliens vs. Predator 3, Battlefield 3, and BioShock Infinite, on a test bed running a Core i7-4960X at 4.50 GHz and 16 GB of quad-channel DDR3-2933 memory. Given its specifications, it comes as no surprise that the GTX 780 Ti beats both the GTX TITAN and the R9 290X, and goes on to offer performance that's on par with dual-GPU cards such as the GTX 690 and HD 7990. For a single-GPU card, that's a great feat. The benchmark results from ChipHell's run follow.

Source: ChipHell Forums

92 Comments on GeForce GTX 780 Ti Pictured in the Flesh

Wow, all this science, math, and number crunching... balderdash! You've got the fastest GPU? Then you claim the right to whatever price you want! It's the law!

I love the way Nvidia is milking the cash cow. :laugh:

And lol at the people saying this has no overclocking headroom: 2688-CUDA-core Titans can reach 1300-1400 MHz core at 1.3 V.

I wouldn't be surprised to see 1500 MHz core at 1.5 V on Classified cards with this chip.

Subjectivity is bliss; ignorance is the new logic. :laugh:

Titan length: 267 mm (10.5")

You also do not need multiple cards, but you do need bandwidth. That's the biggest killer of 4K and multi-monitor setups. You can see this in the benchmarks of the 290X as the resolution scales to 4K.

Now back to the card... Wow, this thing is going to take the record for hottest, highest power usage, and probably loudest card ever made. The electric bill alone will double the price of this card within a year. :nutkick:

- cheaper

- faster

- hotter

- more power consumption

than the GTX 780... Most Nvidia fanboys' reaction to the R9 290X in YouTube and review comments: "It's too hot, and that power bill is outrageous!! Who cares if the R9 290X is cheaper? No way I'm gonna buy the R9 290X! GTX 780 FTW!" Then the 780 Ti came out, and compared to the 780 Ti, the R9 290X is:

- cheaper

- slower

- hotter

- more power consumption

than the GTX 780 Ti... I bet most Nvidia fanboys' reaction on YouTube and in review comments is gonna be: "It's faster!! Who cares about heat and the power bill? Even though the R9 290X is cheaper, no way I'm gonna buy the R9 290X! GTX 780 Ti FTW!" :roll:

www.neogaf.com/forum/showthread.php?t=704836

Are you sure they are only fanboys? Shills? Social media marketers? Focus group members? Remember, Nvidia got caught with its hand in the cookie jar. :slap:

Oh yeah, 599 for the 3 GB version and 649 for the 6 GB version, or GTFO.

Hi

See, this card with 3 GB of VRAM beats all single and dual GPUs.

The power is there, and it's cheaper than all the other GPUs.

> performance that's on par with dual-GPU cards such as the GTX 690, and HD 7990. For a single-GPU card, that's a great feat

:banghead: :D

The beautiful thing we are about to see is the power of hypocrisy. If this thing is hot and noisy, then all those blasting the 290X will need to keep their mouths shut or criticise this card too. The power usage isn't an issue. It's on the same node as a GK104 chip, and therefore has the same (in)efficiencies. So if this single card matches a GTX 690, we should expect it to draw similar power. If its power draw rises further above the GTX 690's than its performance does, then it is less efficient.

Power usage is only a valid argument in terms of the performance-per-watt ratio. Apologies for using the 290X graph, but it is relevant and has all the big players.

I also think Nvidia will finally allow better overclocking on this card, in which case power consumption and heat will shoot through the roof. But that is to be expected.

Christ, most people still don't use a 2560x1440 monitor either. Why don't graphics card manufacturers concentrate on something more important... like, I don't know, building a card that doesn't use 350 watts by itself and doesn't require a nuclear facility to cool it? It won't be long before all cards come with water blocks or need a 700-watt PSU just to power the card.

All of this seems like laziness to me! It's been a long time since any real progress has been made on the video card front! This rebadged card just proves it further.