Monday, January 27th 2014

GeForce GTX 750 Ti Benchmarked Some More

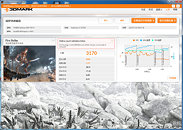

In the run-up to its rumored February 18th launch, the GeForce GTX 750 Ti, the first retail GPU based on NVIDIA's next-generation "Maxwell" GPU architecture, is finding itself in the hands of more leaky PC enthusiasts, this time members of the Chinese PC enthusiast community site PCOnline. The site used an early driver to test the GTX 750 Ti, which it put through 3DMark 11 (performance preset) and 3DMark Fire Strike. The card scored P4188 points in the former and 3170 points in the latter. The test-bed details are not mentioned, but one can make out a stock Core i7-4770K from one of the screenshots. Also accompanying the two is an alleged GPU-Z 0.7.5 screenshot of the GTX 750 Ti, which reads out its CUDA core count as 960. Version 0.7.5 doesn't support the GTX 750 Ti, but it has fall-backs that help it detect unknown GPUs, particularly from NVIDIA. Its successor, GPU-Z 0.7.6, which we're releasing later today, comes with support for the chip.

Source: PCOnline.com.cn Forums

22 Comments on GeForce GTX 750 Ti Benchmarked Some More

The 600 series was actually more of a mess with midrangers becoming the high ends and low/mid chips filling in the midrange block. We didn't get the 110s till late. The 700 series has finally started getting things back on track, but now the results are skewed thanks to NV's bumblings in the 600s. Of course, AMD wasn't really mounting any competition, so they didn't help much.

The 700 series in general isn't that much higher in performance than the 600 series, so the new 700 series product seems appropriately named for the performance offered.

This is not a high end product. This is not intended to be pushing the boundaries of performance. This is a test bed for the new architecture on a well-known fab process. This is to help them work out any deficiencies in the new design ahead of the new fab processes finally being ready and readily available.

They've done this before. I suppose that's why I'm not surprised. The mid-range part that gets the new design (or the new fab process) has unrealistic expectations heaped upon it and then when it comes out at more or less the performance level assigned it by the very branding it was given (in this case the 750 series), people are disappointed by the new architecture.

The real disappointment is your unrealistic expectations for a lower than mid-range product.

I'll be curious to see what improvements are present in the product's architecture (i.e., mostly the reviews) and I'm still looking forward to the "bigger" versions of Maxwell on the smaller die process, but this card was never going to be worlds better than the existing products given what current fabs are capable of.

Customizing for the upcoming 4K resolution monitors seems a big problem. And next-gen OpenGL too. Or is it, as usual, resisting DirectX? Difficult to say that they have not prepared a driver for the new architecture.

definitely a disappointment ! 1-8 nop !

Now at Wccftech they were saying that we will also see a non-Ti 750 with a rumored 75W power consumption. If the 750 non-Ti follows the same logic as the 650, with the 650 having half the cores of the 650 Ti, then there isn't really any difference in power consumption compared to Kepler. From 64W and 384 cores on the 650 non-Ti you go to 75W and 480 cores on the 750 non-Ti. No big deal I think.
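As a rough check on the arithmetic in this comment, the sketch below computes cores per watt from the figures quoted above. The 480-core and 75W numbers for the non-Ti 750 are the rumor as stated here, not confirmed specifications, and `cores_per_watt` is a hypothetical helper name chosen for illustration.

```python
def cores_per_watt(cores: int, watts: float) -> float:
    """Shader cores available per watt of rated board power."""
    return cores / watts

# Figures as quoted in the comment (the 750 non-Ti values are rumored).
gtx_650 = cores_per_watt(384, 64)
gtx_750 = cores_per_watt(480, 75)

# Relative change in cores per watt going from the 650 to the rumored 750.
gain = gtx_750 / gtx_650 - 1

print(f"GTX 650 non-Ti: {gtx_650:.2f} cores/W")
print(f"GTX 750 non-Ti: {gtx_750:.2f} cores/W")
print(f"Change: {gain * 100:.1f}%")
```

On these numbers the change works out to under 7%, which is consistent with the "no big deal" reading, though core count per watt says nothing about per-core performance or clocks.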

Looking at these facts/rumors, and also considering that both cards are part of 700 series, I still have huge doubts that these cards are Maxwell. I think they are Kepler rebrands.

If it is 960 unified shaders (meaning an smx is comprised of 240sp instead of 192+32 special function units) it will require exactly 25% more bandwidth, even though the actual usefulness versus 896 (768+128) is only a couple to a few percent in games beyond increased compute performance, which granted is important for additional effects/physics/etc. Unified is the best way forward considering gpus are for more than rasterization, but it is not always the most efficient purely for graphics performance. I assume sfus are quite a bit smaller than full shaders, use less power, and were tied to the internal cache (hence not needing extra bw), but granted 240 unified is a good number.
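The ratios behind this paragraph can be sketched as follows. The four-SMX layout is inferred from the 768+128 split quoted above (768 / 192 = 4), and the variable names are illustrative. This only compares unit counts; it is not a bandwidth or performance model.

```python
SMX_COUNT = 4  # inferred from 768 shaders / 192 per SMX

kepler_shaders = SMX_COUNT * 192              # 768 full shaders
kepler_sfus = SMX_COUNT * 32                  # 128 special function units
kepler_total = kepler_shaders + kepler_sfus   # 896 units in total
unified_total = SMX_COUNT * 240               # 960 unified shaders

# Increase relative to Kepler's full shaders only (the "25%" figure).
vs_shaders = unified_total / kepler_shaders - 1
# Increase relative to Kepler's shaders plus SFUs.
vs_total = unified_total / kepler_total - 1

print(f"960 vs 768: +{vs_shaders * 100:.1f}%")
print(f"960 vs 896: +{vs_total * 100:.1f}%")
```

The gap against the 896-unit total is the upper bound on extra units; the comment's estimate of real in-game benefit is lower than that.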

The thing about kepler is while nvidia nailed the ratio needed for special function (which amd does in shaders, perhaps nvidia will now too) the best ratio of total units is around 234-235sp for 4 rops. Nvidia had 224 (192+32) while amd has 256 in most scenarios. This is why often kepler was more efficient, but gcn stronger per clock (when both similarly satiated but not accounting for excessive bandwidth per design). 240 is obviously very close to the ideal mark per 4 rops per one 'core', and realistically the closest an arch can get based on large, compact engines with units based on granularity of multiples of 4/8/16 etc.
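To make the "close to the ideal mark" claim concrete, here is a small sketch comparing each design's units per 4 ROPs against the 234-235 range given above. Using the midpoint 234.5 as the target is an assumption made for illustration, as are the dictionary labels.

```python
# Midpoint of the commenter's 234-235 range (an assumption for illustration).
IDEAL = (234 + 235) / 2

# Units per 4 ROPs, as quoted in the comment.
designs = {
    "Kepler (192+32)": 224,
    "GCN": 256,
    "240 unified": 240,
}

# Absolute distance of each design from the assumed ideal.
distance = {name: abs(units - IDEAL) for name, units in designs.items()}
closest = min(distance, key=distance.get)

for name, gap in distance.items():
    print(f"{name}: {gap:.1f} units from ideal")
print(f"Closest: {closest}")
```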

That said, I truly expected something clever like 1037-1100/7000, or the outlier chance of something phenomenal, like say 192-bit, up to 1200mhz boost, and 5500mhz-rated (7000mhz 1.5v binned to 1.35v) ram...those would have made sense or been exciting....perhaps in six-or-seven months when we get 20nm parts.

This...this is shit. It basically screams 'wait for the 770-style rebrand we try to sell you in six months with a better bandwidth config that we will hail as a huge efficiency improvement' once gk106 chips exit the channel and no longer compete with it. AMD isn't much better with the 5ghz-> 6ghz ram on 7870->270(x), but at least in that case they were probably waiting for that specific ram to get cheaper, as well as the fact the initial design wasn't supremely bottle-necked by 5ghz (or most 5ghz overclockable speeds). I suppose there is a chance nvidia could rig future (20nm) maxwell designs up with a larger cache so it is less dependent on external bw and this design got the shaft because of 28nm, but I'm not holding my breath.