Tuesday, February 24th 2015

AMD's Excavator Core is Leaner, Faster, Greener

AMD gave us a technical preview of its next-generation "Carrizo" APU, which is perhaps the company's biggest design leap since "Trinity." Built on the 28 nm silicon fab process, this chip offers big energy-efficiency gains over the current-generation "Kaveri" silicon, thanks to some major under-the-hood changes.

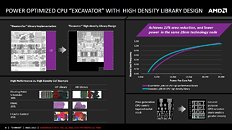

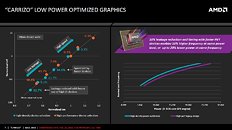

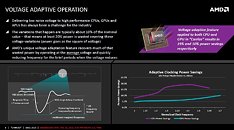

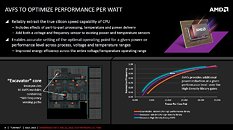

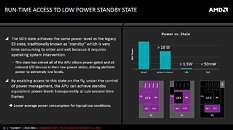

The biggest of these is the "Excavator" CPU module. Built on the same 28 nm process, it is 23 percent smaller in area than "Steamroller," thanks to a new high-density library design that reduces the module's die area. Most components are compacted: the floating-point scheduler is 38% smaller, the fused multiply-accumulate (FMAC) units are 35% smaller, and the instruction-cache controller is another 35% smaller. The "Carrizo" silicon itself uses a GPU-optimized high-density metal stack, which helps with the compaction. Each "Excavator" module features two x86-64 CPU cores, structured much the same way as in AMD's previous three CPU core generations.

The compaction in components doesn't necessarily translate into a lower transistor count than "Kaveri." In fact, Carrizo features 3.1 billion transistors (Haswell-D has 1.4 billion). Other bare-metal energy optimizations include an 18% leakage reduction over the previous generation and the use of faster RVT components, which enables 10% higher clock speeds at the same power draw as "Kaveri." A new adaptive-voltage algorithm reduces CPU power draw by 19% and iGPU power draw by 10%. AMD also introduced a few new low-power states optimized for mobile devices such as larger-than-9-inch tablets and ultra-compact notebooks, which reduce overall package power draw to less than 1.5 W when idling and a little over 10 W when active. In all, AMD is promising a conservative 5% IPC uplift for Excavator over Steamroller, but at a staggering 40% less power and 23% less die area.

The integrated GPU is also newer than the one featured on "Kaveri." It features 8 compute units (512 stream processors) based on the Graphics CoreNext 1.3 architecture, with Mantle and DirectX 12 API support, and H.265 hardware acceleration that delivers more than 3.5 times the video transcoding performance of "Kaveri." For notebook and tablet users, AMD is promising "double-digit percentage" improvements in battery life.
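Taken at face value, AMD's headline figures can be turned into a quick perf-per-watt estimate. The sketch below is back-of-envelope arithmetic only; it assumes the 5% IPC uplift and the 40% power reduction apply at the same clock speed, which AMD's figures do not spell out, and the helper names are hypothetical. It also checks the 512 stream processor figure against GCN's 64 stream processors per compute unit.

```python
# Back-of-envelope arithmetic on the figures AMD quoted for Carrizo.
# Assumptions: perf/W = (relative performance) / (relative power), and the
# 5% IPC and 40% power figures apply at the same clock speed.

GCN_STREAM_PROCESSORS_PER_CU = 64  # each GCN compute unit has 64 stream processors


def relative_perf_per_watt(ipc_gain: float, power_reduction: float) -> float:
    """Perf/W relative to the baseline core (1.0 means no change)."""
    relative_perf = 1.0 + ipc_gain          # e.g. 5% IPC uplift -> 1.05
    relative_power = 1.0 - power_reduction  # e.g. 40% less power -> 0.60
    return relative_perf / relative_power


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a GCN GPU with the given CU count."""
    return compute_units * GCN_STREAM_PROCESSORS_PER_CU


if __name__ == "__main__":
    print(f"Excavator vs Steamroller perf/W: {relative_perf_per_watt(0.05, 0.40):.2f}x")
    print(f"Carrizo iGPU stream processors: {stream_processors(8)}")
```

Under those assumptions the two claims imply roughly 1.75x the performance per watt of Steamroller; real-world gains will depend on clocks, workload, and how the power figure was measured.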

Now, if only AMD could put six of these leaner, faster, and greener "Excavator" modules onto an AM3+ chip.

85 Comments on AMD's Excavator Core is Leaner, Faster, Greener

Now, leveraging HSA to actually use shared memory between the x86 cores and the GPU shaders is an entirely different problem, one that has more to do with software design: applications must be written to take advantage of it, and only when the workload is conducive to such improvements.

All in all, I think AMD made a mistake with SMT by using dedicated shared components. It was a huge waste of time for the regular market. If AMD had invested these resources into their monolithic x86 core design with AM2+ and AM3, improving the IMC and CPU cache latencies would have done more for performance than SMT ever could have in the 95% of situations most consumers actually care about. Instead of trying to scale vertically, they decided to scale horizontally, and the result has been, in my opinion, lackluster. I just don't see how AMD is going to get out of the hole they've dug themselves into.

FP4 Socket with DDR4

www.seair.co.in/fp4-ddr4-export-data.aspx

01-Nov-2014

Banglore Air Cargo

84733099

MOTHERBOARD W/O CPU FP4-DDR4 SODIMM AMDP/N 102-H27202-00

So I can wait to see what they release, and read reviews on it when it's time.

I really hope that they nail it with something.

I hope that their next series of GPUs kick ass too!

I'll wait and see.

My 6-year-old Phenom II X6 paired with an HD7790 is gonna kill those 4-core APUs, badly. And if you're building new, as my youngest recently did, you can get an FX8300 for $115, pair it with an HD7790 or R260, and SLAUGHTER the most expensive APU in their lineup thanks to more cores and GDDR5.

So if my choice is a 4-core APU from AMD or a 4-core CPU from Intel, why would I buy AMD? After doing A/V work and gaming on 6 cores, I'll go to 8, even 10, but I won't downgrade to just 4, not when you can get 4 WITH HT from Intel. It just wouldn't make any sense.

Of course, this is why my youngest never even thought about their APUs: he already HAD 4 Phenom II cores and had just gotten an HD7790, so there would have been absolutely no point in any chip in their APU lineup... and that is the problem. There are still plenty of tasks where more REAL CPU cores can be a benefit, but if you have even the lowest-end GDDR5 card (currently a whopping $60), then nothing in their APU lineup makes ANY sense at all.

This is why I'm seriously hoping they either come out with new AM3+ chips or with 8-12 real-core AM4 chips soon, because right now I have a sinking feeling they are just gonna throw in the towel WRT all the budget gamers out there and strictly build APU granny boxes. If that is the case? Stick a fork in them, they are as good as dead, because it's all the hexa- and octo-core AM3+ guys who are keeping the fanbase alive by recommending their chips to friends. Even after this long with no new chips, there still isn't anything on the Intel side that can compete with 8 real cores for multithreading until you spend over double the price. But again, if the ONLY choices we have are a 4-core APU from AMD, stuck with a GPU that will never EVER get used if you have even a $60 card, versus a 4-core from Intel with higher single-core performance AND the option of getting a 4-core with HT down the line when the price drops... why would I stick with AMD when my hexacore gets too long in the tooth?

the whole compute core thing makes sense to me, but yeah, it's misunderstood by a lot of people, as is hsa for the most part.

it may seem strange that something more advanced and efficient than other desktop platforms started out on the lower end with no new chipset, but kaveri was the first generation. apus by design have the cpu and gpu on the same chip, so there was nothing high-end to start with without further research and refinement. kaveri is pretty good at 900p, so I would expect these new apus to be knocking on the 1080p door. due to the way technology advances, a separate cpu and gpu will remain the better-performing option, but after another generation or two people will be saying 1080p gaming is a job for one of those amd apus, so you can save money if you want.
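To put the jump from 900p to 1080p in numbers, here is a quick pixel-count comparison. It assumes "900p" means 1600x900 and "1080p" means 1920x1080 (both 16:9), and treats per-frame GPU work as scaling roughly with pixel count, which is only a first-order approximation.

```python
# Pixel-count comparison between 900p and 1080p.
# Assumptions: 900p = 1600x900, 1080p = 1920x1080; GPU load is treated as
# roughly proportional to pixels per frame (a first-order approximation).


def pixels(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height


p900 = pixels(1600, 900)    # pixels per 900p frame
p1080 = pixels(1920, 1080)  # pixels per 1080p frame
ratio = p1080 / p900        # how many times more pixels 1080p pushes

print(f"900p: {p900:,} px, 1080p: {p1080:,} px, ratio {ratio:.2f}x")
```

By this rough measure, going from 900p to 1080p means shading about 44% more pixels per frame at the same settings.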

amd's got plenty of granny boxes coming! zen.. excavator.. carrizo.. playstation.. xbox.. wii..

The simple fact is, IMHO, the entire APU concept makes ZERO sense except in mobile. In a laptop, where space is at a premium and power is severely limited? Then sure, having the CPU and GPU on one die cuts down cost and power usage. But on a desktop? Even if you get the lowest-end APU you are still getting ripped off. Look at the prices: you can get a dual-core APU with an HD8300 for $69, OR you can go to some place like Biiz and pick up an FX4300 with four REAL cores that will do any task (not just the extremely limited GPU-accelerated ones) for the same money. Once you figure in the increased cost of the APU over the CPU, plus the much faster RAM the APU needs (the GPU side of an APU is ALWAYS starved for memory bandwidth), you will simply never come out ahead, as even the lowest-end GPU with dedicated GDDR5 memory (which, as I said, is just $60, the GeForce 610 or 710 IIRC) will just slaughter the thing without effort!
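The bandwidth point can be sketched with the standard peak-bandwidth formula: bytes per transfer times transfers per second. The configurations below are assumptions, not figures from the comment: dual-channel DDR3-2133 for the APU, and a reference HD 7790 (the card mentioned earlier in the thread) with a 128-bit GDDR5 bus at 6 Gbps per pin. Actual numbers vary with the memory kit and the card.

```python
# Rough theoretical peak-bandwidth comparison: APU system RAM vs. a discrete card.
# Assumptions: APU on dual-channel DDR3-2133; reference HD 7790 with a
# 128-bit GDDR5 bus at 6 Gbps per pin (6000 MT/s effective).


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mts * 1e6 / 1e9


# Two 64-bit DDR3 channels, shared between the CPU cores and the iGPU.
apu_bw = peak_bandwidth_gbs(bus_width_bits=2 * 64, transfer_rate_mts=2133)
# 128-bit GDDR5 bus dedicated to the graphics card.
gpu_bw = peak_bandwidth_gbs(bus_width_bits=128, transfer_rate_mts=6000)

print(f"APU (DDR3-2133, dual channel): {apu_bw:.1f} GB/s, shared with the CPU")
print(f"HD 7790 (GDDR5):               {gpu_bw:.1f} GB/s, dedicated")
print(f"Ratio: {gpu_bw / apu_bw:.1f}x")
```

Under these assumptions the discrete card has nearly three times the peak bandwidth, and it does not have to share it with the CPU cores the way an APU's iGPU does.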

Look, I'm about as hardcore an AMD supporter as they come: I have 6 AMD PCs in my family, ranging from my father's Phenom I quad all the way up to my youngest's FX8300. But if you are not running a laptop? There just isn't a selling point for their APUs, there just isn't. If you build a machine with the least expensive APU and I build one with the least expensive CPU+GPU, the increased cost of the APU is gonna make it a losing proposition. Just compare the lowest ACTUAL quad-core APU with the same on the AM3+ side and it's not even funny how lopsided it is: you can get 4 REAL cores AND a GPU for less than the APU quad, so no matter how you slice it? It just doesn't make sense.