Wednesday, May 6th 2015

AMD Readies 14 nm FinFET GPUs in 2016

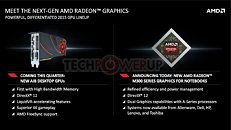

At its ongoing Investor Day presentation, AMD announced that it will continue to make GPUs for every segment of the market. The company is planning to leverage improvements to its Graphics CoreNext architecture for the foreseeable future, but is betting on a huge performance/Watt increase with its 2016 GPUs. The secret sauce here will be the shift to a 14 nm FinFET process. It's important to note that AMD refrained from mentioning "14 nm," but the mention of FinFET is a reliable giveaway. With the shift, AMD is expecting a 2x (100%) gain in performance/Watt over its current generation of GPUs.

AMD's future GPUs will focus on several market inflection points, including the arrival of CPU-efficient graphics APIs such as DirectX 12 and Vulkan, Windows 10 pulling users from Windows 7, and 4K Ultra HD displays getting more affordable (perhaps even mainstream), which it believes will help it sell enough GPUs to return to profitability. The company also announced an unnamed major design win, which will take shape this quarter and hit the market in 2016.

2015 will be far from dry for the company, as it will introduce its first GPU that will leverage HBM, offering double the memory bandwidth of GDDR5 at half its power draw. The company announced that its HBM implementation will see memory dies placed on the same package as its GPU die, so we will no longer see graphics cards with memory chips surrounding the GPU ASIC. The company will announce a few new high-end graphics cards around Computex 2015.

30 Comments on AMD Readies 14 nm FinFET GPUs in 2016

But..... will my system last that long......

And we need a price war.

To imply and falsely say (from one post, which was clarified) that he was circulating rumours is in itself, rumourtastic.

Could you refresh my memory and point to the thread? I may be wrong.

Unless of course you're not talking about HS. But you are, aren't you? Because going by previous posts, he annoys you.

Don't worry, found it:

If I say "bananas are blue", then say later, "you may be right, bananas are yellow", you can't state that I circulated mistruths about the colour of bananas, when in the same conversation, I corrected that stance.

Your trolling (because that is what you are doing) is ignoring chronological positioning of statements. If statement A is corrected or clarified by statement B, quoting statement A to prove a point is itself misleading and ignorance of printed truth.

As for the actual story, it'll be a fantastic day when AMD brings its balls to the table and proves what they can do with good direction.

@Casecutter

Your quoting me does absolutely nothing to ameliorate your pathetic attempt at trolling. Firstly highlighting a question I put forward ( Fiji's launch date might be on the slide (pun intended)? ) rather than the supposed definitive statement you assume I made. A question based upon the Hot Chips Symposium where I quite correctly highlighted that Fiji and HBM's official unveiling will take place (as opposed to public launch of product). The difference you fail to understand is that product launch and white paper publication are two distinct entities - the latter generally follows the product launch - or, more rarely, is made available at the same time.

Even after it was pointed out the response was hardly “clarified” as completely backing away from the stance.

I think members should read and decide for themselves.

www.techpowerup.com/forums/threads/nvidia-geforce-gtx-980-ti-silicon-marked-gm200-310.212205/#post-3276725

So then you would figure the cards to be much smaller...Yet concentrated heat so giant heatsinks perhaps.

HBM is pretty cool though. Surprised AMD were the first to bring it to the table.

I really hope whatever they come up will be on par with what we expect from the current 4k30 standard; in essence something close (in practical performance) to what 390x is. If you figure using pre-Tonga bandwidth requirements 390x would need 480Gbps (1.5x a 290x at 1018mhz), it would make sense to build a GPU or SOC around 2xHBM2 (at least 512Gbps).

Anything less seems a fool's errand if they plan to have any relevance after 'Xbox 3' and 'PS5'. IMHO it makes sense to tackle something that could feasibly scale up from current consoles and down from those in the future.

Lots of questions (even in gpu designs; reconfigured units and/or higher/lower clocks) until we know what voltages/clocks 14nm LPP will typically be able to accommodate, and how efficiently. Same goes for HBM2. Will AMD decide to go 'out of spec' again, as they appear to be doing for HBM1 (1250mhz at 1.16v vs 1ghz at 1v), or is that simply because of 28nm VDD efficiency and their current gpu design?

It seems pretty clear nvidia is shooting for 1v/256gbps x 3 for the top-end Pascal, but AMD has a tendency to not follow the spec/voltage rules if it allows a more cost-efficient and/or over-all design...and of course nvidia seems pretty content (at least with Maxwell) with supplementing (a minimum of) 1/3 of their bandwidth requirements with on-die cache...

Pretty excited to see how 14nm (and 16nm FF+ for that matter) develops. Because let's face it...this, ladies and gentlemen, is the threshold we've been waiting years and years to happen. AMD design methodologies will finally make sense wrt transistors/tdp/available bandwidth and certain performance realities will hit certain markets; cheap 1080p gpus (and igps) that don't need to make compromises, realistic 4k solutions...etc. On top of that, mobile chips (be it Kirin, 820...even Tegra) should finally be simply spectacular compared to what they need to be able to realistically accomplish.

2016 should be one hell of a year all-around.

There are plenty of things to criticize AMD for, but being first is not one of them.

AMD has been first with most of the major advances in GPU tech in the last 10 years: in memory, fully compliant DX hardware, and connectivity. There's a lot of things I didn't mention too, like driver access to overclocking, fan settings, enthusiast controls, etc.

It's completely expected for AMD to be first with HBM.