Friday, May 27th 2016

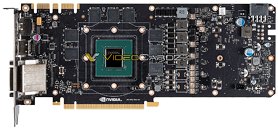

NVIDIA GeForce GTX 1070 Reference PCB Pictured

Here's the first picture of an NVIDIA reference-design PCB for the GeForce GTX 1070. The PCB (PG411) is similar to that of the GTX 1080 (PG413), except for two major differences: VRM and memory. The two PCBs are pictured below in that order. The GTX 1070 PCB features one fewer VRM phase than the GTX 1080. The other major difference is that it features larger GDDR5 memory chips, compared to the smaller GDDR5X memory chips found on the GTX 1080. These are 8 Gbps chips, and according to an older article, they run at the maximum clock speed their specification allows, at which the memory bandwidth works out to 256 GB/s. The GeForce GTX 1070 will be available by June 10th.
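As a rough check on the 256 GB/s figure, peak memory bandwidth is the per-pin data rate multiplied by the bus width, divided by 8 bits per byte. The sketch below assumes a 256-bit memory bus on both cards and a 10 Gbps data rate for the GTX 1080's GDDR5X; neither number is stated in the article, and the function name is only illustrative.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in Gbit/s (8 for the GDDR5 chips above).
    bus_width_bits: memory bus width in bits (256 is an assumption here).
    """
    # Total Gbit/s across the whole bus, divided by 8 bits per byte.
    return data_rate_gbps * bus_width_bits / 8


# GTX 1070: 8 Gbps GDDR5 on an assumed 256-bit bus.
gtx_1070 = memory_bandwidth_gb_s(8, 256)

# GTX 1080: assumed 10 Gbps GDDR5X on an assumed 256-bit bus.
gtx_1080 = memory_bandwidth_gb_s(10, 256)

print(f"GTX 1070: {gtx_1070:.0f} GB/s")
print(f"GTX 1080: {gtx_1080:.0f} GB/s")
```

Under those assumptions the GTX 1070 comes out at 256 GB/s, matching the figure quoted above.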

Source: VideoCardz

37 Comments on NVIDIA GeForce GTX 1070 Reference PCB Pictured

And yes it is fast, to the point that the HBM1 dream died.

Meh

Purely marketing?

Made the card small and nice looking, felt new and fresh and on the X it had a water cooler to boot.

Good marketing that, plus a pretty solid card (that was a tad too pricey sure).

But yeah that was pure marketing, soooo GDDR5X on the GTX1080 is as well I guess?

GTX1080 = 320 GB/s / 2560 = 128 MB/s per CUDA core (1 GB = 1024 MB)

GTX1070 = 256 GB/s / 1920 = 136.5 MB/s per CUDA core (1 GB = 1024 MB)

Just food for thought.
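For anyone who wants to redo that per-core arithmetic, here is a minimal sketch. The bandwidth and core counts are the ones quoted in the comment above; the function name and the `mb_per_gb` parameter are made up for illustration. The quoted 128 and 136.5 only come out if 1 GB is counted as 1024 MB; with decimal units the results are 125 and roughly 133.3 MB/s.

```python
def bandwidth_per_core_mb_s(bandwidth_gb_s: float, cuda_cores: int,
                            mb_per_gb: int = 1000) -> float:
    """Memory bandwidth per CUDA core in MB/s.

    mb_per_gb is 1000 for decimal units; pass 1024 to reproduce
    the convention the quoted figures appear to use.
    """
    return bandwidth_gb_s * mb_per_gb / cuda_cores


# Bandwidth (GB/s) and CUDA core counts as quoted in the comment.
cards = {"GTX1080": (320, 2560), "GTX1070": (256, 1920)}

for name, (bandwidth, cores) in cards.items():
    decimal = bandwidth_per_core_mb_s(bandwidth, cores)
    binary = bandwidth_per_core_mb_s(bandwidth, cores, mb_per_gb=1024)
    print(f"{name}: {decimal:.1f} MB/s per core "
          f"({binary:.1f} with 1 GB = 1024 MB)")
```

Either way the GTX 1070 ends up with slightly more bandwidth per core than the GTX 1080, which is the point the comment is making.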

Simply throwing more memory bandwidth at a GPU is not always going to yield better performance. AMD has been trying that strategy for generations, and it obviously isn't working. If the GPU itself can't process any faster, then the extra memory bandwidth goes to waste. So the GTX1080, with its roughly 25% faster GPU, can probably benefit from the higher memory bandwidth, while the slower GTX1070 probably wouldn't benefit that much from it.

The GTX 1080 on the other hand is the fastest thing on the market right now.

Hopefully a larger chip with HBM2 will be cheaper. ;)

On two identical cards limited to 4 GB, I think HBM would come out on top. But that test will never happen.

www.hardocp.com/article/2016/05/24/xfx_radeon_r9_fury_triple_dissipation_video_card_review#.V0jv1hUrIuU

I guess you guys are right; after all, it's NVIDIA that actually makes a profit.

Still, it goes a long way toward telling whether HBM's added bandwidth is really useful or not.