Thursday, January 12th 2017

AMD's Vega-based Cards to Reportedly Launch in May 2017 - Leak

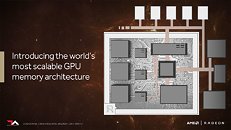

According to WCCFTech, AMD's next-generation Vega graphics architecture will launch in consumer graphics cards by May 2017. The website claims AMD will have Vega GPUs available in several SKUs, based on at least two different chips: Vega 10, the high-end part with apparently stupendous performance, and a lower-performance part, Vega 11, which is expected to succeed Polaris 10 in AMD's product stack, offering slightly higher performance at vastly better performance/Watt. WCCFTech also points out that AMD may show a dual-chip Vega 10×2 based card at the event, though it says the card may only be available at a later date.

AMD is expected to begin the "Vega" architecture lineup with the Vega 10, an upper-performance segment part designed to disrupt NVIDIA's high-end lineup, with a performance positioning somewhere between the GP104 and GP102. This chip should carry 4,096 stream processors, with up to 24 TFLOP/s 16-bit (half-precision) floating point performance. It will feature 8-16 GB of HBM2 memory with up to 512 GB/s memory bandwidth. AMD is looking at typical board power (TBP) ratings around 225 W.

Next up is "Vega 20", which is expected to be a die-shrink of Vega 10 to the 7 nm GF9 process being developed by GlobalFoundries. It will supposedly feature the same 4,096 stream processors, but likely at higher clocks, up to 32 GB of HBM2 memory at 1 TB/s, PCI-Express gen 4.0 bus support, and a typical board power of 150 W.

AMD plans to roll out the "Navi" architecture some time in 2019, which means the company will use Vega as their graphics architecture for two years. There's even talk of a dual-GPU "Vega" product featuring a pair of Vega 10 ASICs.

Source: WCCFTech


187 Comments on AMD's Vega-based Cards to Reportedly Launch in May 2017 - Leak

You probably meant that the ratio of average performance in TFLOPs versus peak theoretical performance in TFLOPs decreases.

I can bring up noise every time, since I'm absolutely right about Nvidia cards being quieter and way less power hungry, which has to do with the noise as well. Then compare a custom R9 290X vs. a custom 780 Ti and you'll see I'm right. They are way more efficient and a lot faster. Also, multi-monitor, idle and Blu-ray / web power consumption is still a mess on those AMD cards. Efficiency doesn't only matter when you play games.

I'm gonna drop this discussion now, cause you're a fanboy and just a waste of time. Safe to say, you didn't prove anything I've said wrong. The opposite is true and you totally confirmed my point with your cherry picking. Try that with someone else.

You're on Ignore, byebye.

www.techpowerup.com/reviews/ASUS/R9_290X_Direct_Cu_II_OC/

www.techpowerup.com/reviews/MSI/GTX_780_Ti_Gaming/

The custom 780 Ti was 15% faster than the custom 290X at 1080p and cost 18% more, which gives the custom 290X better performance/price. The custom 780 Ti also consumed 6% more power in an average gaming session, making it only about 10% more efficient at gaming than the custom 290X. Nowadays, in modern games, the reference 780 Ti and 290X are on par in performance at 1080p, which we can probably safely extrapolate to custom cards. You do the math.
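For anyone who wants to check the ratios above, here is a minimal sketch that uses only the three percentages quoted in this comment (15% faster, 18% more expensive, 6% more power). The variable names are mine, and the 290X is treated as the 1.0 baseline. Under those assumptions the 780 Ti comes out at roughly 2.5% worse performance per dollar and about 8.5% better performance per watt, which is in the same ballpark as the "10%" figure.

```python
# Ratios of the custom GTX 780 Ti relative to the custom R9 290X (290X = 1.0),
# taken from the percentages quoted in the comment above.
perf_ratio = 1.15   # 15% faster at 1080p
price_ratio = 1.18  # 18% more expensive
power_ratio = 1.06  # 6% more power in an average gaming session

# Relative performance per dollar and per watt of the 780 Ti.
perf_per_price = perf_ratio / price_ratio
perf_per_watt = perf_ratio / power_ratio

print(f"780 Ti perf/price vs 290X: {(perf_per_price - 1) * 100:+.1f}%")
print(f"780 Ti perf/watt  vs 290X: {(perf_per_watt - 1) * 100:+.1f}%")
```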

As I said before, you either didn't read the reviews or you have a really bad memory. Pick one. I'm pulling out of this debate as it's not the topic of this thread, however I did enjoy proving you wrong ;).

Let's analyze the power consumption in other areas (single monitor only of course, since the number of multimonitor users is way lower):

The difference in single monitor idle is 10 Watts. This is also the difference between the cards at average gaming. If you leave the 290X idling, it can switch to ZeroCore Power mode, which consumes virtually no power (I measured it myself on my 7750 and you can find measurements online), but I'm putting 2 W there so you won't say I'm cheating ...

If you leave the computer running 24/7 and it idles 12 hours, you game 6 hours, watch a Blu-ray movie for 2 hours and do other things for 4 hours, the 780 Ti will consume (12h*10W + 6h*230W + 2h*22W + 4h*10W)/24h = 60-70 W on average and the 290X will consume (12h*2W + 6h*219W + 2h*74W + 4h*20W)/24h = 60-70 W on average. Virtually the same! You can say whatever you want, but the numbers are on my side :D.
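The daily mix above is easy to recompute. This is a minimal sketch using only the hours and wattages quoted in this comment; the dictionary keys and function names are my own labels, not anything from the reviews. Note that watt-hours divided by hours gives an average draw in watts, not "watts per hour".

```python
# Hours per day in each state and card power draw (W) per state,
# exactly as quoted in the comment above.
HOURS = {"idle": 12, "gaming": 6, "bluray": 2, "other": 4}
POWER_W = {
    "GTX 780 Ti": {"idle": 10, "gaming": 230, "bluray": 22, "other": 10},
    "R9 290X": {"idle": 2, "gaming": 219, "bluray": 74, "other": 20},
}

def daily_energy_wh(power_by_state, hours_by_state=HOURS):
    """Energy used over one day in watt-hours (power times time, summed)."""
    return sum(power_by_state[state] * h for state, h in hours_by_state.items())

def average_power_w(power_by_state, hours_by_state=HOURS):
    """Time-weighted average power draw in watts."""
    return daily_energy_wh(power_by_state, hours_by_state) / sum(hours_by_state.values())

for card, power in POWER_W.items():
    print(f"{card}: {daily_energy_wh(power)} Wh/day, "
          f"{average_power_w(power):.2f} W average")
# GTX 780 Ti: 1584 Wh/day, 66.00 W average
# R9 290X: 1566 Wh/day, 65.25 W average
```

With these inputs the two cards land within about 1 W of each other, which matches the "virtually the same" conclusion for this particular usage mix. A different split of hours would shift the result.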

Fermi had a huge die size compared to Evergreen and its successors ... AMD already had the 5870, which the 480 couldn't quite dethrone without high tessellation (Evergreen's Achilles heel) ... thing is, the 580 was a proper Fermi improvement (performance and power, temperature and noise improvement) and the 6000 series wasn't an improvement at all over Evergreen.

Two 5000 or 6000 series GPUs in CrossFire had huge issues with frame pacing back then (and it was an issue all the way to the Crimson driver suite), so they would actually beat any Fermi in average frame rate, but measured frame time variations made it feel almost the same. Maybe that's what you are referring to?

I believe the performance lead for Fermi came later with driver maturity ... and by that time it was GTX 580 vs HD 6970, a win for Nvidia, and very soon after GTX 580 vs 7970, a win for AMD, until Kepler and so on.

btw.

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_480_Fermi/32.html

Post 1: www.techpowerup.com/forums/threads/amds-vega-based-cards-to-reportedly-launch-in-may-2017-leak.229550/#post-3584964

Post 2: www.techpowerup.com/forums/threads/amds-vega-based-cards-to-reportedly-launch-in-may-2017-leak.229550/page-4#post-3585723

The table for reference:

AMD's larger dies have lower performance per flop.

Before you reply that this is a stupid methodology, please put together something based on a methodology that you think is good and use that supporting data.

Am I missing something?

Consumers couldn't care less if there was something the size of a postage stamp or an 8.5"x11" die under there... really.

Since you are depicting a trend in your graph, it should work out if and only if everything is measured at the same resolution (either that or an all-resolution summary) and GPU usage is the same in all games/samples. The second condition is a difficult one.

Why usage? Because, for example, an 8 TFLOPS GPU at 75% usage skews your perf/FLOPS ratio compared to, say, an 8 TFLOPS GPU at 100% usage.
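To put a number on that skew, here is a toy sketch. It assumes measured performance scales linearly with the FLOPS actually used, which real games only approximate, and `fps_per_effective_tflop` is a made-up constant purely for illustration.

```python
def perf_per_peak_tflop(peak_tflops, utilization, fps_per_effective_tflop=10.0):
    """Measured performance divided by peak (not effective) TFLOPS.

    Assumes fps is proportional to the TFLOPS actually in use;
    fps_per_effective_tflop is a hypothetical scaling constant.
    """
    effective_tflops = peak_tflops * utilization
    fps = effective_tflops * fps_per_effective_tflop
    return fps / peak_tflops

full = perf_per_peak_tflop(8.0, 1.00)     # 8 TFLOPS GPU at 100% usage
partial = perf_per_peak_tflop(8.0, 0.75)  # 8 TFLOPS GPU at 75% usage

print(f"perf per peak TFLOP at 100% usage: {full}")
print(f"perf per peak TFLOP at 75% usage:  {partial}")
print(f"apparent efficiency ratio: {partial / full}")
```

Under this linear assumption the same chip looks 25% less efficient per peak TFLOP simply because it was not fully loaded, regardless of the constant chosen.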

As I said, good thing you extrapolated a linear trend out of the data points to combat the gpu usage skew.

I'm not even going into how in one game to calculate a single pixel you need X floating point operations, and for another game 2X floating point operations ... but again same games were used so relative trend analysis works out

I may have come across as a dick, which wasn't my intention, but I admit that the fact that you are looking for a trend and the relative AMD vs Nvidia relation between trends (using averages and the same games) helps make your methodology work.