Thursday, January 12th 2017

AMD's Vega-based Cards to Reportedly Launch in May 2017 - Leak

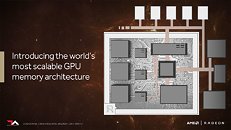

According to WCCFTech, AMD's next-generation Vega graphics architecture will launch in consumer graphics cards by May 2017. The website claims AMD will have Vega GPUs available in several SKUs, based on at least two different chips: Vega 10, the high-end part with apparently stupendous performance, and a lower-performance part, Vega 11, which is expected to succeed Polaris 10 in AMD's product stack, offering slightly higher performance at vastly better performance/Watt. WCCFTech also points out that AMD may show a dual-chip Vega 10×2 based card at the event, though it may only become available at a later date.

AMD is expected to begin the "Vega" architecture lineup with the Vega 10, an upper-performance segment part designed to disrupt NVIDIA's high-end lineup, with a performance positioning somewhere between the GP104 and GP102. This chip should carry 4,096 stream processors, with up to 24 TFLOP/s 16-bit (half-precision) floating point performance. It will feature 8-16 GB of HBM2 memory with up to 512 GB/s memory bandwidth. AMD is looking at typical board power (TBP) ratings around 225W.
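As a rough sanity check on the rumored Vega 10 figures, the short Python sketch below works backwards from 4,096 stream processors and 24 TFLOP/s FP16 to the clock speed those numbers would imply. It assumes each stream processor retires one fused multiply-add per clock (2 FLOPs) and that FP16 runs at twice the FP32 rate through packed math; neither assumption is confirmed by the leak, and the function name is purely illustrative.

```python
def implied_clock_ghz(tflops, stream_processors, flops_per_sp_per_clock):
    """Return the core clock (GHz) needed to reach a given TFLOP/s figure."""
    flops = tflops * 1e12
    return flops / (stream_processors * flops_per_sp_per_clock) / 1e9

# Rumored figures from the article.
STREAM_PROCESSORS = 4096
FP16_TFLOPS = 24

# Assumptions (not from the leak): one FMA = 2 FLOPs per clock,
# and packed FP16 doubles that to 4 FLOPs per clock per stream processor.
FP32_FLOPS_PER_SP = 2
FP16_FLOPS_PER_SP = 4

clock_ghz = implied_clock_ghz(FP16_TFLOPS, STREAM_PROCESSORS, FP16_FLOPS_PER_SP)

# FP32 throughput at that same clock, under the same assumptions.
fp32_tflops = STREAM_PROCESSORS * FP32_FLOPS_PER_SP * clock_ghz * 1e9 / 1e12

print(f"Implied core clock: {clock_ghz:.3f} GHz")
print(f"Implied FP32 throughput: {fp32_tflops:.1f} TFLOP/s")
```

Under these assumptions the rumored FP16 figure would correspond to a core clock of roughly 1.46 GHz and about 12 TFLOP/s of single-precision throughput. If the FP16 rate is not doubled, the implied clock would be twice as high, which is why the packed-math assumption matters here.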

Next up is "Vega 20", which is expected to be a die-shrink of Vega 10 to the 7 nm GF9 process being developed by GlobalFoundries. It will supposedly feature the same 4,096 stream processors, but likely at higher clocks, up to 32 GB of HBM2 memory at 1 TB/s, PCI-Express gen 4.0 bus support, and a typical board power of 150W.

AMD plans to roll out the "Navi" architecture some time in 2019, which means the company will use Vega as their graphics architecture for two years. There's even talk of a dual-GPU "Vega" product featuring a pair of Vega 10 ASICs.

Source:

WCCFTech

187 Comments on AMD's Vega-based Cards to Reportedly Launch in May 2017 - Leak

I think you have forgotten what we were discussing here. Some claim Vega will be more efficient than Pascal, and since Vega 10 will compete with GP104, it's a fair comparison. GP106 is 55% more efficient, GP104 ~80% more efficient and GP102 ~85% more efficient, so AMD has its work cut out.

EDIT: Actually did the calculations and Pascal is 59% more efficient on average. 460, 470, and 480 vs 1050 Ti, 1060 3 GB, 1060 6 GB, 1070, and 1080.

Edited because there's no point talking performance.

Too many people wave the DX12 flag as if it's magical. It's not. It brings no better gfx settings and is so far proving hard to code.

People get pissed off Nvidia performs so well with such a lean efficient design and people get pissed off because AMD are doing better.

People ought to look at things from outside the arena and see how fantastic both things are for us consumers in the long run.

Hardware has to be available

Developers willing to program for

Enough of a customer base to make it worth it

Nobody has it nailed down and as you rightly say, it's going to take quite a while to get it right. Still a lot of folk on W7 as well (raises hand) which doesn't help the cause.

This is what happens when there's no competition. Not to mention lack of competition is BORING for hardware enthusiasts like myself.

Agreed.

The thing is, there's huge improvement in performance using DX12 / Vulkan. I've had the opportunity to test the 8GB RX 480 (Asus Strix) and a 4GB RX 470 (PowerColor RedDragon V2) and I have to say I was impressed.

The Titan X Maxwell might not have the excellent DP capability of the Kepler-based Titan, but its spec is exactly the same as the Quadro M6000's. So if you don't need the certified drivers that come with Quadro, the Titan X can easily replace the Quadro M6000 at a far cheaper price (5k vs 1k). In fact, as AnandTech pointed out, some companies actually build their products using the Titan X Maxwell instead of Quadro or Tesla. The Titan X Pascal was sold for its unlocked INT8 performance.

The Titan X Maxwell is a bit weird since it has no advantage over a regular GeForce-based card. Its only real advantage is the 12 GB of VRAM, which gives it the same spec as the Quadro M6000. But I've heard talk that some people sought it out for its FP16 performance. That might be the reason Nvidia ended up crippling FP16 in non-Tesla cards with Pascal.

Also, 1060 outperforming 480 in your chart is... somewhat outdated.

Well, interesting to mention that 6 months after release the 480 took over.

www.techpowerup.com/forums/threads/rx-480-vs-1060-several-month-ago-vs-now-the-amd-driver-improving-over-time-is-not-a-myth.228443/

Bullshit:

www.computerbase.de/2017-01/geforce-gtx-1050-ti-asus-strix-msi-gaming-test/3/#abschnitt_leistungsaufnahme

Thanks to TPU benchmarks (I have yet to find a site that claims the 480 consumes more than the 1070), in practice negligible power consumption differences are hyped into orbit.

The odd arguments these people keep making are quite puzzling, no?

-Why do people keep comparing Hawaii and Fiji TFLOPS to Vega, when Polaris is much closer architecturally?

-Why do people keep exaggerating how powerful the Titan is?! As usual people seem to be misled by the name - it's only ~45% stronger than the Fury X depending on the game and resolution. Do people really think it's going to be hard for AMD to make a card 50% stronger than a card they made 2 years ago?!

-Overall, why do people continue to just assume AMD can't make powerful cards? The 5870 and 5970 practically had supremacy in the Enthusiast market for a FULL YEAR. The 7970 and then GHz edition essentially held the performance crown for an entire year until Nvidia released a larger card that cost $1000, and then the 290X crushed the Titan and 780 Ti HARD. AMD is no stranger to performance wins.

I don't see the 780 Ti being crushed HARD, as you put it. I appreciate you prefer AMD (I've seen you on other sites lauding AMD to the moon) but your abuse of truth is silly.

Again, I hope the Vega card is more powerful than most people are expecting. I'll be very pissed off if a card I'm stalling an entire build for doesn't match (or beat) the rumoured 1080 Ti. I'm in a win-win here - I want Vega to perform well - I'm not a zealot like some Nvidia owners can be. But you're always a wee bit too red-pumped to be taken seriously.

Here's something from 2016:

tpucdn.com/reviews/NVIDIA/GeForce_GTX_1080/images/perfrel_3840_2160.png

tpucdn.com/reviews/NVIDIA/GeForce_GTX_1080/images/perfrel_2560_1440.png

780 Ti almost losing to a 7970 GHz (Which beat the pathetic 780).

To be clear I didn't cherry pick at all. I simply googled "290X 780 Ti 2016". That is the first thing that popped up.

a) 7970 lost to GTX 680 when the 680 was released, but it had held the performance crown for about 3 months. Later the 7970 GHz Edition equalled the performance of the GTX 680, or was a bit faster, but with way higher power consumption.

b) 290X was faster than GTX 780 at release and had a good fight with GTX Titan. Nvidia then released the 780 Ti to counter the 290X/Hawaii GPUs and the 780 Ti easily won vs. the 290X. Later custom cards came closer to the performance of the 780 Ti, but the 780 Ti custom versions were again faster.

c) The only thing you had right was the HD 5870 and 5970 being unmatched until the GTX 480 and GTX 500 series came. The GTX 480 came late and was faster, but consumed a hell of a lot of power. The GTX 580 easily won vs. the HD 5000 and HD 6000 series.

This is all about release performance and at most 6 months after it. It's pretty irrelevant to compare performance now while disregarding the release performance of the GPUs, as nobody buys a GPU to have more performance than the competitor's GPU 3 years later.

Also @the54thvoid is right, here are the other tables:

www.techpowerup.com/reviews/MSI/R9_290X_Lightning/24.html

That's (one of the best) custom 290X losing to reference 780 Ti. Custom 780 Ti is even faster. 290X simply had no chance.

Sorry but I don't need to read your long fanboy rant. Continue to live in the past - All Nvidiots do by necessity.

Oh.... He can't read. That's sad.

Also calling someone else "nvidiot", and behaving like a fanboy is really funny. Seems you don't see your own behaviour as fanboyism, but it is.

You're a really funny person. I'd say the only fanboy here is you.

I posted the aggregate framerate - that includes Nvidia's broken "The Way It's Meant to not Boot" games.

Most people keep their cards for 2-3 years on average. Even at launch the 780 Ti was tied in 4K, and only won by 10% at most in 1080p (1440p depended on the game). Nobody with half a brain pays 30% more money for 0-10% more performance that will only last a year. Considering the 780 Ti came out AFTER the 290X, I would call it pretty pathetic.

Yes, the 290X is better now than it was years ago, but that doesn't change the fact that nobody can see the future. People paying over 500 bucks for a GPU mostly chose the 780 Ti, because it was simply the better all-around GPU: in power consumption, in performance by far (custom GPU vs. custom), and Nvidia also simply had better drivers, at least until a few months ago. 4K didn't play the slightest role back then, and it's not even really important now. At 1440p and 1080p, which I posted, even the reference 780 Ti had an easy win vs. the Lightning 290X, which is one of the best 290X cards, and this was many months after the first release of the 290X, so drivers were already a lot better. You can say that the 290X/390X is on the same level or better now, but power consumption is still a mess (idle, average gaming, multi-monitor, Blu-ray/web). So in the end, it's still not really better in my book, as I'm using multi-monitor and I don't want to waste 35-40W. I also don't want a GPU that consumes 250-300W of power, and that's especially true for the 390X, which is even more power hungry than the 290X. Nvidia GPUs are way more sophisticated in power gating and much more flexible with core clocking, and only Vega can change that, because Polaris didn't. And yes, I hope that Vega is a success.

Comparing the 290/390X with the 980 is just laughable. The 980 is a ton more efficient. Maybe efficiency is not important for you, but for millions of users it is. It also has to do with noise: the 780 Ti/980 aren't as loud as the 290/390X.

But I'm still laughing about you calling me an "Nvidiot fanboy". I'm a regular poster on the AMD subreddit, I've owned several Radeon cards and I know ~everything about AMD. If anything, I'm more likely a fanboy of AMD than Nvidia, but this doesn't change certain facts that you can't change either.

¯\_(ツ)_/¯

I have owned plenty of Nvidia cards (Haven't owned any for a few years now though). However I honestly don't believe you when you say you own AMD cards considering the continued noob arguments I keep hearing.

The 390X is noisy, huh? Um, no, they were all whisper-quiet AIB cards. Of course you probably don't know that because you are clearly uninformed on all of these cards from top to bottom. I mean, the 290X was hot, but not loud if using its default fan settings; and again - that's for the cheap launch cards. If you bought the plentifully available AIB cards you would know they were very quiet. Quite funny you bring up noise when the Titan series (and now the 1070/80 FE) have been heavily criticized for their under-performing and noisy fan systems.

Also the efficiency argument is my favorite myth. Only with the release of Maxwell did Nvidia start to have any efficiency advantage at all, and that was only against the older generation AMD cards. I will leave you with this:

^WOW! A full 5-10% more efficient! (depending on the card). Anyone who thinks that is worth mentioning is simply looking for reasons to support "Their side."

Pascal was really the first generation Nvidia won efficiency in any meaningful way.

Yeah it's a nice little solar system :D

March for Nvidia, May for AMD, seems reasonable. Maybe it's just enough time to fine-tune it against the 1080 Ti..