Thursday, January 12th 2017

AMD's Vega-based Cards to Reportedly Launch in May 2017 - Leak

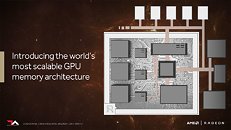

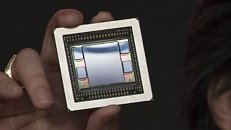

According to WCCFTech, AMD's next-generation Vega graphics architecture will launch in consumer graphics cards by May 2017. The website claims AMD will have Vega GPUs available in several SKUs, based on at least two different chips: Vega 10, the high-end part with apparently stupendous performance, and a lower-performance part, Vega 11, which is expected to succeed Polaris 10 in AMD's product stack, offering slightly higher performance at vastly better performance/Watt. WCCFTech also points out that AMD may show a dual-chip Vega 10×2 based card at the event, though it says the card may only be available at a later date.

AMD is expected to begin the "Vega" architecture lineup with the Vega 10, an upper-performance segment part designed to disrupt NVIDIA's high-end lineup, with a performance positioning somewhere between the GP104 and GP102. This chip should carry 4,096 stream processors, with up to 24 TFLOP/s 16-bit (half-precision) floating point performance. It will feature 8-16 GB of HBM2 memory with up to 512 GB/s memory bandwidth. AMD is looking at typical board power (TBP) ratings around 225W.
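As a rough sanity check on the rumored figures, the quoted half-precision throughput can be turned into an implied shader clock. The sketch below is not from the leak: it assumes each stream processor retires one fused multiply-add per clock (2 FLOPs) and that FP16 runs at twice the FP32 rate through packed math, giving 4 FLOPs per stream processor per clock. The function name and constant are hypothetical, chosen for illustration only.

```python
def implied_clock_ghz(peak_tflops: float, stream_processors: int,
                      flops_per_sp_per_clock: int) -> float:
    """Clock (in GHz) needed to reach a given peak throughput."""
    flops_per_clock = stream_processors * flops_per_sp_per_clock
    return peak_tflops * 1e12 / flops_per_clock / 1e9


# Assumption (not stated in the leak): FMA counts as 2 FLOPs,
# and packed FP16 doubles that to 4 FLOPs per stream processor per clock.
FP16_FLOPS_PER_SP_PER_CLOCK = 4

# Rumored Vega 10 figures from the article: 24 TFLOP/s FP16, 4,096 stream processors.
vega10_clock_ghz = implied_clock_ghz(24, 4096, FP16_FLOPS_PER_SP_PER_CLOCK)
print(f"Implied Vega 10 clock: {vega10_clock_ghz:.2f} GHz")
```

Under those assumptions the rumored numbers would imply a clock a little under 1.5 GHz; a different FLOPs-per-clock assumption would change the result proportionally.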

Next up is "Vega 20", which is expected to be a die-shrink of Vega 10 to the 7 nm GF9 process being developed by GlobalFoundries. It will supposedly feature the same 4,096 stream processors, but likely at higher clocks, along with up to 32 GB of HBM2 memory at 1 TB/s, PCI-Express gen 4.0 bus support, and a typical board power of 150W.

AMD plans to roll out the "Navi" architecture sometime in 2019, which means the company will use Vega as its graphics architecture for two years. There's even talk of a dual-GPU "Vega" product featuring a pair of Vega 10 ASICs.

Source: WCCFTech

187 Comments on AMD's Vega-based Cards to Reportedly Launch in May 2017 - Leak

And yes, those drivers aren't even remotely optimal. With 10% faster speed than the 1080 in Doom, plus further optimizations and higher clock speeds (it was probably thermal throttling in that closed cage, with blocked fans that don't help get the heat out of the case), it is indeed possible that it could rival the Titan XP / GP102.

It feels like the time between an AMD product announcement and the first purchase date is the same as a generation for Nvidia and Intel. My guess is that promotion of their products is much more important to AMD than product development.

The Vega cards replace the Fury cards, not the 290/390 SKUs, from what I have seen in the roadmap.

I honestly think AMD banked on Polaris clocking a good bit higher and being competitive with the 1070, but was horribly let down by GloFo. AMD has a weird gap, yes, but I do not think it was Vega; I think it was Polaris failing them.

The GP102 and GP100 are different because of HBM2. Nvidia probably felt that they didn't need "next-gen" memory for their gaming flagship, or that saving a few more dollars with GDDR5X was the better idea, and a single card cannot support these two competing memory technologies.

The Titan is still marketed as a workstation card. I do wonder why.

We've seen something similar with HyperMemory and TurboCache on low-end cards, where system memory seamlessly behaved like on-board graphics memory. It saved costs while delivering nearly the same performance. With systems that have quad-channel DDR4 RAM, I can see that as viable storage for game data.

This is not the same as HyperMemory/TurboCache. Those used system memory as an extended frame buffer to increase the frame buffer of the card. The dynamic part was only changing the size of the system RAM frame buffer.

Quad-channel DDR4 is not viable for high-end gaming. Your 2400 MHz RAM has a theoretical bandwidth of 76.8 GB/s, which is about the same as an R7 250 at 73.6 GB/s.
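The arithmetic behind those two bandwidth figures can be sketched as follows. This is a minimal illustration, not something from the comment itself: it assumes a 64-bit bus per DDR4 channel at 2400 MT/s, and for the R7 250 it assumes the GDDR5 variant with a 128-bit bus at an effective 4600 MT/s, values picked because they reproduce the quoted numbers. The function name is hypothetical.

```python
def peak_bandwidth_gb_s(transfers_mt_s: float, bus_width_bits: int,
                        channels: int = 1) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mt_s * 1e6 * bytes_per_transfer * channels / 1e9


# Assumption: DDR4-2400, 64-bit per channel, four channels.
quad_ddr4_2400 = peak_bandwidth_gb_s(2400, 64, channels=4)

# Assumption: R7 250 GDDR5 variant, 128-bit bus, 4600 MT/s effective.
r7_250_gddr5 = peak_bandwidth_gb_s(4600, 128)

print(f"Quad-channel DDR4-2400: {quad_ddr4_2400:.1f} GB/s")
print(f"R7 250 (GDDR5):         {r7_250_gddr5:.1f} GB/s")
```

These are theoretical peaks only; a GPU reading system memory would also be limited by the PCI-Express link, which the comparison above does not account for.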

It's sticking to 16nm and will not be moving to 10nm. Also, there are rumors that they needed to pull it up to meet a government contract.