Tuesday, February 28th 2017

Is DirectX 12 Worth the Trouble?

We are at the 2017 Game Developers Conference, where we were invited to one of the many enlightening tech sessions, titled "Is DirectX 12 Worth it," by Jurjen Katsman, CEO of Nixxes, a company credited with several successful PC ports of console games (Rise of the Tomb Raider, Deus Ex Mankind Divided). Over the past 18 months, DirectX 12 has become the selling point to PC gamers for everything from Windows 10 (free upgrade) to new graphics cards, and even games, with the lack of DirectX 12 support even denting the PR of certain new AAA game launches until the developers added support for the new API through patches. Game developers are asking the dev community at large to manage its expectations of DirectX 12, the underlying point being that it isn't a silver bullet for all the tech limitations developers have to cope with, and that reaping all its performance rewards takes a proportionate amount of effort from developers.

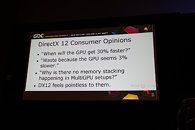

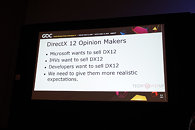

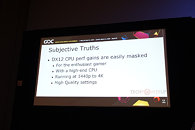

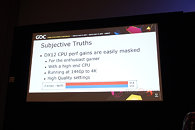

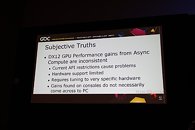

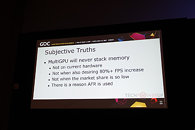

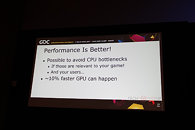

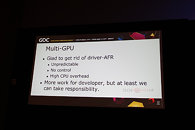

The presentation begins with the speaker talking about the disillusionment consumers have about DirectX 12, and how they're yet to see the kind of console-rivaling performance gains DirectX 12 was purported to bring. Besides the lack of huge performance gains, consumers eagerly await the multi-GPU utopia that was promised to them, in which not only can you mix and match GPUs of your choice across models and brands, but also have them stack up their video memory - a theoretical possibility with DirectX 12, but one which developers argue is easier said than done in the real world.

One of the key ways DirectX 12 is designed to improve performance is by distributing rendering overhead evenly among the cores of a multi-core CPU. For high-performance desktop users with reasonably fast CPUs, the gains are negligible. This also goes for people gaming at higher resolutions, such as 1440p and 4K Ultra HD, where frame-rates are low and performance tends to be more GPU-limited.

The other big feature introduced to the mainstream with DirectX 12 is asynchronous compute. There have been examples of games that gain more performance from it on one brand of GPU than on the other, and this is a problem, according to developers. Hardware support for async compute is limited to only the latest GPU architectures, and requires further tuning specific to the hardware. Performance gains seen on closed ecosystems such as consoles, hence, don't necessarily carry over to the PC. It is therefore concluded that performance gains with async compute are inconsistent, and hence developers should spend their time on making a better game rather than focusing on a very specific hardware user-base.

The speakers also cast doubt on the viability of memory stacking on multi-GPU - the idea that the memory of two GPUs simply adds up to become one large addressable block. To begin with, the multi-GPU user-base is still far too small for developers to spend more effort than simply hashing out an AFR (alternate frame rendering) path for their games. AFR is the most common performance-improving multi-GPU method, in which each GPU in a multi-GPU setup renders an alternate frame for the master GPU to output in sequence; it requires that each GPU has a copy of the video memory mirrored with the other GPUs. Having to fetch data from the physical memory of a neighboring GPU is a performance-costly and time-consuming process.

The idea behind new-generation "close-to-the-metal" APIs such as DirectX 12 and Vulkan has been to make graphics drivers as irrelevant to the rendering pipeline as possible. The speakers contend that the drivers are still very relevant, and that with the advent of the new APIs their complexity has in fact gone up further, in the areas of memory management, manual multi-GPU (custom, non-AFR multi-GPU implementations), the underlying tech required to enable async compute, and the various performance-enhancement tricks the driver vendors implement to make their brand of hardware faster. These in turn can mean uncertainties for the developer in cases where the driver overrides certain techniques, just to squeeze out a little bit of extra performance.
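The AFR arrangement described above can be sketched in a few lines of Python. This is a toy illustration only, not how any driver or engine implements it: the function names and the 8 GB memory figures are assumptions made up for the example. It shows the round-robin frame assignment, and why mirrored memory does not "stack".

```python
def afr_gpu_for_frame(frame_index: int, gpu_count: int) -> int:
    """Round-robin assignment: frame 0 -> GPU 0, frame 1 -> GPU 1, and so on."""
    return frame_index % gpu_count


def usable_memory_gb(per_gpu_memory_gb: list[float], mirrored: bool) -> float:
    """Mirrored (AFR): every GPU holds the same data, so the smallest card sets the limit.
    Stacked (the hypothetical ideal): the memory of all cards adds up into one pool."""
    return min(per_gpu_memory_gb) if mirrored else sum(per_gpu_memory_gb)


# Two hypothetical 8 GB cards rendering six frames.
schedule = [afr_gpu_for_frame(i, 2) for i in range(6)]
afr_memory = usable_memory_gb([8.0, 8.0], mirrored=True)
stacked_memory = usable_memory_gb([8.0, 8.0], mirrored=False)

print("GPU per frame:", schedule)
print("usable memory with AFR mirroring:", afr_memory, "GB")
print("usable memory if stacking worked:", stacked_memory, "GB")
```

With mirroring, the second card adds rendering throughput but no extra addressable memory, which is the gap between what consumers were promised and what AFR delivers.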

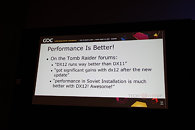

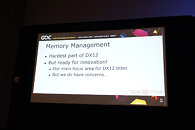

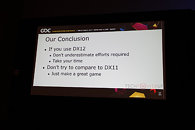

The developers revisit the question - is DirectX 12 worth it? Well, if you are willing to invest a lot of time into your DirectX 12 implementation, you could succeed, such as in the case of "Rise of the Tomb Raider," in which users noticed "significant gains" with the new API (which were not trivial to achieve). They also argue that it's possible to overcome CPU bottlenecks with or without DirectX 12, if that's relevant to your game or user-base. They also concede that async compute is the way forward, and that even if it doesn't bring console-rivaling performance gains, it can certainly benefit the PC. They're also happy to have gotten rid of AFR multi-GPU as governed by the graphics driver, which was unpredictable and offered little control (remember those pesky 400 MB driver updates just to get multi-GPU support?). The new API-governed AFR means more work for the developer, but also far greater control, which the speaker believes will benefit users. Another point he made is that a successful port to DirectX 12 lays a good foundation for porting to Vulkan (mostly for mobile), which uses nearly identical concepts, technologies and APIs as DX12.

Memory management is the hardest part of DirectX 12, but the developer community is ready to embrace the innovation (such as mega-textures, tiled-resources, etc). The speakers conclude by stating that DirectX 12 is hard; it can be worth the extra effort, but it may not be, either. Developers and consumers need to be realistic about what to expect from DirectX, and developers in particular need to focus on making good games, rather than spending their resources on becoming tech-demonstrators instead of on actual content.


72 Comments on Is DirectX 12 Worth the Trouble?

The core problem is that Direct3D 12 is in fact the least exciting major version in ages; it has no major changes, at least when compared to versions 8, 9, 10 and 11.

So let me address the common misconceptions one by one.

0 - "Low level API"

This is touted as the largest feature, and probably everyone has heard about it, yet most people don't have a clue what it means.

"Low level" is at best an exaggeration; "slightly lower level" or "slightly more explicit control" would be more accurate. Any programmer would get confused by calling this a low level API. What we are talking about here is slightly more explicit control over memory management, lower overhead operations and fewer state changes. All of this is good, but it's not what I would call a low level API; it's not like I have more features in the GPU code, more control over GPU scheduling or other means of direct control over the hardware. So it's not such a big deal really.

Lower overhead is always good, but in order for there to be a performance gain, there needs to be some overhead to remove in the first place. API overhead consists of two parts: overhead in the driver (will be addressed in (1)) and overhead in the game engine interfacing with the driver. Whether CPU overhead becomes a major factor depends on how the game engine works. A very efficient engine using the API efficiently will have low overhead, so upgrading it to Direct3D 12 (or Vulkan) will yield minimal gains. Any game engine which is struggling with CPU overhead is guaranteed to have much more overhead inside the game engine itself than in the API anyway, so the only solution is to rewrite it properly. A clear symptom of this misconception is all the people cheering for being able to use more API calls.
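The argument above can be put into a back-of-the-envelope model. The Python sketch below is only an illustration under simple assumptions: CPU and GPU work overlap perfectly, so the slower side sets the frame time, and all millisecond figures are made up rather than measured.

```python
def frame_time_ms(engine_cpu_ms: float, api_cpu_ms: float, gpu_ms: float) -> float:
    """Assume CPU and GPU work overlap, so the slower side sets the frame time."""
    return max(engine_cpu_ms + api_cpu_ms, gpu_ms)


def speedup_from_thinner_api(engine_cpu_ms, api_cpu_ms, gpu_ms, api_reduction):
    """Frame-rate ratio after cutting API/driver CPU cost by `api_reduction` (0..1)."""
    before = frame_time_ms(engine_cpu_ms, api_cpu_ms, gpu_ms)
    after = frame_time_ms(engine_cpu_ms, api_cpu_ms * (1.0 - api_reduction), gpu_ms)
    return before / after


# Hypothetical cases, each halving the API overhead:
gpu_bound = speedup_from_thinner_api(4.0, 2.0, 16.0, 0.5)          # GPU is the limit
cpu_bound_by_api = speedup_from_thinner_api(4.0, 12.0, 8.0, 0.5)   # API cost dominates
cpu_bound_by_engine = speedup_from_thinner_api(14.0, 2.0, 8.0, 0.5)  # engine cost dominates

print(f"GPU-bound:            {gpu_bound:.2f}x")
print(f"CPU-bound (API):      {cpu_bound_by_api:.2f}x")
print(f"CPU-bound (engine):   {cpu_bound_by_engine:.2f}x")
```

In this toy model only the case where API overhead itself dominates the CPU time sees a large gain; a GPU-bound game gains nothing, and an engine whose own code is the bottleneck gains very little.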

There are two ways to utilize a new API in a game engine:

- Create a completely separate and optimized render path.

- Create a wrapper to translate the new API into the old.

Guess which option "all" games so far are using? (The last one)

The whole point of a low level API is gone when you wrap it in an abstraction to make it behave like the old thing. Game engines have to be built from scratch specifically for the new API to have any gain at all from its "lower level" features. People have already forgotten disasters from the past like Crysis, a Direct3D 9 game with Direct3D 10 slapped on top. Why do you think it performed worse on a better API?

1 - AMD

There is also the misconception that AMD is "better" suited for Direct3D 12, which is caused by a couple factors. First of all, more games are designed for (AMD based) consoles, which means that a number of games will tilt a few percent extra in favor of AMD.

Do you remember I said that the API overhead improvements consisted of two parts? Well the largest one is in the driver itself. Nvidia chose to bring as much as possible to all APIs(1)(2), while AMD retained it for Direct3D 12 to show a larger relative gain. So Nvidia chose to give all games a small boost, while AMD wanted a PR advantage instead.

When it comes to scalability in unbiased games, they scale more or less the same.

2 - Multi-GPU

Since Direct3D 12 is able to send queues to GPUs of unmatched vendor and performance, many people and journalists assumed this meant multi-GPU scalability across everything. This is very far from the truth, as different GPUs will have to be limited to separate workloads, since transfer of data between GPUs is limited to a few MB per frame to minimize latency and stutter. This means you can offload a separate task like physics/particle simulations to a different GPU, and then just transfer a state back, but you can't split a frame in two and render two halves with a dynamic scene.

3 - Async compute

Async compute is also commonly misunderstood. The purpose of the feature is to utilize idle GPU resources for other workloads to improve efficiency. E.g. while rendering, you can also load some textures and encode some video, since this uses different GPU resources.

If you compare Fury X vs. 980 Ti you'll see that Nvidia beats AMD even though AMD has ~52% more theoretical performance. Yet, when applying async compute to some games, AMD gets a much higher relative gain. Fans always tout this as superior performance for AMD, but fail to realize that AMD has a GPU with >1/3 of the cores idling, which gives it greater potential to do other tasks simultaneously. So it's in fact the inefficiency of Fury which gives a greater potential here, not the brilliance. It's also important to note that in order to achieve such gains each game has to be fine-tuned to the GPUs, and optimizing a game for an inferior GPU architecture is always wrong. As AMD's architectures become better, we will see diminishing returns from this kind of optimization. Remember, the point of async compute is not to do similar tasks on inefficient GPUs, but to do different types of tasks.
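The "idle resources" argument can be illustrated with a toy model in Python. Everything here is an assumption for the sake of the example: the utilization figures and millisecond values are invented, and real GPUs do not share execution units this cleanly. The model only shows why a GPU that sits more idle during rendering has more to gain from overlapping compute work.

```python
def serial_time(graphics_ms: float, compute_ms: float) -> float:
    """Without async compute: the compute pass runs after the graphics pass."""
    return graphics_ms + compute_ms


def async_time(graphics_ms: float, compute_ms: float, graphics_utilization: float) -> float:
    """With async compute: compute fills whatever the graphics pass leaves idle.

    compute_ms is how long the compute work would take with the whole GPU to itself.
    graphics_utilization is the fraction of the GPU kept busy by graphics (0..1).
    """
    idle_capacity_ms = graphics_ms * (1.0 - graphics_utilization)
    leftover_ms = max(0.0, compute_ms - idle_capacity_ms)
    return graphics_ms + leftover_ms


def async_gain(graphics_ms, compute_ms, graphics_utilization):
    return serial_time(graphics_ms, compute_ms) / async_time(
        graphics_ms, compute_ms, graphics_utilization
    )


# Same workload on two hypothetical GPUs: one well utilized, one with ~1/3 idle.
well_utilized_gain = async_gain(10.0, 3.0, 0.95)
mostly_idle_gain = async_gain(10.0, 3.0, 0.65)

print(f"well-utilized GPU: {well_utilized_gain:.2f}x")
print(f"GPU with ~1/3 idle: {mostly_idle_gain:.2f}x")
```

Under these assumptions the poorly utilized GPU shows the larger relative gain, while both finish the same workload no faster than their graphics pass allows.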

Conclusion

So is Direct3D 12 worth the trouble?

If you're building it from scratch and not adding any abstractions or bloat then yes!

Or even better, try Vulkan instead.

-----

That didn't make any sense at all, please try again.

None of the new APIs make the drivers less relevant.

When people don't make that distinction, it's a sign that they don't know the difference, and when that's the case they clearly don't know anything about the subject at hand.

If only people understood this at least. See my (0).

Yes. If you have a game in the works already, just continue with the old API. If you are starting from scratch, then go with the latest exclusively.

Calling it "native" might be a stretch, but it's about adding what's called a wrapper or abstraction layer. To a large extent you can make two "similar" things behave the same, at a significant overhead cost. Any coder will understand how an abstraction works.

Let's say you're building a game and you want to target Direct3D 9 and 11, or Direct3D 11 and OpenGL, etc. You can then create completely separate render paths for each API (basically the whole renderer), or you can create an abstraction layer. In the latter case you build a "common API" that hides all the specifics of each API, and then create a single pipeline using the abstraction. Not only does this waste a lot of CPU cycles on pure overhead, you'll also get the "worst of every API". Because when you break down the rendering into generic API calls, these have to be compatible with the least efficient API, which means that the rendering will not translate into an optimal queue for each API. This will result in a "naive" queue of API calls, far from optimal.
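A minimal Python sketch of the difference between the two approaches follows. It is purely illustrative: the call names (`set_state`, `draw`), the draw list and both functions are hypothetical and do not correspond to any real graphics API. It assumes the generic layer must rebind state before every draw, while a dedicated path is free to group draws by state, which is only one of many possible optimizations.

```python
from itertools import groupby

# Each draw is (pipeline_state, mesh). All names are made up for illustration.
draws = [
    ("opaque", "rock"),
    ("glass", "window"),
    ("opaque", "tree"),
    ("glass", "bottle"),
    ("opaque", "house"),
]


def naive_wrapper_queue(draws):
    """Generic abstraction: rebind state before every draw, in submission order."""
    queue = []
    for state, mesh in draws:
        queue.append(("set_state", state))
        queue.append(("draw", mesh))
    return queue


def dedicated_path_queue(draws):
    """Dedicated render path: group draws by state and bind each state once."""
    queue = []
    by_state = sorted(draws, key=lambda d: d[0])  # stable sort keeps mesh order per state
    for state, group in groupby(by_state, key=lambda d: d[0]):
        queue.append(("set_state", state))
        queue.extend(("draw", mesh) for _, mesh in group)
    return queue


naive = naive_wrapper_queue(draws)
dedicated = dedicated_path_queue(draws)

print("naive wrapper calls: ", len(naive))
print("dedicated path calls:", len(dedicated))
```

Real renderers have ordering constraints (transparency, for one) that this toy ignores, but the shape of the problem is the same: the generic queue carries calls that a path written for one API could have avoided.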

Given the choice, is there any downside to supporting Vulkan along with DX12? I can't think of one, only advantages because of the bigger reach.

If you are an engine developer and you are implementing dx12 renderer, might as well do the vulkan renderer too while your head is already swimming in low level gpu arch specifics.

Slowly but surely vulkan render path is becoming as common in major engines as dx12 ... Epic for example, I believe you can use vulkan renderer directly in unreal editor now ... still experimental though

It could be bright future for vulkan ... (puts on shades) ... when it erupts ... YEAAAHH

The more developers look to drive performance, "expanding super playable game to larger audience" as RejZoR stated, the faster all such technology will show its true merits. Once a group really makes complete use of all the various technologies with this, the floodgates will have been opened and others will need to follow or flounder. Many games and gaming houses have stayed in a stagnant place, looking to maximize profits by touting visual fidelity while only delivering bullshit eye candy that eats resources, as their sponsoring Over-Lord wants them to in order to enrich their bottom line.

Essentially number of layers now with dx12/vulkan compared to before stayed the same ... only graphics api layer got much thinner and both latency and throughput optimized

So Epic and Dice are expected to do the hard part (close to the metal part), only they have been busy with dx12.

Yes, the number of layers has remained the same. The thing is, some of the things that the drivers were optimizing (per title) behind closed doors are now exposed to developers. When you put an engine on top of an API (doesn't matter which API), you have two options. The first is to act as a facade and present to the user a simplified view of the underlying API, sacrificing flexibility in the process. The second is to expose the maximum level of features, but then the end user gets to deal with all the flexibility plus your engine's API. I wouldn't want to be on the spot to have to choose the right approach :D

Stock Fury X beats stock 980 Ti at 1440p and higher from, what, release date?

And for the 1000th time, tflops are just one of the things cards do, not the only measure.

DX12 was the difference between playable and unplayable, at the settings i used at 4K.

... sigh

Graphics engine is so much more and exposes to users/devs highly tunable pre-made systems along with higher api on top of underlying api (engine libs calling underlying api methods).

What I'm saying is that game devs that use UE never need to think about vulkan api methods, I mean have you seen the vulkan api cheat sheet?

www.khronos.org/files/vulkan10-reference-guide.pdf

That's the gpu command pipeline level with fences, sync and cache control, etc. ... however you'd definitely need it if you're rolling your own engine

In the case of vulkan, your first approach would produce a vulkan graphics utility library, and the second approach would do the same and additionally produce a vulkan wrapper

On the AMD side, the story differs. The cheap RX 470 I got for my guest gaming PC sees massive gains in both games - especially in RotTR, where the game is very enjoyable at Ultra settings on DX12 - including a little AA, while being completely unplayable (for me) at the same setting in D3D 11 (stuttering, huge framerate spikes). Doom gets a comfy 60-70 fps @ 1080p / ultra in D3D 11, but does 90-120 fps in Vulkan (which puts it close to my GTX 1070 / D3D 11).

If devs ported last year's AAA titles to D3D 12 / Vulkan, you'd see massive increases in performance on older / lower spec systems, which is what most consumers want, and what game devs SHOULD want, because their game could reach a broader audience.

NOTE: the machine in question uses an old Phenom II X6 1090T overclocked to 3.6GHz with 4GHz boost - the old CPU might be the main reason for these huge performance increases. The motherboard is a modern MSI AM3+ AMD 970 chipset board and the machine uses 2133MHz CL11 DDR3 running at 1866 CL9.

^ This ^

In fact the Fury X can match and (rarely) outperform the 1070 in some games (it smashes the 1070 in Doom / Vulkan) but only with the latest drivers - try it with launch date drivers and it will disappoint. It's seen quite a bit of a performance increase since launch - BUT (and it's a fat one) - it's a very hungry card. At full load it will drink a lot of power compared to the 1070. Heat-wise both are about the same (my 1070 easily gets to 80 something C when boosting to 1911MHz) with the Fury X being slightly hotter. People have been kind of ignoring this card, but it can compete with the 1070 in most titles, especially newer DX12 / Vulkan games - good buy if your machine has a good PSU and if you can find it cheaper than the 1070.

www.pcgamer.com/deus-ex-mankind-divided-pc-patch-adds-directx-12-support/

This article gives a strong impression it's a wrapper, like D3D12 in The Division. Are Ashes of the Singularity and Sniper Elite 4 the only games right now that are D3D12 from the ground up?

you lost me at "and how they're yet to see the kind of console-rivaling performance" who wrote this? does he/she have anything to do with computers? PC's dont rival console performance.. they beat it to death without a sweat since ever....

A lot of you seem to have very strong opinions on development. I wonder if any of you actually are software engineers in your day job...

"game dev" means Game Developer aka entire studio. This includes everyone from tie wearing suites down to code monkeys. I don't care from who the order comes, it's dumb and retarded. And clearly from someone with a tie who doesn't even know what the F he's doing. Making game more accessible performance wise means you'll expand the potential sales to everyone, not just those with ridiculously high end systems. +PROFIT. But whatever, what do I know, I'm just a nobody...