Tuesday, August 29th 2017

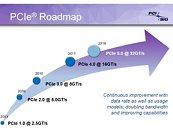

PCI-SIG: PCIe 4.0 in 2017, PCIe 5.0 in 2019

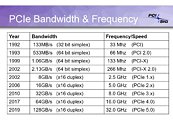

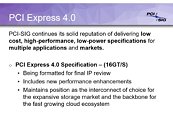

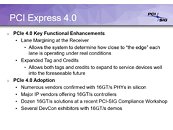

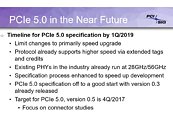

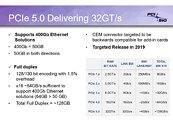

After years of steady gains in PCIe bandwidth, progress has hit something of a snag in recent times; after all, the PCIe 3.0 specification dates back to 2010. PCI-SIG, the 750-member organization in charge of designing the specifications for the PCIe bus, attributes part of this delay to industry stagnation: PCIe 3.0 has simply been more than enough, bandwidth-wise, for many generations of hardware. Only recently, with new storage and memory technologies such as NVMe SSDs and Intel's Optane, have we started to hit the ceiling of what PCIe 3.0 offers. Add to that the growing workloads and bandwidth requirements of the AI field, and the industry now seems eager for an upgrade, with some IP vendors having already put PCIe 4.0-capable controllers and PHYs into their next-generation products - although based on the incomplete 0.9 revision of the specification.

However, PCIe 4.0, with its 64 GB/s of bandwidth, double PCIe 3.0's comparatively paltry 32 GB/s (which is still more than sufficient for the average consumer), might be short-lived in our markets. PCI-SIG is setting its sights on 2019 as the year for finalizing the PCIe 5.0 specification; the consortium has accelerated its efforts on the 128 GB/s specification, which has already reached revision 0.3 and is expected to reach revision 0.5 by the end of 2017. Remember that a finalized specification doesn't immediately translate into products; AMD itself is only pegging PCIe 4.0 support for 2020, which makes sense, considering the company has declared that the AM4 platform will be supported until then. AMD is trading the latest and greatest for platform longevity - though should PCIe 5.0 indeed be finalized by 2019, it's possible the company could include it in its next-generation platform.

Intel, on the other hand, has a track record of adopting new technologies on its platforms much faster; whether Intel's yearly chipset releases and motherboard/processor incompatibility stem from this desire to support the latest and greatest, or serve as a way to sell more motherboards with each CPU generation, is a matter open for debate. However, considering Intel's advances with more exotic memory subsystems such as Optane, quicker adoption of new PCIe specifications is to be expected from the company.
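
For readers who want to check the headline bandwidth figures, here is a rough sketch of the arithmetic. It assumes the 32/64/128 GB/s numbers refer to an x16 link with both directions added together, and that PCIe 5.0 runs at 32 GT/s per lane as targeted; the function and constant names are ours, for illustration only.

```python
# Per-lane raw signalling rate in gigatransfers per second (GT/s).
# The 5.0 value is the announced target, not a finalized figure.
RAW_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0, "5.0": 32.0}

# PCIe 3.0 and later use 128b/130b line encoding:
# 128 payload bits are carried in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen, lanes=16, both_directions=True):
    """Approximate usable bandwidth of a PCIe link in GB/s."""
    # One transfer moves one bit per lane, so divide by 8 to get bytes.
    per_lane = RAW_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8
    total = per_lane * lanes
    return total * 2 if both_directions else total


for gen in RAW_RATE_GT_S:
    print(f"PCIe {gen} x16: ~{link_bandwidth_gb_s(gen):.1f} GB/s")
```

After encoding overhead the results land slightly below the rounded 32, 64 and 128 GB/s marketing figures, but the doubling with each generation holds exactly.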

Source: Tom's Hardware

31 Comments on PCI-SIG: PCIe 4.0 in 2017, PCIe 5.0 in 2019

Unless you mean that's TOO fast. I'm not sure we can ever have too fast of advancement.

The PCIe spec market is not in a huge rush. Staying with 3.0 until 2020 will be just fine. This way there will be more time for chip makers to aim for a finalized 5.0 spec much ahead

GPUs were only ever designed for the 8/16x slot anyway...

GPUs do not move the world. NICs do.

It's also why AMD came up with Infinity Fabric and has plans to use it in the server space, even faster.

Look at MIDI, AC measurement (weighted average vs. peak average) and any standard used in the automotive industry...adopting a new standard usually takes decades, not years, but in computer technology it sometimes only takes months.

I was just clarifying your point. We didn't need to go down a rabbit hole. Anyone with half their grey matter can figure out this is with high-end cards...