Monday, August 20th 2018

NVIDIA GeForce RTX Series Prices Up To 71% Higher Than Previous Gen

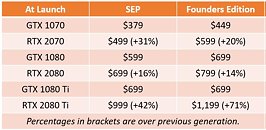

NVIDIA revealed the SEP prices of its GeForce RTX 20-series, and it's a bloodbath in the absence of competition from AMD. The SEP is the lowest price at which you'll be able to find a custom-design card. NVIDIA is pricing its reference design cards, dubbed "Founders Edition," at a premium of roughly 14-20 percent. These cards don't just have a better (looking) cooler, but also slightly higher clock speeds.

The GeForce RTX 2070 is where the lineup begins, for now. This card has an SEP of $499. Its Founders Edition variant is priced at $599, a staggering 20% premium. You'll recall that the previous-generation GTX 1070 launched at $379, with its Founders Edition at $449. The GeForce RTX 2080, the poster boy of this series, starts at $699, with its Founders Edition card at $799. The GTX 1080 launched at $599, with $699 for the Founders Edition. Leading the pack is the RTX 2080 Ti, launched at $999, with its Founders Edition variant at $1,199. The GTX 1080 Ti launched at $699, for the Founders Edition no less.
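The generational increases implied by these figures can be checked with a few lines of Python. This is only a sketch of the arithmetic: the prices are the ones quoted above, while the helper name `pct_increase` and the table layout are illustrative choices. The article gives a single GTX 1080 Ti price ($699, Founders Edition), so reusing it as that card's SEP is an assumption.

```python
def pct_increase(old, new):
    """Percentage by which `new` exceeds `old`."""
    return (new - old) / old * 100.0

# Launch prices in USD as quoted in the article: (name, SEP, Founders Edition).
# Assumption: the GTX 1080 Ti's SEP equals its quoted $699 Founders Edition price.
GENERATIONS = [
    (("GTX 1070", 379, 449), ("RTX 2070", 499, 599)),
    (("GTX 1080", 599, 699), ("RTX 2080", 699, 799)),
    (("GTX 1080 Ti", 699, 699), ("RTX 2080 Ti", 999, 1199)),
]

for (old_name, old_sep, old_fe), (new_name, new_sep, new_fe) in GENERATIONS:
    print(
        f"{old_name} -> {new_name}: "
        f"SEP {pct_increase(old_sep, new_sep):+.1f}%, "
        f"FE {pct_increase(old_fe, new_fe):+.1f}%, "
        f"FE premium over SEP {pct_increase(new_sep, new_fe):.1f}%"
    )
```

The headline's 71% matches the Founders Edition comparison for the Ti cards, $699 to $1,199.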

225 Comments on NVIDIA GeForce RTX Series Prices Up To 71% Higher Than Previous Gen

That's too much lol, too much. Like you, I find the GTX 1080 Ti really appealing now. I'm gonna sit and wait for the real numbers on those new cards.

I just told you why it's so expensive. Yet you fail to understand the basics of chip production. They can't produce the 2080 Ti for the same price as the 1080 Ti - it's technically impossible. Unless you think they should sell at a loss? Because that's the only way they could do it. I mean, forget revenue. Even if they sold these cards at break-even prices, it would still be more expensive than 16nm Pascal.

If the 2080ti isn't a huge leap in 'all games' above Pascal's best, then the cost is not justified. The development and cost of a product are required to be reflected by its price. Arguments on wafer size are simplifications of a development failure if said new design does not have a huge perf increase over last gen.

Reviews will be very telling, one way or another.

Edit: put simply, if a company pisses 10 billion down the drain in research for an underperforming product, said company usually has a stock price implosion.

The maturity of the process affects yields. I gave you numbers based on best-case and realistic expectations for the defect rate. TLDR: worst case=1250$ per chip. Realistic=641$ per chip.
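For anyone who wants to play with this kind of estimate, here is a rough sketch of how cost per good die is commonly approximated. It does not reproduce the numbers above, since the inputs behind them are not given in this thread. Everything here is an assumption: the standard dies-per-wafer approximation, a simple Poisson yield model, a 300 mm wafer, the publicly listed ~754 mm² TU102 die area, the 25000$ wafer cost quoted later in this thread, and purely hypothetical defect densities.

```python
import math

def gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2):
    """Common approximation: wafer area / die area, minus an edge-loss term."""
    radius = wafer_diameter_mm / 2
    whole = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(whole - edge_loss)

def poisson_yield(die_area_mm2, defects_per_cm2):
    """Poisson model: fraction of dies that have zero defects."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)

def cost_per_good_die(wafer_cost, wafer_diameter_mm, die_area_mm2, defects_per_cm2):
    """Wafer cost spread over the expected number of defect-free dies."""
    good = gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2) * poisson_yield(
        die_area_mm2, defects_per_cm2
    )
    return wafer_cost / good

# All inputs below are assumptions for illustration, not confirmed figures.
WAFER_COST = 25000       # USD, figure quoted in this thread
WAFER_DIAMETER_MM = 300  # standard wafer size
DIE_AREA_MM2 = 754       # publicly listed TU102 die area

for d0 in (0.05, 0.10, 0.20):  # hypothetical defect densities per cm^2
    cost = cost_per_good_die(WAFER_COST, WAFER_DIAMETER_MM, DIE_AREA_MM2, d0)
    print(f"defect density {d0:.2f}/cm^2 -> about {cost:.0f} USD per good die")
```

The model ignores partially defective dies that can be sold as cut-down parts, as well as packaging, memory, and board costs, so it only shows how strongly a large die's cost reacts to the defect rate.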

The way you talk, you expect the 12nm process to have a 0.00% defect rate because it is "old". All process nodes, no matter how old or new, will have some amount of defects. That's unavoidable. You not only expect Nvidia to sell their products at a loss but you also expect them to defy the laws of physics.

Fine, I get that you think it's overpriced. I told you there are reasons besides greed but you keep playing the same record over and over.

So let me ask you. What price for these cards would be reasonable for you?

I get they are pushing a new direction and I applaud that but it looks like a cash grab to me, and a lot of other observers, including web reviewers.

@W1zzard is the one that will tell us, assuming he gets a 2080ti.

And please, understand, my purchase history is GTX Titan, GTX 780ti (X2), Kingpin GTX 980ti, GTX 1080ti. So, not a hater, very much a supporter of the fastest consumer gfx cards.

Sure, Nvidia has margins. Especially on FE models. There's little doubt that the FE cooler does not cost an extra 200$, as in the case of the 2080Ti FE vs SEP MSRP. But some people seem to believe Nvidia's margins are 50% or more on each 2080Ti card. That's just funny.

I'm really interested in seeing the performance of these cards in RT and standard titles, I hope the 2060 is more powerful than a 1080 Ti or Titan Xp.

63.3% Q2 2018/FY 2019

64.5% Q1 2018/FY 2019

641$ was explained earlier. It is a reasonable estimate based on available data for TU102 cost. Asking for TU102 in the 750-800$ range means you're asking Nvidia to sell it with 0 margin. The only way they would ever do that is if AMD was breathing down their necks. Which is not the case, unfortunately.

Would I like cheaper high-end cards? Sure. Who would not? But I also understand the cost of the chips themselves and that there is no competition.

Pricing will cause that, and it shouldn’t come to having to do that. Once you get to a tier you like....stay there. If you keep slipping down, it will be prohibitively more expensive to buy the tier you were always used to, whatever that is.

The conclusion is that Nvidia is trying to rip us off, and the price increase is huge and, in my opinion, not justified. As for wafer price, I found that you overestimated the cost by at least 10000$; 20000$ per wafer was a year ago, and now it's probably a lot less. So the RTX 2080 Ti chip costs maybe 350$. Anyway, those are the assumptions. And the price hike is way too much to be justified. Just look at Nvidia's financial results. They realise that they have no real competition in high-end cards, and that's why they are ripping us off. I think they could easily sell the RTX 2080 Ti for 800$, which would be 100$ more than the GTX 1080 Ti, and still make a lot of profit. But the point is that they are being greedy.

What is really concerning, in my opinion, is your mindset, trying to justify that incredibly big price increase. If we follow your thinking, then soon 500$ will buy low-end graphics, and we should be OK with that? A price increase bigger than the performance gain, when it should be the other way around? It's insane, isn't it? No real performance numbers during the conference? Isn't that sketchy as fuck? That's what happens when there is no competition. Soon Nvidia could have 100% margin, and everybody should be happy and give them as much as they want? So let's raise taxes, why not, our government can do that; let's give the government 80% of our earnings, and we should be happy about that. What is most concerning is justifying the greedy company and trying every possible way to justify that price. Why not? The next generation could cost 2000$, and then, yeah sure, new technology, so we should gladly pay more? That defies common logic and the price-performance ratio. That's the consequence of no competition and a company that is taking advantage of that.

Imagine a situation where there is only one high-speed internet provider, and after three years he wants 72% more for a high-speed connection, just because he doesn't have competition. Even the Xbox One X can play some games at 4K 30 FPS, while the RTX 2070, which will cost over 500$, the price of a whole console, probably couldn't handle 60 FPS. Where is the logic in that? And that's the price of the GPU only. So every year I should go a tier down? Where is the progress in that? What about the price-to-performance increase, like we had every generation? It defies logic.

This is not what Jensen wanted you to think about now (with that full month of preorder time before the first real reviews - that is the true innovation here :D)... think about all the GigaRays and RTX-OPS and preorder now, for just "499" (that actually might be 1299 for the card he demoed)... think about the technology and 10 years of development, think about die size (size DOES matter!), think about Jensen's enthusiasm and press that preorder. Don't be a downer and don't think about those +20% real-world performance gains and over +50% price gains (in 2.5 years' time vs previous-gen Pascal) - that kind of negativity is for losers, downers, amd fanboiz, you are not like that - buy now - think later. Don't think about RayTrace as a performance hog, aka 30-50fps at 1920x1080 resolution on a 1299$ gpu :D, think about GIGA and Rays at the speed of light, think about those slideshow gameplays (with RTX ON) Jensen showed us - those "just works!". Most of you downers got this wrong, but some enlightened folks (enlightened by the giga Rays of pure bliss :D) here get Jensen's vision and the future and announced their preorder plans like champs *stands up and claps with tears in his eyes and then salutes the green flag* - you are da real MVPs - let the Jensen Rays be with you

This would contradict your claim that Nvidia is massively increasing margins. Surely they would not swallow half the wafer cost to keep RTX 8000 at 10000?

So if it's so expensive then simple logic dictates that the wafer must be more expensive. 25000$ is not an unreasonable cost even if they get only a handful of fully enabled TU102s from it.

In your dreams maybe. Here in the real world things are less rosy...

They could, but as we both know they have no incentive to do so.

Don't put words in my mouth. That is your interpretation. I'm justifying no one. I'm trying to see why it's so expensive instead of jumping to the most obvious and easy answer like most people.

Yeah, that was strange.

Do they also increase your speeds by 20-30% before asking that? Will the price per Mbit/s remain the same? Not the best comparison.

Consoles are running bare-metal APIs and games are perfectly optimized for those. Plus checkerboard 4K rendering, meaning not real 4K. That is why they can run what seems like 4K 30 on much slower hardware.