Saturday, January 26th 2019

Anthem VIP Demo Benchmarked on all GeForce RTX & Vega Cards

Yesterday, EA launched the VIP demo for their highly anticipated title "Anthem". The VIP demo is only accessible to Origin Access subscribers or people who preordered. For the first hours after the demo launch, many players were plagued by server crashes or "servers are full" messages. It looks like EA didn't anticipate the server load correctly, or the inrush of login attempts revealed a software bug that wasn't apparent under light load.

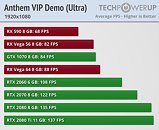

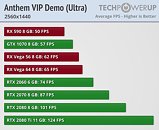

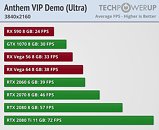

Things are running much better now, and we had time to run some Anthem benchmarks on a selection of graphics cards from AMD and NVIDIA. We realized too late that even the Anthem demo comes with a five-activation limit, which gets triggered on every graphics card change. That's why we could only test eight cards so far; we'll add more when the activations reset.

We benchmarked Anthem at Ultra settings in 1920x1080 (Full HD), 2560x1440 and 3840x2160 (4K). The drivers used were NVIDIA 417.71 WHQL and yesterday's AMD Radeon Adrenalin 19.1.2, which includes performance improvements for Anthem.

At 1080p, it looks like the game is running into a CPU bottleneck with our Core i7-8700K (note how close together the scores for the RTX 2080 and RTX 2080 Ti are). It's also interesting how AMD cards start out slower at lower resolutions but close the gap to their NVIDIA counterparts as resolution increases. Only at 4K does Vega 64 match the RTX 2060 (something that would be expected at 1080p, judging by results from recent GPU reviews).

We will add test results for more cards, such as the Radeon RX 570 and GeForce GTX 1060, after our activation limit is reset over the weekend.

134 Comments on Anthem VIP Demo Benchmarked on all GeForce RTX & Vega Cards

Vega 56 beats 1070 at 1440p & 4K and we would probably have the very same situation with 1080 & 64 (had the former been tested), which is more or less exactly what you would expect with a Frostbite engine.

The issue ain't that Vega is underperforming, but rather that the RTX cards perform surprisingly well with Anthem. Kinda like what we had with the id Tech 6 engine games.

And that ain't really an issue: it's great news for RTX owners, so let's just be happy for them, as there aren't many titles that show a big performance leap over the GTX 10xx generation.

Cheers!

Was W1z streaming the game while he played?

Does W1z have other applications running at the same time as the game is played?

When was the last clean install this guy did?

What driver is being used?

Does W1z run a fairly well known review site or does he have a grand total of 60 followers on YouTube?

Gee I know whose reviews I will be looking at.

God, I hate kids so much. So, so much.

I used to think that people that bought Titans were stupid and ruining the industry. However, everyone has their reasons for buying them. When I figured that out, it became easy to see why people bought things and then accept that others are different.

It makes life much easier when you accept that others have different needs and view points.

Can we finally let this fanboi roast die now? The kid obviously has no clue how shit actually works and is just regurgitating garbage he saw on YouTube.

Your reading: Fail...

Back on topic, this game seems like it's going to be a beast and will require beefy hardware to run.

Frostbite isn't a new engine; this shouldn't still be happening.

So was Kepler a "workstation" GPU as well? Should we not have called the GeForce 680 a "gaming GPU"? Because GCN was a near 1:1 foe for Kepler.

GCN's problem is that it's old. It's not that "AMD makes workstation cards ONLY, STOP COMPARING THEM!!1!!"; AMD didn't have much in the way of funding, and all of it went into Ryzen, so GCN was left with table scraps. That is what got them into their current position. GCN was given more compute power because, at the time, compute was seemingly the wave of the future for gaming, and GCN's first technical competitor was Fermi, a very compute-focused design. And when the 580 and 590 came out, nobody was typing "THESE ARE NOT GAMING CARDS OMGZZZZ!!!1!!"

AMD has made, and continues to make, gaming cards. Nobody is claiming the RX 580 is a compute card; anybody who does is a fool. Vega was a bit of a mistake, we all know that, just like the Tesla Titan was a mistake. Navi is their first large adjustment to their main GCN architecture. While I don't think it will be anything near the VLIW5/4 > GCN switch, Navi will undoubtedly bring large efficiency gains, and I wouldn't be surprised if compute on consumer models was cut down to make the cards more competitive in the gaming segment.