Saturday, January 26th 2019

Anthem VIP Demo Benchmarked on all GeForce RTX & Vega Cards

Yesterday, EA launched the VIP demo for their highly anticipated title "Anthem". The VIP demo is only accessible to Origin Access subscribers or people who preordered. For the first few hours after the demo launched, many players were plagued by server crashes or "servers are full" messages. It looks like EA either didn't anticipate the server load correctly, or the rush of login attempts revealed a software bug that wasn't apparent under light load.

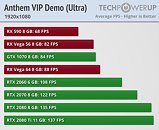

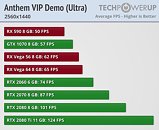

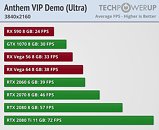

Things are running much better now, and we had time to run some Anthem benchmarks on a selection of graphics cards from AMD and NVIDIA. We realized too late that even the Anthem demo comes with a five-activation limit, which gets triggered on every graphics card change. That's why we could only test eight cards so far; we'll add more when the activations reset.

We benchmarked Anthem at Ultra settings in 1920x1080 (Full HD), 2560x1440 and 3840x2160 (4K). The drivers used were NVIDIA 417.71 WHQL and yesterday's AMD Radeon Adrenalin 19.1.2, which includes performance improvements for Anthem.

At 1080p, it looks like the game is running into a CPU bottleneck with our Core i7-8700K (note how close together the scores for the RTX 2080 and RTX 2080 Ti are). It's also interesting how AMD cards start out slower at lower resolutions, but close the gap to their NVIDIA counterparts as resolution increases. Only at 4K does Vega 64 match the RTX 2060, a result you would expect at 1080p when looking at recent GPU reviews.
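The CPU-bottleneck reasoning above boils down to comparing how far apart two cards of different tiers are at each resolution: if the RTX 2080 Ti barely leads the RTX 2080 at 1080p but pulls clearly ahead at 4K, the 1080p result is probably capped by the CPU rather than the GPU. Below is a minimal sketch of that heuristic, assuming you read the FPS values off the charts yourself. The function names and the 5% "very close" threshold are hypothetical choices for illustration, not part of our test methodology.

```python
from typing import Dict, Tuple


def gpu_scaling_gap(fast_fps: float, slow_fps: float) -> float:
    """Fraction by which the faster card leads the slower one (0.25 means 25% faster)."""
    if fast_fps <= 0 or slow_fps <= 0:
        raise ValueError("FPS values must be positive")
    return fast_fps / slow_fps - 1.0


def likely_cpu_bound(
    results: Dict[str, Tuple[float, float]],
    low_res: str,
    high_res: str,
    threshold: float = 0.05,  # hypothetical cutoff: under 5% apart counts as "very close"
) -> bool:
    """Heuristic check for a CPU bottleneck at low_res.

    results maps a resolution label to (faster_gpu_fps, slower_gpu_fps).
    If the two GPUs are nearly tied at low_res but clearly separated at
    high_res, the low-resolution result is probably CPU-limited.
    """
    low_gap = gpu_scaling_gap(*results[low_res])
    high_gap = gpu_scaling_gap(*results[high_res])
    return low_gap < threshold <= high_gap
```

This is only a rule of thumb: a small gap at low resolution can also come from driver overhead or an engine-side frame cap, so it points at a bottleneck rather than proving one.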

We will add test results for more cards, such as the Radeon RX 570 and GeForce GTX 1060, after our activation limit is reset over the weekend.

134 Comments on Anthem VIP Demo Benchmarked on all GeForce RTX & Vega Cards

@TheGuruStud is correct. Devs need to fix their shit. BFV is a very unique Frostbite example because both AMD and Nvidia have extensively been in-house to optimize it (read: help the blundering DICE devs out). But they really shouldn't have to; the GPU hardware isn't magically changing or anything.

Yes, yes he really does. 100% agreed. But I do not doubt his accuracy, regardless.

I'm not seeing the CPU overhead here. Vega is right about where it should be across all resolutions: 56 under a 1070, and 64 just over it. Can it improve a few %? Sure, it probably can. The REAL outlier here is in fact the GTX 1070 at 1440p. To me this looks a lot like lacking optimization on the developer side, not so much on Nv/AMD's side. Pascal's performance is abysmal compared to Turing's, for example. No blanket statement about either camp would be accurate.

Even the quote you linked to says AMD has worked with DICE for Battlefield, not for Frostbite as an engine. Engines are not biased. They are objective, neutral; it's code. What does happen is that specific architectures are better suited to work with certain engines. That is precisely the other way around. Engines are not built for GPU architectures (at least, not in our generic gaming space anymore); engines are iterative, and last many generations of GPUs. And in between engine and GPU, there is one vital part you've forgotten about: an abstraction layer, or an API like DX11/DX12/Vulkan. That is part of the reason why engines can be iterative. The API does the translation work, to put it simply.

Look at NetImmerse/Gamebryo/Creation Engine, nearly two decades' worth of games built on it. Ubisoft's Anvil is another example, used in games for the past ten years across multiple consoles and platforms.

Look at the Vega performance for Anthem compared to Battlefield. Literally nothing aligns so far with the idea that Frostbite is 'biased towards AMD'. If anything, that just applies to Battlefield, which makes sense, but that is mostly attributable not even to the engine, but to the API. AMD worked with DICE to implement Mantle, and many bits are similar between it and DX12/Vulkan.

Oh, one final note: Frostbite games were released on the PS3 (Nvidia GPU) long before AMD came to visit DICE.

nl.wikipedia.org/wiki/Frostbite_(engine)

Why did anyone expect GPUs to suddenly be able to comfortably push 4x as many pixels as they have for the last ten years? If you want to early adopt, don't whine about all the bad things that come with it. You're in a niche, deal with it. Not so sure why, in your view, 'asshats are still' using 1080p monitors; it's a pretty solid sweet-spot resolution for a normal viewing distance and gaming. It is without any shadow of a doubt the most optimal use of your GPU horsepower given the PPI of typical monitors. In that sense 4K is horribly wasteful: you render shitloads of pixels you'll never notice.

Correct. I wasn't going to go into that, but it's true, and on top of that, there IS an issue with AMD's DX11 drivers having higher overhead, like @cucker tarlson pointed out. I'm just not so sure that Anthem suffers from that issue specifically. On UE4 you're already seeing a somewhat different picture right about now.
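The "4x as many pixels" figure in the comment above checks out for the resolutions used in the article. A quick arithmetic sketch (the dictionary and function names are illustrative only):

```python
# The three resolutions tested in the article, as (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


def relative_load(base: str = "1080p") -> dict:
    """Pixels per frame at each resolution, relative to the base resolution."""
    base_px = pixel_count(*RESOLUTIONS[base])
    return {name: pixel_count(w, h) / base_px for name, (w, h) in RESOLUTIONS.items()}


if __name__ == "__main__":
    for name, ratio in relative_load().items():
        w, h = RESOLUTIONS[name]
        print(f"{name}: {pixel_count(w, h):,} pixels, {ratio:.2f}x 1080p")
```

Run as a script, this prints 2,073,600 pixels for 1080p, 3,686,400 (1.78x) for 1440p and 8,294,400 (4.00x) for 4K. Keep in mind this counts pixels only; actual frame rates do not necessarily scale linearly with pixel count, since some per-frame work does not depend on resolution.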

All BioWare titles this gen were better on Nvidia.

Turing is a very impressive architecture technically; Turing cards perform really well in both Pascal- and GCN-favored titles.

I don't think there will be any specific engine or game that performs significantly better on GCN versus Turing [relatively], which was the case for GCN vs. Pascal.

Hai neighbor (Cbus)!!