Tuesday, April 9th 2019

Intel Reveals the "What" and "Why" of CXL Interconnect, its Answer to NVLink

CXL, short for Compute Express Link, is an ambitious new interconnect technology for removable high-bandwidth devices, such as GPU-based compute accelerators, in a data-center environment. It is designed to overcome many of the technical limitations of PCI-Express, the least of which is bandwidth. Intel sensed that its upcoming family of scalable compute accelerators under the Xe brand needs a specialized interconnect, which Intel wants to push as the next industry standard. The development of CXL was also prompted by compute accelerator majors NVIDIA and AMD already having similar interconnects of their own, NVLink and InfinityFabric, respectively. At a dedicated event dubbed "Interconnect Day 2019," Intel put out a technical presentation that spelled out the nuts and bolts of CXL.

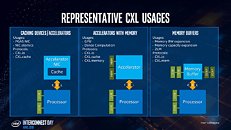

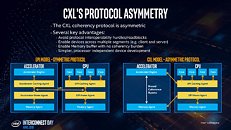

Intel began by describing why the industry needs CXL, and why PCI-Express (PCIe) doesn't suit its use-case. For a client-segment device, PCIe is perfect, since client-segment machines don't have many devices or very large memory, and their applications don't have a very large memory footprint or scale across multiple machines. PCIe falls short in the data-center, when dealing with multiple bandwidth-hungry devices and vast shared memory pools. Its biggest shortcomings are isolated memory pools for each device and inefficient access mechanisms. Resource-sharing is almost impossible. Sharing operands and data between multiple devices, such as two GPU accelerators working on a problem, is very inefficient. And lastly, there's latency, lots of it. Latency is the biggest enemy of shared memory pools that span multiple physical machines. CXL is designed to overcome many of these problems without discarding the best part about PCIe: the simplicity and adaptability of its physical layer.

CXL uses the PCIe physical layer, and has a raw on-paper bandwidth of 32 Gbps per lane, per direction, which aligns with the PCIe gen 5.0 standard. The link layer is where all the secret-sauce is. Intel worked on new handshake, auto-negotiation, and transaction protocols replacing those of PCIe, designed to overcome its shortcomings listed above. With PCIe gen 5.0 already standardized by the PCI-SIG, Intel could contribute CXL IP back to the SIG for PCIe gen 6.0. In other words, Intel admits that CXL may not outlive PCIe, and until the PCI-SIG can standardize gen 6.0 (around 2021-22, if not later), CXL is the need of the hour.

The CXL transaction layer consists of three multiplexed sub-protocols that run simultaneously on a single link. They are: CXL.io, CXL.cache, and CXL.memory. CXL.io deals with device discovery, link negotiation, interrupts, register access, etc., which are basically the tasks that get a machine to work with a device. CXL.cache deals with the device's access to a local processor's memory. CXL.memory deals with a processor's access to non-local memory (memory controlled by another processor or another machine).

Intel listed out use-cases for CXL, beginning with accelerators with memory, such as graphics cards, GPU compute accelerators, and high-density compute cards. All three CXL transaction layer protocols are relevant to such devices. Next up are FPGAs and NICs. CXL.io and CXL.cache are relevant here, since network-stacks are processed by processors local to the NIC. Lastly, there are the all-important memory buffers. You can imagine these devices as "NAS, but with DRAM sticks." Future data-centers will consist of vast memory pools shared between thousands of physical machines and accelerators. CXL.memory and CXL.cache are relevant here. Much of what makes the CXL link-layer faster than PCIe is its optimized stack, which reduces the processing load on the CPU. The CXL stack is built from the ground up with low latency as a design goal.
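To put the numbers and groupings above in one place, here is a minimal Python sketch. It assumes only the article's raw 32 Gbps per-lane figure and its own list of which sub-protocols matter for each device class; the names `PROTOCOLS_BY_DEVICE`, `raw_link_bandwidth_gbytes`, and `uses_protocol` are hypothetical and come from no CXL tooling. The bandwidth figure is raw and ignores encoding and protocol overhead, so real throughput would be lower.

```python
# Raw per-lane signalling rate quoted in the article (Gbps, per direction).
RAW_GBPS_PER_LANE = 32

# Sub-protocols per device class, mirroring the article's list
# (an illustration of the text, not a statement of the specification).
PROTOCOLS_BY_DEVICE = {
    "accelerator_with_memory": {"CXL.io", "CXL.cache", "CXL.memory"},
    "fpga_or_nic": {"CXL.io", "CXL.cache"},
    "memory_buffer": {"CXL.memory", "CXL.cache"},
}


def raw_link_bandwidth_gbytes(lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s, ignoring all overhead."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    # 8 bits per byte: Gbps -> GB/s.
    return lanes * RAW_GBPS_PER_LANE / 8


def uses_protocol(device_class: str, protocol: str) -> bool:
    """True if the article lists `protocol` as relevant to `device_class`."""
    return protocol in PROTOCOLS_BY_DEVICE[device_class]


if __name__ == "__main__":
    for lanes in (4, 8, 16):
        gbytes = raw_link_bandwidth_gbytes(lanes)
        print(f"x{lanes}: {gbytes:.0f} GB/s per direction (raw)")
```

Under these assumptions, a x16 link works out to 64 GB/s of raw bandwidth in each direction, the same on-paper figure as a PCIe gen 5.0 x16 slot, which is consistent with CXL reusing the PCIe physical layer.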

Source:

Serve the Home

37 Comments on Intel Reveals the "What" and "Why" of CXL Interconnect, its Answer to NVLink

Intel had an open source video driver for Linux long before AMD. Also: en.wikipedia.org/wiki/Thunderbolt_(interface)#Royalty_situation

AMD has to go the open route. They're the underdog, they can't sell closed solutions. If things changed, I'm pretty sure they'd reconsider their approach.

Got it.

Having said that, each use(r) case is different, so while some enterprises may need the extra lanes, they should have plenty to spare with PCIe 4.0, perhaps with the exception of (extreme) edge cases.

Some key points wrt competing solutions ~

www.openfabrics.org/images/eventpresos/2017presentations/213_CCIXGen-Z_BBenton.pdf

www.csm.ornl.gov/workshops/openshmem2017/presentations/Benton%20-%20OpenCAPI,%20Gen-Z,%20CCIX-%20Technology%20Overview,%20Trends,%20and%20Alignments.pdf

Until then, if Intel adds support in their CPUs for something, it will become a de facto standard.

And yes, building for profit works, provides money for future research and development.

Open solutions don't work by themselves, they are supported by the evil non-open products. Nobody likes to work for free, even the kids in their parents' bedrooms want money for new phones, movie tickets with their dates, gas money...

(160 lanes requires cutting CPU interconnects from 4 to 3.) While flexibility is nice, this is by far not optimal for compute-intensive setups. Naples suffered due to interconnect saturation with NVMe devices. While the bandwidth has doubled, the core count has as well. Time will tell if the RAM speed bump and I/O die bring enough of an improvement to offset the loss of an interconnect.

AMD also has a 4-GPU Infinity Fabric ring bus that takes the load off the CPU. Infinity Fabric is very similar to CCIX in being an alternative lower-latency protocol over PCIe.

Also, whoever mentioned no PCIe 4 boards being on the market is only half correct: no x86 boards, but PowerPC has had them for a while, and I think a few ARM boards.

2nd fun fact: PowerPC chips have NVLink interconnects on die, so rather than connecting to NVLink GPUs through a PCIe switch... they are part of the mesh.