Tuesday, August 13th 2019

110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

AMD this Monday in a blog post demystified the boosting algorithm and thermal management of its new Radeon RX 5700 series "Navi" graphics cards. These cards are beginning to be available in custom designs by AMD's board partners, but were only available as reference-design cards for over a month since their 7th July launch. The thermal management of these cards spooked many early adopters accustomed to seeing temperatures below 85 °C on competing NVIDIA graphics cards, with the Radeon RX 5700 XT posting GPU "hotspot" temperatures well above 100 °C, regularly hitting 110 °C, and sometimes even touching 113 °C with stress-testing applications such as Furmark. In its blog post, AMD stated that 110 °C hotspot temperatures under "typical gaming usage" are "expected and within spec."

AMD also elaborated on what constitutes "GPU Hotspot" aka "junction temperature." Apparently, the "Navi 10" GPU is peppered with an array of temperature sensors spread across the die at different physical locations. The maximum temperature reported by any of those sensors becomes the Hotspot. In that sense, Hotspot isn't a fixed location in the GPU. Legacy "GPU temperature" measurements on past generations of AMD GPUs relied on a thermal diode at a fixed location on the GPU die which AMD predicted would become the hottest under load. Over the generations, and starting with "Polaris" and "Vega," AMD leaned toward an approach of picking the hottest temperature value from a network of diodes spread across the GPU, and reporting it as the Hotspot.

On Hotspot, AMD writes: "Paired with this array of sensors is the ability to identify the 'hotspot' across the GPU die. Instead of setting a conservative, 'worst case' throttling temperature for the entire die, the Radeon RX 5700 series GPUs will continue to opportunistically and aggressively ramp clocks until any one of the many available sensors hits the 'hotspot' or 'Junction' temperature of 110 degrees Celsius. Operating at up to 110C Junction Temperature during typical gaming usage is expected and within spec. This enables the Radeon RX 5700 series GPUs to offer much higher performance and clocks out of the box, while maintaining acoustic and reliability targets."
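The hotspot idea described here can be sketched in a few lines of Python. This is only an illustration of "take the maximum across the sensor array and compare it against the junction limit", not AMD's firmware; the function names and the sample readings are hypothetical, and only the 110 °C limit comes from the blog post.

```python
from typing import Sequence

JUNCTION_LIMIT_C = 110.0  # junction limit quoted in AMD's blog post


def hotspot(sensor_temps_c: Sequence[float]) -> float:
    """Return the hottest reading across the die's sensor array."""
    if not sensor_temps_c:
        raise ValueError("need at least one sensor reading")
    return max(sensor_temps_c)


def may_raise_clocks(sensor_temps_c: Sequence[float],
                     limit_c: float = JUNCTION_LIMIT_C) -> bool:
    """Clocks may keep ramping only while no sensor has reached the limit."""
    return hotspot(sensor_temps_c) < limit_c


# Hypothetical readings: most of the die sits near 80 C, one spot is hotter.
readings = [78.0, 81.5, 79.0, 104.0]
print(hotspot(readings))           # 104.0
print(may_raise_clocks(readings))  # True
```

Because the hotspot is a maximum over all sensors, its physical location can move from one sample to the next, which is the contrast with the legacy single-diode reading.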

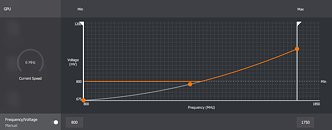

AMD also commented on the significantly increased granularity of clock-speeds that improves the GPU's power-management. The company transitioned from fixed DPM states to a highly fine-grained clock-speed management system that takes into account load, temperatures, and power to push out the highest possible clock-speeds for each component. "Starting with the AMD Radeon VII, and further optimized and refined with the Radeon RX 5700 series GPUs, AMD has implemented a much more granular 'fine grain DPM' mechanism vs. the fixed, discrete DPM states on previous Radeon RX GPUs. Instead of the small number of fixed DPM states, the Radeon RX 5700 series GPU have hundreds of Vf 'states' between the bookends of the idle clock and the theoretical 'Fmax' frequency defined for each GPU SKU. This more granular and responsive approach to managing GPU Vf states is further paired with a more sophisticated Adaptive Voltage Frequency Scaling (AVFS) architecture on the Radeon RX 5700 series GPUs," the blog post reads.
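To see why finer granularity helps, here is a rough Python sketch comparing a handful of fixed states with hundreds of states between the same bookends. Every number in it (idle clock, Fmax, state counts, the sustainable clock) is a hypothetical placeholder rather than an AMD specification, and it models frequency only, whereas real Vf states pair a voltage with each frequency.

```python
def build_states(idle_mhz: float, fmax_mhz: float, count: int) -> list[float]:
    """Evenly spaced frequency states between the idle clock and Fmax."""
    step = (fmax_mhz - idle_mhz) / (count - 1)
    return [idle_mhz + i * step for i in range(count)]


def highest_state_within(states: list[float], sustainable_mhz: float) -> float:
    """Pick the fastest state that does not exceed what the chip can sustain."""
    candidates = [s for s in states if s <= sustainable_mhz]
    return max(candidates) if candidates else states[0]


IDLE_MHZ, FMAX_MHZ = 300, 2100  # hypothetical bookends
SUSTAINABLE_MHZ = 1853          # hypothetical clock the power/thermal budget allows

coarse = highest_state_within(build_states(IDLE_MHZ, FMAX_MHZ, 7), SUSTAINABLE_MHZ)
fine = highest_state_within(build_states(IDLE_MHZ, FMAX_MHZ, 361), SUSTAINABLE_MHZ)

print(coarse)  # 1800.0 -> 53 MHz left unused with 7 fixed states
print(fine)    # 1850.0 -> 3 MHz left unused with 361 states
```

With only a few states the governor has to round down to the nearest one, so the coarser the table, the more clock speed is left unused.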

Source: AMD

159 Comments on 110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

It's clear that AMD maxed these chips out completely, just like the Ryzen chips, to look good in reviews, but there's no OC headroom as a result. That is why custom versions perform pretty much identically to reference and overclock by only 1.5% on average.

Your example with "it makes everything hotter" is moot here, as we are talking about only 1 out of 64 sensors reporting that temp.

Overall temp of the chip in TPU's tests of the ref card was 79C, +4 degrees if OCed.

Nowhere near 110.

Only 6 degrees higher than 2070s (blower ref vs aib-ish ref).

I don't see it that way. No matter who, what and where, for the first couple of months (or longer) there are shortages and price gouging, regardless of when AIBs come.

There is nothing to fix, besides people's perception.

We are talking about 79C temp overall, with one out of a gazillion "spot" sensors reporting a particular temp.

We have no idea what those temps would be in NV's case, but likely also over 100.

According to GlobalFoundries:

www.globalfoundries.com/sites/default/files/product-briefs/product-brief-14lpp-14nm-finfet-technology.pdf

Standard temperature range: -40°C to 125°C

AMD set it to 110°C. So the temperature of any processing unit in any layer must not exceed 110°C, and you guys, like kids, scream as if the house is on fire?

What's the junction temp for Turing cards? I bet Nvidia doesn't want to reveal it.

In my case it's because I don't buy 'high end' hardware. I'm more of a budget to mid-range user, so I never really considered the 1080 and cards around that range when they were new/expensive.

Pretty much always use my cards for 2-3 years before upgrading and this 5700 will be my biggest/most expensive upgrade yet and it will be used for 3 years at least.

I don't mind playing at 45-50 fps and dropping settings to ~medium when needed, so my cards easily last that long. I probably wouldn't even bother upgrading from my RX 570 yet if I still had my 1920x1080 monitor, but this 2560x1080 res is more GPU heavy and some new games are kinda pushing it already.

If Borderlands 3 runs alright on the 570 I might even delay that purchase, since it will be my main game for a good few months at least.

Plus, the problem is that I don't want to buy a card with 6GB VRAM, because I prefer to keep the texture setting at ~high at least, and with 3-4 years in mind that's gonna be a problem (I already ran into this issue with my previous cards).

Atm all of the 8GB Nvidia cards (2060S) are out of my budget, and I'm not a fan of used cards, especially when I plan to keep one for long (having no warranty is a dealbreaker for me).

Dual-fan 2060S models start around ~$500 here with tax included, and the blower 5700 non-XT is ~410, so even the aftermarket models will be cheaper, and that's the max I'm willing to spend.

My cards were like this, at least as far as I can remember:

AMD 'ATi' 9600 Pro, 6600 GT, 7800 GT, 8800GT (died after 2.5 years), GTS 450 (which was my warranty replacement), GTX 560 Ti (died after 1 year, had no warranty on it...), AMD 7770, GTX 950 Xtreme and now the RX 570.

That 950 is still running fine for the friend who bought it from me; it's almost 4 years old now.

My bro had more AMD cards than me now that I think of it. He even had a 7950 Crossfire system for a while, and that ran 'hot'. :D

If I recall correctly, his only dead card was an 8800GTX; all of his AMD cards survived somehow.

Gotta stop acting like AMD is doing something radically new. It's clear as day: the GPU has no headroom, it constantly pushes itself to the max temp limit, and while doing so, heat at the memory ICs gets to max 'specced' as well. So what if the die is cooler - it still won't provide any headroom to push the chip further. The comparisons with Nvidia therefore fall flat completely as well, because Nvidia DOES have that headroom - and does not suffer from the same heat levels elsewhere on the board.

It's not my problem you cannot connect those dots, and you can believe whatever you like to believe... to which the follow-up question is: did you buy one yet? After all, they're fine and AIB cards don't clock higher, so you might as well... GPU history is full of shitty products, and this could well be another one (on ref cooling).

AMD has always been the most transparent about what their products are doing under the hood, but by the same token this drives away people who don't know what to do with this information. It's a shame.

I know a guy who still games on his R9 290, with 390X bios.

I run a Fury X, and it was one of the first 99 boards made. It's running a modified bios that lifts the power limit, undervolts, tightens the HBM timings, and performs far better than stock.

The Fury series, like the Vegas, needs water cooling to perform at its best. Vega64/V2/56 on air is just disappointing because they are loud and/or throttle everywhere.

I have had a few GPUs that were bit by the NV soldergate...

Toshiba lappy 7600 GT, replaced and increased clamp pressure mods they directed to use.

Thinkpad T61 and its Quadro NVS140M: Lenovo made Nvidia remake the GPU with proper solder. I hunted one down and acquired it myself.

But ATI/AMD aren't exempt...

My most notorious death card was a Powercolor 9600XT... that card died within 2-3 weeks every time, and I had to RMA it 3 times. I still refuse to use anything from TUL/Powercolor because of the horrible RMA process, horrible customer service, and their insistence on using slow UPS. So I got nailed with a $100 brokerage bill every time. I sold it cheap after the last RMA, and the guy messaged me angry a month later that it had died on him.

My uncle got 2 years out of a 2900 XT... It was BBA... lol

Now, enter Navi: if you don't adjust the fan profile, the card will simply continuously bump into the red zone, right up to max spec. There is no safeguard to kick it down a notch consistently. Like a mad donkey it will bump its head into that same rock every time, all the time.

The way both boost mechanics work is quite different, still, and while AMD finally managed to get a form of boost going that can utilize the headroom available, it does rely on cooling far more so than GPU Boost does - and what's more, it also won't boost higher if you give it temperature headroom. Bottom line, they've still got a very 'rigid' way of boosting versus a highly flexible one.

If you had to capture it in one sentence: Nvidia's boost wants to stay as far away from the throttle point as it can to do best, while AMD's boost doesn't care how hot it gets to maximize performance as long as it doesn't melt.

I do not understand at all how you conclude that their algorithm must be worse because it does not make frequent adjustments like Nvidia's. If anything, this is proof their hardware is more balanced and no large adjustments are needed to keep the GPU at its desired operating point.

Again, if their algorithm figures out it's a valid move to do that, this means an equilibrium has been reached. There is no need for any additional interventions. The only safeguards needed after that are for thermal shutdown and whatnot, and I am sure they work just fine, otherwise the cards would all burn away from the moment they are turned on.

Do not claim their cards do not have safeguards in this regard, it's simply untrue. You know better than this, come on.

You are simply wrong and I am starting to question whether or not you really understand how these things work.

They both seek to maximize performance while staying as far away from the throttle point as possible, but only if that's the right thing to do. If you go and look back at reference models of Pascal cards, they all immediately hit their temperature limit and stay there in just the same way the 5700XT does. Does that mean they didn't care how hot those got?

Of course the reason I brought up Pascal is because those have the same blower coolers. They don't use those anymore, but let's see what happens when Turing GPUs do have that kind of cooling:

What a surprise, they also hit their temperature limit. So much for Nvidia wanting to stay as far away from the throttle point, right?

This is not how these things are supposed to work. Their goal is not to just stay as far away from the throttle point; if you do that you're going to have a crappy boost algorithm. Their main concern is to maximize performance, even if that means you need to stay right at the throttling point.
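The point about a boost algorithm sitting "right at the throttling point" can be shown with a toy governor loop. This is a sketch under loud assumptions: the linear thermal model, the 25 MHz step and the 2100 MHz ceiling are all made up for illustration, there is no thermal inertia, and nothing here is AMD's or Nvidia's actual algorithm. Only the 110 °C limit comes from AMD's blog post.

```python
LIMIT_C = 110.0   # junction limit quoted in AMD's blog post
STEP_MHZ = 25     # hypothetical clock step
FMAX_MHZ = 2100   # hypothetical frequency ceiling


def temp_for_clock(clock_mhz: int) -> float:
    """Toy thermal model (assumption): temperature rises linearly with clock."""
    return 30.0 + clock_mhz / 25.0


def run_governor(start_mhz: int, iterations: int) -> list[tuple[int, float]]:
    """Raise the clock while below the limit, back off one step once it is hit."""
    clock = start_mhz
    history = []
    for _ in range(iterations):
        temp = temp_for_clock(clock)
        history.append((clock, temp))
        if temp < LIMIT_C:
            clock = min(clock + STEP_MHZ, FMAX_MHZ)
        else:
            clock -= STEP_MHZ
    return history


history = run_governor(1500, 40)
print(history[-4:])
# [(2000, 110.0), (1975, 109.0), (2000, 110.0), (1975, 109.0)]
```

In this toy model the loop climbs from 1500 MHz and then hovers one step either side of the limit. That hovering is the equilibrium being argued about: the governor is maximizing clocks, not avoiding the limit.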

And the ref cooler is balanced out so that, in ideal (test bench) conditions, it can remain just within spec without burning itself up too quickly. I once again stress the Memory IC temps, which, once again, is easily glossed over but very relevant here wrt longevity. AIB versions then confirm the behaviour because all they really manage is a temp drop with no perf gain.

And ehm... about AMD not knowing what they're doing... we are in Q2 2019 and they finally managed to get their GPU Boost to 'just not quite as good as' Pascal. You'll excuse me if I lack confidence in their expertise with this. Ever since GCN they have been struggling with power state management. Please - we are WAY past giving AMD the benefit of the doubt when it comes to their GPU division. They've stacked mishap upon failure for years and resources are tight. PR, strategy, timing, time to market, technology... none of it was good, and even Navi is not a fully revamped arch. It's always 'in development', like an eternal Beta... and it shows.

Here is another graph to drive the point home that Nvidia's boost is far better.

NAVI:

Note the clock spread while the GPU keeps on pushing 1.2V. And not just at 1.2V but at each interval. It's a mess, and it underlines that voltage control is not as directly linked to GPU clock as you'd want.

There is also still an efficiency gap between Navi and Pascal/Turing, despite a node advantage. This is where part of that gap comes from.

Ask yourself this: where do you see an equilibrium here? This 'boost' runs straight into a heat wall and then panics all the way down to 1800 MHz, while losing out on a good way to drop temps: dropping volts. And note, this is an AIB card.

www.techpowerup.com/review/msi-radeon-rx-5700-xt-evoke/34.html

Turing:

You can draw up a nice curve to capture a trend here that relates voltage to clocks, all the way up to the throttle target (and never beyond it, under normal circumstances - GPU Boost literally keeps it away from the throttle point before engaging in actual throttling). At each and every interval, GPU Boost finds the optimal clock to settle at. No weird searching and no voltage overkill for the given clock at any given point in time. Result: lower temps, higher efficiency, maximized performance, and (OC) headroom if temps and voltages allow.

People frowned upon Pascal when it launched for 'minor changes' compared to Maxwell, but what they achieved there was pretty huge: it was Nvidia's XFR. Navi is not AMD's GPU XFR, and if it is, it's pretty shit compared to their CPU version.

And.. surprise for you apparently, but that graph you linked contains a 2080 in OC mode doing... 83C. 1C below throttle, settled at max achievable clockspeed WITHOUT throttling.

www.nvidia.com/en-us/geforce/forums/discover/236948/titan-x-pascal-max-temperatures/

So as you can see, Nvidia GPUs throttle 6C before they reach 'out of spec' - or permanent damage. In addition, they throttle such that they stop exceeding the throttle target from then onwards under a continuous load. Fun fact, Titan X is on a blower too... with a 250W TDP.

Long gone are the days when a new generation of video cards or processors offered big or even relatively impressive performance gains for the price of the hardware it's replacing. Nowadays it seems like all they do is give just enough of an upgrade to justify the existence of said products, at least in their (AMD/Intel/Nvidia) minds.

Sad times.

Do you by any chance own a leather jacket, and is your favorite color green?

Th3pwne3, I feel you. Those days are gone but I am sure that jump from 16nm to 7nm would bring much bigger differences than RDNA 1.0 showed. Ampere and/or RDNA 2.0 to the rescue in 2020. 28nm to 14/16nm brought impressive results, cards like 480 and 1060 for 250eur with 500eur 980 perf. Well, 280eur Turing 1660ti almost mimic that with 450eur 1070 perf, 16nm vs 12nm. FFS, 7nm Navi for 400eur only with 500eur ultra oced 2070 perf.

There is no point in discussing readouts as "Nvidia vs AMD". It's obvious and well known that Radeons generally run at a higher temperature level.

It's important to mention - or to repeat - what many others here have already pointed out: temperature is relative and therefore has to be evaluated accordingly. Meaning: as far as I know the VIIs are laid out to run at ~125°C (don't ask now which part of the card - answer: probably the ones mentioned above, as they are the ones generating the most heat).

So again, temperatures should be compared on devices with the same or derivative architectures. I mean, of course one can compare (almost) anything, but more as a basis for discussion and opinion. For example, the tires and wheels of a sports car get much hotter than those of a family sedan. Are high temperatures bad for the wheels? Yes and no. But for a sports car they're needed, no question (brakes, tyre grip...). So again it's relative/"a matter of perspective".

The last point goes with the one above: the discussion about the sensors/readout points. I want to point out that I don't have any qualified knowledge or education on this subject per se. But it's actually simple logic - the nearer you get with the sensors to where energy turns into heat, the higher the readout you will get. Simple enough, right? As mentioned above, if other cards have architectures, and sensors within them, that are related/derivative/similar, one could compare. But how can anybody compare readouts of different sensors at different "places"? In short: the higher the "delta" between the "near-core" temperatures and the package temperature, the better. With AMD's sensors/sensor placement and their readouts, users possess more detailed info about heat generation and travel.

So from what I've read in the posts before and according to what I've put together above, the VII (at least) has more accurate readouts.

And finally, our concern is the Package Temp - the rest, one should check every now and then to have a healthy card.

And finally, about buying a GPU and wanting to keep it... 3-4, even 7 years, some have written.

BS - if we're talking about high-end cards here, it's very simple: for 90% of us the life span is 1-2 years, for 5% it's 0.5-1 year, and for 5% it's 3 years max. Any GPU you buy now (except for the Titans & Co., maybe) will in 3 years be good enough for your 8-9 year old brother to play Minecraft... that's a fact. As for the ones complaining/worrying and making romantic comments like wanting to keep them 7 years and so on, my answer is: then keep them. Have fun. And keep yourself busy complaining and being toxic about the cards having become slower than when you purchased them. (*headshake* - people who write crap like that, and sadly think like that, need and always will need something to complain about and fight about. It's not an issue of knowledge or even opinion. It's an issue of character...)

Finally, I bought a Strix 1080Ti in Q1 last year (before that: 2x Saphire RX 550+ nitro+ SE in Crossfire), and 2 days later went to buy the VII. Why? I've got the LG 43" monitor centered and 2 AOC monitors left and right in portrait orientation. And I realized... AMD's multi-monitor software is better than Nvidia's, because Nvidia's doesn't support this setup. So because of AMD's "Radeon Adrenalin" app it was a simple decision, and to this day I've not regretted it nor had any issues with it.

People seem to have a really hard time understanding that the closer to the transistors you put a temperature sensor, the hotter it's going to read. If you could measure the temperature inside a transistor gate, I'm sure it would be even higher. This is why temperature limits for the junction are different from the limits for the edge.

If temperatures are within spec and it maintains them, who cares? It literally means that this is the range that AMD certifies their hardware good at. I'd be more concerned if it was regularly exceeding the spec.