Tuesday, August 13th 2019

110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

AMD this Monday in a blog post demystified the boosting algorithm and thermal management of its new Radeon RX 5700 series "Navi" graphics cards. These cards are beginning to be available in custom designs from AMD's board partners, but for over a month after their 7 July launch they were available only as reference-design cards. The thermal management of these cards spooked many early adopters accustomed to seeing temperatures below 85 °C on competing NVIDIA graphics cards: the Radeon RX 5700 XT posts GPU "hotspot" temperatures well above 100 °C, regularly hitting 110 °C and sometimes even touching 113 °C with stress-testing applications such as FurMark. In its blog post, AMD stated that 110 °C hotspot temperatures under "typical gaming usage" are "expected and within spec."

AMD also elaborated on what constitutes "GPU Hotspot" aka "junction temperature." Apparently, the "Navi 10" GPU is peppered with an array of temperature sensors spread across the die at different physical locations. The maximum temperature reported by any of those sensors becomes the Hotspot. In that sense, Hotspot isn't a fixed location in the GPU. Legacy "GPU temperature" measurements on past generations of AMD GPUs relied on a thermal diode at a fixed location on the GPU die which AMD predicted would become the hottest under load. Over the generations, and starting with "Polaris" and "Vega," AMD leaned toward an approach of picking the hottest temperature value from a network of diodes spread across the GPU, and reporting it as the Hotspot.

On Hotspot, AMD writes: "Paired with this array of sensors is the ability to identify the 'hotspot' across the GPU die. Instead of setting a conservative, 'worst case' throttling temperature for the entire die, the Radeon RX 5700 series GPUs will continue to opportunistically and aggressively ramp clocks until any one of the many available sensors hits the 'hotspot' or 'Junction' temperature of 110 degrees Celsius. Operating at up to 110C Junction Temperature during typical gaming usage is expected and within spec. This enables the Radeon RX 5700 series GPUs to offer much higher performance and clocks out of the box, while maintaining acoustic and reliability targets."
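
To make the difference between "Hotspot" and the legacy single-diode reading concrete, here is a toy Python sketch. It is not AMD's firmware: the function names (`hotspot`, `legacy_temperature`, `next_clock`), the 7 MHz step, the 800 to 2100 MHz clock range and the per-sensor heating coefficients are all hypothetical. Only the 110 °C limit and the "ramp until any one sensor hits it" rule come from AMD's description.

```python
from typing import Sequence

# Junction ("hotspot") limit quoted in AMD's blog post.
JUNCTION_LIMIT_C = 110.0


def hotspot(readings: Sequence[float]) -> float:
    """Hotspot = hottest reading from any sensor, wherever it happens to be on the die."""
    if not readings:
        raise ValueError("need at least one sensor reading")
    return max(readings)


def legacy_temperature(readings: Sequence[float], diode_index: int) -> float:
    """Older approach: report only the diode at one fixed, pre-chosen location."""
    return readings[diode_index]


def next_clock(clock_mhz: float, readings: Sequence[float],
               step_mhz: float = 7.0, fmin_mhz: float = 800.0,
               fmax_mhz: float = 2100.0) -> float:
    """Toy controller (hypothetical): ramp until ANY sensor reaches the limit, then back off one step."""
    if hotspot(readings) >= JUNCTION_LIMIT_C:
        return max(fmin_mhz, clock_mhz - step_mhz)
    return min(fmax_mhz, clock_mhz + step_mhz)


# Hypothetical toy thermal model: each sensor reads ambient + coefficient * clock.
# The last sensor sits in the densest logic and heats fastest.
ambient_c = 25.0
coeffs = [0.040, 0.045, 0.052]  # °C per MHz, made-up values

clock = 1500.0
for _ in range(200):
    readings = [ambient_c + c * clock for c in coeffs]
    clock = next_clock(clock, readings)

final = [ambient_c + c * clock for c in coeffs]
print(f"clock {clock:.0f} MHz, hotspot {hotspot(final):.1f} °C, "
      f"fixed diode {legacy_temperature(final, 0):.1f} °C")
```

In this toy model the clock settles, oscillating by one step between 1633 and 1640 MHz, right where the hottest sensor crosses 110 °C, while the fixed diode at index 0 reads roughly 20 °C lower. That gap is why a Hotspot figure looks alarming next to a legacy-style "GPU temperature" even when both describe the same die.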

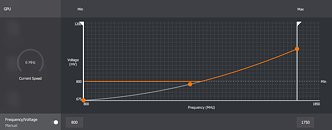

AMD also commented on the significantly increased granularity of clock speeds, which improves the GPU's power management. The company transitioned from fixed DPM states to a highly fine-grained clock-speed management system that takes into account load, temperatures, and power to push out the highest possible clock speeds for each component. "Starting with the AMD Radeon VII, and further optimized and refined with the Radeon RX 5700 series GPUs, AMD has implemented a much more granular 'fine grain DPM' mechanism vs. the fixed, discrete DPM states on previous Radeon RX GPUs. Instead of the small number of fixed DPM states, the Radeon RX 5700 series GPU have hundreds of Vf 'states' between the bookends of the idle clock and the theoretical 'Fmax' frequency defined for each GPU SKU. This more granular and responsive approach to managing GPU Vf states is further paired with a more sophisticated Adaptive Voltage Frequency Scaling (AVFS) architecture on the Radeon RX 5700 series GPUs," the blog post reads.
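
Why more states help can be sketched in a few lines of Python. This is a deliberately simplified illustration, not AMD's DPM logic: it assumes evenly spaced clock states, collapses "load, temperatures, and power" into a single hypothetical `allowed` clock, ignores the voltage side of each Vf pair and AVFS entirely, and uses made-up idle/Fmax bookends and state counts (8 vs. 300, the latter standing in for AMD's "hundreds").

```python
from typing import List


def vf_states(idle_mhz: float, fmax_mhz: float, count: int) -> List[float]:
    """Evenly spaced clock states from the idle clock up to Fmax, inclusive (a simplification)."""
    if count < 2:
        raise ValueError("need at least two states (idle and Fmax)")
    step = (fmax_mhz - idle_mhz) / (count - 1)
    return [idle_mhz + i * step for i in range(count)]


def pick_state(states: List[float], allowed_mhz: float) -> float:
    """Highest state that does not exceed what the current headroom allows."""
    eligible = [s for s in states if s <= allowed_mhz]
    return max(eligible) if eligible else min(states)


IDLE_MHZ, FMAX_MHZ = 800.0, 2100.0  # hypothetical bookends
allowed = 1903.0                    # hypothetical clock the headroom permits right now

coarse = pick_state(vf_states(IDLE_MHZ, FMAX_MHZ, 8), allowed)   # few fixed DPM states
fine = pick_state(vf_states(IDLE_MHZ, FMAX_MHZ, 300), allowed)   # "hundreds" of states

print(f"coarse: {coarse:.1f} MHz, fine: {fine:.1f} MHz")
```

With eight states, the nearest step below 1903 MHz is about 1729 MHz, leaving roughly 170 MHz of available headroom unused; with 300 states the pick lands within one step of about 4 MHz.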

Source:

AMD

159 Comments on 110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

The misguided idea that 'because a company engineered and released it' it must be okay has been proven numerous times to be just that - misguided. Never underestimate what the pressure of commercial targets and shareholders will mean for end users.

Haha, indeed lol. The AnandTech comment section still isn't pretty, btw.

Also, the most common points of failure are the solder joints or the capacitors of the VRMs.

The GPU and Memory ICs themselves are rarely the first to fail unless they have been overclocked heavily / subject to extremely high voltage.

A $50 motherboard combined with a high-end, 12-core, even overclocked CPU. It runs. And it will probably run for another year or so if the build quality is just right.

The reason it runs, which you see mentioned nowhere, is that AMD requires this of motherboard vendors. It doesn't want a repeat of the FX era, where certain boards throttled with a 125 W CPU.

Meanwhile, I get about 150-200 EUR back on every GPU upgrade, which allows me to buy into the same or a higher tier without 'spending more' than I did on the previous card. Every time. I've made about 1200 EUR on GPU sales for personal use. You enjoy your 3-year-old cards; to each his own. It's good these furnaces still have a market, I guess.

Do check out that GN review of the Sapphire though, it nicely underlines the point, even memory ICs get to boiling point which is definitely not where you want them. I vividly remember the EVGA GTX 1070 FTW - another one of those cards 'that was just fine' until EVGA deemed it necessary to supply thermal pads after all and revise their product line and shroud entirely.

Anyway, it's a non-issue, because it was already clear that you had to stay far away from the reference designs.

That's not how commerce works; that is how charity works. And not a single charity exists to solve problems, but rather to preserve them to cash in even more.

If AMD can't compete, we need a new player. I hear Intel is working on something. And if AMD GPU business falls flat (which it will eventually if they keep at it like this) someone will buy the IP and take over the helm. I'm not worried and I don't root for multinationals.

My $0.02:

Please find below a GPU-Z screenshot taken after Fire Strike Extreme Stress Test run on my reference (Sapphire) RX5700XT.

The card is set in Wattman to boost up to 1980 MHz at 1006 mV (with the GDDR6 mildly overclocked from 875 MHz to 900 MHz). It runs with these settings just fine. Performance is very satisfactory (I have a 2560x1440 144 Hz display), temps are in check, and fan noise is barely audible. Just so you know, I have a mATX case, and it does not have very good airflow.

I have no idea why RX5700XT runs by default at 1203mV. IMHO this is very high and is the culprit of reference cards running hot, loud and being power hungry. From what I've seen so far, all reference cards can be undervolted by a huge margin, which resolves all heat/noise/power consumption issues.

For the sake of comparison, reference RX5700 (non-XT) runs at 1025mV.

Food for thought.
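
A first-order way to see why undervolting helps so much is the standard CMOS dynamic-power approximation, where α is the switching activity factor, C the switched capacitance, V the core voltage and f the clock. Plugging in the two voltages quoted above and assuming the clock is held fixed (the commenter actually raised the boost target, so treat this as a rough illustration rather than a measurement):

$$
P_{\text{dyn}} \approx \alpha\, C\, V^{2} f
$$

$$
\left.\frac{P_{\text{dyn}}(1006\ \text{mV})}{P_{\text{dyn}}(1203\ \text{mV})}\right|_{f\ \text{fixed}} = \left(\frac{1006}{1203}\right)^{2} \approx 0.70
$$

That is roughly 30% less switching power at the same clock. Leakage power, which also falls with voltage and temperature, is ignored here, so this only illustrates the V² trend; it does not predict total board power.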

My RX 590 goes from 1150 mV down to 1110 mV. It can do 1090 mV, but at the cost of Radeon ReLive crashing. So I keep it at 1110 mV with a core clock of around 1450 MHz, which is still very good.

I'd rather take a shot at overclocking a card that runs great out of the box than undervolting one that needs it badly.

Dies do not have uniform thermals across their surface, and certain spots, such as where the FPUs sit, can indeed reach well over 100 °C. This has gotten worse over the years as the thermal density of chips keeps rising; whether a chip's TDP is 10 W or 1000 W, these hotspots will not go away. AMD being on 7 nm, again, makes this worse.

Let's spell it out in the simplest of terms so that everyone gets it:

You have two dies; each uses 100 W and each benefits from the same amount of cooling, but one of them is half the size. This inevitably means it will have higher thermal density and will run at higher temperatures; there is no getting around it. This of course is taken into account when these things are designed, but you can only minimize this effect so much.
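
The two-die argument can be put into numbers with a minimal Python sketch. It assumes a crude one-dimensional, steady-state conduction model through a uniform thermal interface layer (ΔT = q″·t/k), with power spread evenly over the die; the die areas, TIM thickness and conductivity are hypothetical values chosen only for illustration.

```python
def power_density_w_per_mm2(power_w: float, area_mm2: float) -> float:
    """Average heat flux through the die surface."""
    return power_w / area_mm2


def interface_delta_t(power_w: float, area_mm2: float,
                      thickness_mm: float, k_w_per_m_k: float) -> float:
    """Temperature drop across a uniform layer (1D steady-state conduction): dT = q'' * t / k."""
    heat_flux_w_per_m2 = power_w / (area_mm2 * 1e-6)   # mm^2 -> m^2
    return heat_flux_w_per_m2 * (thickness_mm * 1e-3) / k_w_per_m_k  # mm -> m


# Hypothetical numbers: same 100 W, same TIM layer (0.05 mm, k = 5 W/(m*K)), different die sizes.
big = interface_delta_t(100.0, 200.0, 0.05, 5.0)    # about 5 K across the TIM
small = interface_delta_t(100.0, 100.0, 0.05, 5.0)  # about 10 K: half the area, twice the drop

print(power_density_w_per_mm2(100.0, 200.0), power_density_w_per_mm2(100.0, 100.0))
print(f"{big:.1f} K vs {small:.1f} K")
```

Halving the area doubles the heat flux and therefore the temperature drop across the same interface. Because this model averages power over the whole die, it understates reality: power concentrates in dense logic such as the FPUs, so local hotspots sit above this average, which is the point being made about hotspots persisting regardless of TDP.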

Again, this is about thermal density, not TDP, cooling, or architecture, and you can't really do anything about it. Do not believe for a second that Nvidia, Intel or anyone else isn't dealing with this. This "hot and power hungry" meme should die; it has run its course, and now you just look like you don't have a damn clue what you're talking about.

If you go down one size on the memory thermal pads, from 1.5 mm to 1 mm, it closes the gap between the die and the cooler. It really does work.

AMD officially said they will max out all their chips instead of leaving some in the tank for the "few percent" who overclock. Looks like this holds true for Ryzen and the 5700 XT custom cards: 2-3% performance gained with a max OC. The Asus Strix gained 0.7%...

Not really... You can get a reference 5700 XT for 10 bucks less than a custom 2060 Super... You need hearing protection with the 5700 XT reference, though.

You get Control and Wolfenstein with every 2060 Super, and they can easily be sold.

The cheapest non-reference 5700 XT here is 2200 PLN for the Pulse; a 2060 Super is 1800 PLN for a Zotac/PNY/Gainward dual-fan card, plus two games worth 300 PLN in total.

Also, that Asus 3 fan 5700 XT comes within 10 fps of 2080 SUPER on a few games, Sekiro at 1440p being one. My gigabyte 3 fan should do the same, not bad for $420. :)