Tuesday, August 13th 2019

110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

AMD this Monday in a blog post demystified the boosting algorithm and thermal management of its new Radeon RX 5700 series "Navi" graphics cards. These cards are beginning to be available in custom designs by AMD's board partners, but were only available as reference-design cards for over a month since their 7th July launch. The thermal management of these cards spooked many early adopters accustomed to seeing temperatures below 85 °C on competing NVIDIA graphics cards, with the Radeon RX 5700 XT posting GPU "hotspot" temperatures well above 100 °C, regularly hitting 110 °C, and sometimes even touching 113 °C with stress-testing applications such as Furmark. In its blog post, AMD stated that 110 °C hotspot temperatures under "typical gaming usage" are "expected and within spec."

AMD also elaborated on what constitutes "GPU Hotspot" aka "junction temperature." Apparently, the "Navi 10" GPU is peppered with an array of temperature sensors spread across the die at different physical locations. The maximum temperature reported by any of those sensors becomes the Hotspot. In that sense, Hotspot isn't a fixed location in the GPU. Legacy "GPU temperature" measurements on past generations of AMD GPUs relied on a thermal diode at a fixed location on the GPU die which AMD predicted would become the hottest under load. Over the generations, and starting with "Polaris" and "Vega," AMD leaned toward an approach of picking the hottest temperature value from a network of diodes spread across the GPU, and reporting it as the Hotspot.

On Hotspot, AMD writes: "Paired with this array of sensors is the ability to identify the 'hotspot' across the GPU die. Instead of setting a conservative, 'worst case' throttling temperature for the entire die, the Radeon RX 5700 series GPUs will continue to opportunistically and aggressively ramp clocks until any one of the many available sensors hits the 'hotspot' or 'Junction' temperature of 110 degrees Celsius. Operating at up to 110C Junction Temperature during typical gaming usage is expected and within spec. This enables the Radeon RX 5700 series GPUs to offer much higher performance and clocks out of the box, while maintaining acoustic and reliability targets."
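The hotspot rule described here can be sketched in a few lines of Python. This is only an illustration of "take the maximum across the sensor array and compare it with the junction limit"; the function names and the sample readings are hypothetical, and AMD's actual firmware logic is not public in this form.

```python
from typing import Sequence

# Junction limit quoted in AMD's blog post.
JUNCTION_LIMIT_C = 110.0


def hotspot(sensor_temps_c: Sequence[float]) -> float:
    """Return the hottest reading across all on-die sensors."""
    return max(sensor_temps_c)


def may_raise_clocks(sensor_temps_c: Sequence[float],
                     limit_c: float = JUNCTION_LIMIT_C) -> bool:
    """Clocks may keep ramping only while every sensor is below the limit."""
    return hotspot(sensor_temps_c) < limit_c


# Hypothetical readings from four sensors, in degrees Celsius.
readings = [78.0, 91.5, 104.0, 86.2]
print(hotspot(readings), may_raise_clocks(readings))
```

Because the maximum is taken over all sensors, the location of the hotspot can move around the die from one sample to the next.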

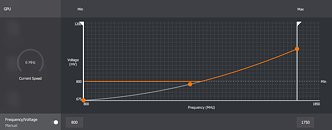

AMD also commented on the significantly increased granularity of clock-speeds that improves the GPU's power-management. The company transitioned from fixed DPM states to a highly fine-grained clock-speed management system that takes into account load, temperatures, and power to push out the highest possible clock-speeds for each component. "Starting with the AMD Radeon VII, and further optimized and refined with the Radeon RX 5700 series GPUs, AMD has implemented a much more granular 'fine grain DPM' mechanism vs. the fixed, discrete DPM states on previous Radeon RX GPUs. Instead of the small number of fixed DPM states, the Radeon RX 5700 series GPU have hundreds of Vf 'states' between the bookends of the idle clock and the theoretical 'Fmax' frequency defined for each GPU SKU. This more granular and responsive approach to managing GPU Vf states is further paired with a more sophisticated Adaptive Voltage Frequency Scaling (AVFS) architecture on the Radeon RX 5700 series GPUs," the blog post reads.
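To see why granularity matters, here is a rough Python sketch comparing a handful of fixed states with a fine-grained table. All clock values, the 5 MHz step, and the "ceiling" figure are invented for illustration; the blog post only says there are "hundreds" of Vf states between idle and Fmax.

```python
from typing import Sequence


def highest_state_at_or_below(states_mhz: Sequence[int], ceiling_mhz: int) -> int:
    """Pick the fastest available state that does not exceed the ceiling."""
    candidates = [f for f in states_mhz if f <= ceiling_mhz]
    # Fall back to the slowest state if the ceiling is below all of them.
    return max(candidates) if candidates else min(states_mhz)


# Hypothetical idle and Fmax clocks, not AMD's real figures.
IDLE_MHZ, FMAX_MHZ = 300, 2100

# A small number of fixed, discrete states (old-style DPM).
coarse_states = [300, 600, 900, 1200, 1500, 1800, 2100]

# Hundreds of states between the same bookends (fine-grain DPM).
fine_states = list(range(IDLE_MHZ, FMAX_MHZ + 1, 5))

# Hypothetical clock ceiling currently allowed by load, temperature, and power.
ceiling = 1787

coarse_pick = highest_state_at_or_below(coarse_states, ceiling)
fine_pick = highest_state_at_or_below(fine_states, ceiling)
print(len(fine_states), coarse_pick, fine_pick)
```

With these made-up numbers the coarse table has to drop to 1500 MHz, while the fine table can sit at 1785 MHz, much closer to what the limits allow.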

Source:

AMD


159 Comments on 110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

We have to remember that most reviews are conducted on open test benches or in open cases, while all customers will run these in closed cases, and even the best of us will not keep it completely dust free. That's why it's important that any product have some thermal headroom when reviewed under ideal circumstances, since real world conditions will always be slightly worse.

The problem here is AMD once again managed to release ref designs that visibly suck, and it's not good for their brand image; it does not show the kind of dedication to their GPUs seen in how Nvidia's releases are managed. The absence of AIB cards at launch makes that problem a bit more painful. And it's not a first - it goes on, and on. In the meantime, we are looking at a 400 dollar card. It's not strange to expect a bit more.

Oh and by the way, I said similar stuff about the Nvidia Founders when Pascal launched, but the difference there was that Pascal and GPU Boost operated at much lower temps. And even thén the FE's still limited performance a bit.

In the past ~10+ years I only had 2 cards die on me and both were Nvidia cards, so there's that.

Don't care about ref/blower cards either, whoever buys those should know what they are buying instead of waiting some time to get 'proper' models.

I'm planning to buy a 5700 but I'm not in a hurry, I can easily wait till all of the decent models are out and then buy one of them 'Nitro/pulse/giga G1 probably'.

And don't forget there is still the usual GPU-Temperature. Which is showing the temperatures we are used to.

We could only compare Nvidia and AMD cards if we had a sensor with a lot of temp zones that we could put between the GPU die and the cooler. Then we could see the hot spots no matter who built the card.

Extra starts and stops mean extra thermal cycles for the wires. This is similar to the concern that other members have raised about the solder joints of the GPU.

Then there is the wear on the bearings, depending on type. Rifle bearings and fluid dynamic bearings require a certain speed to get the lubricant flowing.

This means at start-up there are parts of the bearing with very little lubrication, which causes more wear on the bearing than otherwise.

Now because the fan blades are rather light loads, the motor gets up to speed quickly and the effects are minimal.

Therefore I said it is only slightly detrimental to fan life span.

Shutting the fans off at idle is for noise reasons and nothing else, that is exactly what I said in my post thanks for repeating my point.

No not a fact, it certainly doesn't decrease the number of starts, the GPU fans will spin up at least once on boot.

Also, depending on the design of the card, some GPUs will start the fans on video playback due to the GPU heating up under load for hardware acceleration.

So the best case scenario is it is the same number of start cycles.

So you come with absolutely no data, lots of assumptions & then ignoring historical trends & call whatever I'm saying as utter BS, great :rolleyes:

Could AMD have done a better job with the cooling ~ sure, do we know that the current solution will fail medium - long term? You have absolutely 0 basis to claim that, unless you know more than us about this "issue" or any other on similar products from the competitors.

You moronic faqs, why bother to waste energy commenting at all ! You are in no measure to understand squat, so please go do something else with your life, instead of spamming us on the Forums here !

The performance we get at the end of the day, includes that boost.

You can't count it as something being added on top. More of a PR thing, nothing practical. We don't even know what "spot" temps of NV are. That's simply caused by playing a catch-up game.

And, frankly, I'd rather learn what's coming 1-2 months in advance, rather than wait for Ref and AIB cards to hit together. (I don't even get what ref cards are for, other than that)

Ok, let me re-state this again:

1) AMD used a blower type (stating that is the only way they can guarantee the thermals)

2) Very small perf diff between AIB and Ref proves that even ref 5700 XT is not doing excessive throttling, despite being 20+ degrees hotter.

3) "Spot temperature" is just a number, that makes sense only in pair with ref cards (who buys them) and even there, is not causing practical problems, although I admit that @efikkan has a point and it might have bad impact on card's longevity, still, "ref card, who cares"

In short: possibly bad impact on card longevity, but we are not sure. Definitely not having serious performance impact. We don't even know what values are for NV, as there is no exposed sensor.

lol

www.extremetech.com/gaming/296577-why-110-degree-temps-are-normal-for-amds-radeon-5700-5700-xt

This 110 °C hotspot was there long before Navi/Radeon VII, but people couldn't understand it. Here, one of the people said it very clearly: I hope one day Intel/Nvidia/AMD follow that path and allow us to see the temps of the whole array of sensors.

www.nasa.gov/image-feature/goddard/views-of-pluto-through-the-years

this is best example for those who don't understand.:rolleyes:

From 1995 to 2015. It took them 20 years to get a SHARP image of Pluto.

I find it amazing that they have 32 sensors on the Vega and 64 on the Radeon VII. I wonder how many the Navi GPUs have. And if we get a tool to see the temps in a colored 2d-texture we could see where and when what part of the GPU is utilized.

And think about the huge GPU dies of the Nvidia RTX cards. They likely have a lot of headroom with a junction temp optimization.

People complaining about the "not so good" 7nm GPUs. It is a new process, and it will take some time to get the best out of that manufacturing node. And we will see how good Nvidia's architecture is scaling on 7nm when it will be released :)

By the way, your 7700K link kinda underlines that we know about the 'hot spots' on Intel processors, otherwise you wouldn't have that reading. But these Navi temps are not 'spikes'. They are sustained.

We can keep going in circles about this but the idea that Nvidia ref also hits these temps is the same guesswork; but we do have much better temp readings from all other sensors on a ref FE board - including memory ICs. And note: FE's throttle too but I've seen GPU Boost in action and it does the job a whole lot better; as in, it will rigorously manage voltages and temps instead of 'pushing for the limit' like we see on these Navi boards. This is further underlined by the OC headroom the cards still have. There are more than enough 'data points' available...

Besides, nothing is really new here - AMD's ref cards have always been complete junk.

We are never sure until after the fact. I'll word it differently. The current state of affairs does not instill confidence. And no, I don't 'care' about ref cards either, but I pointed that out earlier; AMD should, especially when AIB cards are late to the party. These kinds of events kill their momentum for any GPU launch, and it keeps repeating itself.

With both cards overclocked, the AIB MSI 2070 Gaming Z (not Super) is still about 5% faster than the MSI Evoke 5700 XT ... so if the price difference is deemed big enough (-$50) I can see the attraction ... but the 5700 XT being 2.5 times as loud is a deal breaker at any price. The Sapphire is slower still but it's significantly quieter.

MSI 2070 = 30 dbA

MSI 5700 XT = 43 dba .. 13 dba = 2.46 times as loud
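The "2.46 times as loud" figure follows from the common rule of thumb that every 10 dB increase is perceived as roughly a doubling of loudness. A small Python sketch of that arithmetic, assuming this rule of thumb is what the comment relies on (the function name is made up here):

```python
def loudness_ratio(delta_db: float) -> float:
    """Perceived loudness ratio, assuming +10 dB is heard as twice as loud."""
    return 2 ** (delta_db / 10)


# 43 dBA (MSI 5700 XT) versus 30 dBA (MSI 2070), as quoted in the comment.
ratio = loudness_ratio(43 - 30)
print(f"{ratio:.2f} times as loud")
```

Note that this is a perceptual approximation, not a ratio of sound pressure or power.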

tpucdn.com/review/msi-radeon-rx-5700-xt-evoke/images/relative-performance_2560-1440.png

MSI 5700 XT = 100%

Reference 2070 = 96%

tpucdn.com/review/msi-radeon-rx-5700-xt-evoke/images/overclocked-performance.png

MSI Evoke Gain from OC = 100% x (119.6 / 115.1) = 103.9

tpucdn.com/review/msi-geforce-rtx-2070-gaming-z/images/overclocked-performance.png

MSI 2070 Gain from overclocking = 96% x (144.5 / 128.3) = 108.1

108.1 / 103.9 = + 4.04%

The Gaming Z is $460, the Evoke is suggested at $430.... it will likely be higher for the 1st few months.

If we ask, "Is a 5% increase in performance worth a 7% increase in price?" It would be to me. But with a $1200 build versus a $1230 build, that's a 5% increase in speed for a 2.5% increase in price, and that's a more appropriate comparison as the whole system is faster and the card doesn't deliver any fps sitting on your desk. However, the 800 pound gorilla in the room is the 43 dbA, 2.5 times as loud thing.

I think the issue here is, from what we have seen so far, most of the 5700 XT cards are not true AIB cards but more like the EVGA Black series ... pretty much a reference PCB with an AIB cooler. Asus went out and beefed up the VRMs with an 11 / 2 + 1 design versus the 7 / 2 reference. They didn't cool nearly as well as the MSI 5700 XT or 2070. They did a lot better on the "outta the box" performance but OC headroom was dismal. As the card was so aggressively OC'd in the box, manual OC'ing added just 0.7% performance.

Asus 5700 XT Strix = 100% x (118.3 / 117.4) = 100.77

MSI 2070 Gaming Z = 95% x (144.5 / 128.3) = 107.00

107.00 / 100.77 = + 6.18 %
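The comparison above scales each card's stock relative performance by its overclocking gain and then takes the ratio. A short Python sketch that reproduces the Asus versus MSI 2070 numbers, using only the figures quoted in the comment (the helper name and argument names are made up for illustration):

```python
def overclocked_score(stock_relative_pct: float,
                      oc_result: float,
                      stock_result: float) -> float:
    """Scale a card's stock relative performance by its gain from overclocking."""
    return stock_relative_pct * oc_result / stock_result


# Figures as quoted in the comment above.
asus_5700xt = overclocked_score(100, 118.3, 117.4)
msi_2070 = overclocked_score(95, 144.5, 128.3)

# Lead of the overclocked 2070 over the overclocked Asus 5700 XT, in percent.
lead_pct = (msi_2070 / asus_5700xt - 1) * 100
print(f"{asus_5700xt:.2f} {msi_2070:.2f} {lead_pct:+.2f}%")
```

The same helper can be reused for any other pair of cards as long as the stock percentages come from the same relative-performance chart.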

Interesting tho that Asus went all out, spending money on the PCB redesign when MSI (and Sapphire) look like they used a cheaper memory controller than the reference card, and yet MSI hit 119.6 in the OC test whereas Asus only hit 118.3. Still, tho it will surely cost closer to what the premium AIB 2070s cost due to the PCB redesign and tho it's 7C hotter and 6% slower than the MSI 2070 ... it's only 6 dbA louder (performance BIOS). To get lower (+2 dbA), the performance drops and temps go up to 82C.

Tho the Asus is 6% slower and the MSI is 5% slower than the MSI 2070.... if I couldn't get a 2070, and was looking to choose a 5700 XT, it would have to be the Asus. ... but not at $440.

As for the hot spots, I'm kinda betwixt and between ... Yes, I'm inclined to figure that I have neither the background nor experience to validate or invalidate what they are saying .... but in this era ... lying to everybody seems to be common practice. In recent memory we have AMD doing the "it was designed that way" routine when the 6 pin 480's were creating fireworks .... and then they issued a soft fix, followed by a move to 8-pin cards. EVGA said "we designed it that way" when 1/3 of the heat sink missed the GPU on the 970 .... and again, shortly thereafter they issued a redesign. Yet again, when the EVGA 1060s thru 1080s started smoking, the "we designed it that way" mantra was the 1st response and then there was the recall / do it yaself kit / redesign with thermal pads.

All I can say is "I don't know ... I'm in no position to judge. Ask me again in 6 months after we get user feedback." But I'm also old enough to remember AMD having fun at Nvidia's expense, frying an egg on the GTX 480 card.

How much is the power consumption when those GPUs pass 100°C ??

Every modern GPU has a power target set in the BIOS; without any overclock it just reaches the power target and stays there.

It is not like the old days where GPUs ran themselves into the ground when you ran Furmark.

i have only had nvidia cards die too but that's because they are always the best bang for buck. (only bought a few amd gpu's: 9800 that could unlock shaders? or 9700 vanilla? i forget, and the 1950xtxtxtx? still have it on the wall of my garage.) Most deaths are from a simple cap that i could have replaced, but by the time they die i would rather hang them on the wall than repair and use them. (maybe 30 motherboards, some with cpu's and coolers intact, on my wall and 20 gfx cards over the years.)

it's strange to me why people want a 5700 anyway, the 1080ti has been out for how long? i purchased two of them used long ago for 450 and 500 (just about 2 years ago to the day) and they seem to run better than the new 5700xt in every scenario. so it's people that love the amd brand and are hoping for a better future?

if i was to purchase a card today it would be an open box 2080, i think they run 550? Too bad nothing has hdmi 2.1, so i will just sit and wait for next gen after next gen, still so slow and overpriced. (i'd be happy with 8k@120hz hehehe)