Wednesday, March 4th 2020

Three Unknown NVIDIA GPUs GeekBench Compute Score Leaked, Possibly Ampere?

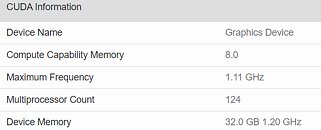

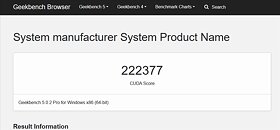

(Update, March 4th: Another NVIDIA graphics card has been discovered in the Geekbench database, this one featuring a total of 124 CUs. This could amount to some 7,936 CUDA cores, should NVIDIA keep the same 64 CUDA cores per CU, though this has changed in the past, as when NVIDIA halved the number of CUDA cores per CU from Pascal to Turing. The 124 CU graphics card is clocked at 1.1 GHz and features 32 GB of HBM2e, delivering a score of 222,377 points in the Geekbench benchmark. We again stress that these may just be engineering samples with conservative clocks, and that final performance could be even higher.)
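The core-count estimate in the update is a simple multiplication, sketched below for illustration. The helper name `estimate_cuda_cores` is hypothetical, and the 128 cores-per-CU case is only an assumption added to show how sensitive the estimate is to that ratio; it is not a figure from the leak.

```python
# Minimal sketch: estimate CUDA cores from the CU count Geekbench reports.
# `estimate_cuda_cores` is a hypothetical helper, not part of any NVIDIA or Geekbench API.

def estimate_cuda_cores(compute_units: int, cores_per_cu: int = 64) -> int:
    """Return the CUDA core count implied by a CU count and a cores-per-CU ratio."""
    return compute_units * cores_per_cu

# 64 cores per CU is the ratio the article assumes.
print(estimate_cuda_cores(124))        # 7936

# Illustrative assumption only: what the same 124 CUs would mean at 128 cores per CU.
print(estimate_cuda_cores(124, 128))   # 15872
```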

NVIDIA is expected to launch its next-generation Ampere lineup of GPUs during the GPU Technology Conference (GTC), which runs from March 22nd to March 26th. Just a few weeks before the expected release, Geekbench 5 compute scores measuring the OpenCL performance of unknown GPUs, which we assume are part of the Ampere lineup, have appeared. Thanks to Twitter user "_rogame" (@_rogame), who obtained the Geekbench database entries, we have some information about the CUDA core configuration, memory, and performance of the upcoming cards.

In the database, there are two unnamed GPUs. The first has 7552 CUDA cores running at 1.11 GHz. Equipped with 24 GB of VRAM of an unknown type, it is configured with 118 Compute Units (CUs) and scores 184096 in the OpenCL test. Compared to a V100, which scores 142837 in the same test, that is an improvement of almost 30%. Next up is a GPU with 6912 CUDA cores running at 1.01 GHz and featuring 47 GB of VRAM. This is the less powerful model, with 108 CUs and a score of 141654 in the OpenCL test. Both models have odd memory configurations: 24 GB for the more powerful model and 47 GB (which should be 48 GB) for the weaker one. The results are not recent, as they date back to October and November, so these may be engineering samples, and the clock speeds and memory configuration might change before launch.
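As a quick sanity check on the figures above, the following sketch recomputes the cores-per-CU ratio and the gap to the V100 from the leaked numbers. The function names and the dictionary labels are made up for illustration; only the core counts, CU counts, and scores come from the Geekbench entries quoted in the article.

```python
# Minimal sketch: recompute the ratios quoted in the article from the leaked figures.
# Function names and GPU labels are hypothetical; the numbers are from the article.

V100_SCORE = 142837  # Geekbench 5 OpenCL score the article quotes for the V100

LEAKED_GPUS = {
    "118 CU sample": {"cores": 7552, "cus": 118, "score": 184096},
    "108 CU sample": {"cores": 6912, "cus": 108, "score": 141654},
}

def cores_per_cu(cores: int, cus: int) -> float:
    """CUDA cores per Compute Unit."""
    return cores / cus

def percent_vs_v100(score: int) -> float:
    """Score difference relative to the V100, in percent."""
    return (score / V100_SCORE - 1) * 100

for name, gpu in LEAKED_GPUS.items():
    ratio = cores_per_cu(gpu["cores"], gpu["cus"])
    delta = percent_vs_v100(gpu["score"])
    print(f"{name}: {ratio:.0f} cores/CU, {delta:+.1f}% vs V100")
```

Both entries work out to 64 cores per CU, and the 118 CU sample lands roughly 29% ahead of the V100, which matches the "almost 30%" figure; the 108 CU sample comes in just under the V100 score.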

Sources:

@_rogame (Twitter), Geekbench


62 Comments on Three Unknown NVIDIA GPUs GeekBench Compute Score Leaked, Possibly Ampere?

Nvidia has been making cards with 24 GB RAM since Maxwell.

47 is likely a mistake, though.

GA100 largest chip

- 800mm2 die

- 55 Billion transistors

- 2304 Gbps bandwidth speed

- 6 HBM2 memory stacks

www.personal-view.com/talks/discussion/22993/nvidia-ampere-gpus-coming

Still I am surprised Nvidia allowed usage of gaming level GPU to access both Tensorflow and full CUDA developer toolkit. I am seeing 1060 all the way to Titan RTX used in genomic workstations. Maybe it is just to get all the research labs hooked up on their butter smooth computation experience.

Modern games do quite a lot of compute work on GPU aka compute shaders. But building compute pipelines (including openCL) is still not as productive as CUDA. I guess it could be an attempt to lure developers to make use of CUDA interoperability, and therefore more dependence on Nvidia GPU.

You're claiming otherwise, so the burden of proof is on you.

What the hell, they'd probably do it if they could market the card as "8K" optimized.

graphicscardhub.com/how-much-vram-for-gaming/

www.gamingscan.com/how-much-vram-do-i-need/

nvidia/comments/bbxic1

Check this quote, and I do agree:

"Funny side note to prove this point; if you play the RE2 Remake it has a VRAM usage display next to the graphic settings. At 1440P with max settings the game will tell you it needs 11GB of VRAM and gives you a big warning, yet running the game itself, I never went over 7GB, and again, that's allocated not actually used. "

"Yeah, to actually run out of vram on re2 on 11gb I had to run 8k with maxed out graphics and at least "6gb high textures" option. At "4gb high textures" it would run fine. Of course fps was low but no stutters. "

Finished re2 remake and was playing on 4k and used all my vram memory, had to set it right to be at 90%, lower settings.

Also, like I said those 24/48GB VRAM, if real, are most likely for professional cards.

Actually, looking around a bit, it seems RE2 Remake is downright broken when reporting VRAM needs: forums.overclockers.co.uk/threads/resident-evil-2-remake-biggest-use-of-vram-in-an-official-release.18843137/

:laugh:

And I've seen AMD OpenCL 2.0 cards beaten by Nvidia OpenCL 1.2 cards in less professional apps.

NV has refused to support OpenCL 2.0 to force apps to use CUDA to support the newer functions. If they weren't scared, they'd enable the support.

As for performance a Radeon VII will smack around a 2080 Ti in OpenCL workloads.

For mining on GPUs, 290s, 390s, Vegas were god.

NV is scared because they pull in loads of cash from CUDA licensing. OpenCL torpedoes that.