Friday, April 3rd 2020

Ryzen 7 3700X Trades Blows with Core i7-10700, 3600X with i5-10600K: Early ES Review

Hong Kong-based tech publication HKEPC posted a performance review of a few 10th generation Core "Comet Lake-S" desktop processor engineering samples they scored. These include the Core i7-10700 (8-core/16-thread), the i5-10600K (6-core/12-thread), the i5-10500, and the i5-10400. The four chips were paired with a Dell-sourced OEM motherboard based on Intel's B460 chipset, 16 GB of dual-channel DDR4-4133 memory, and an RX 5700 XT graphics card to make the test bench. This bench was compared to several Intel 9th generation Core and AMD 3rd generation Ryzen processors.

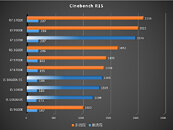

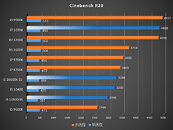

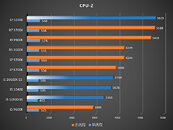

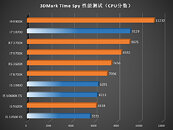

Among the purely CPU-oriented benchmarks, the i7-10700 was found to be trading blows with the Ryzen 7 3700X. It's important to note here that the i7-10700 is a locked chip, possibly with a 65 W rated TDP. Its 4.60 GHz boost frequency is lower than that of the unlocked, 95 W i9-9900K, which ends up topping most of the performance charts where it's compared to the 3700X. Still, the comparison between the i7-10700 and the 3700X can't be dismissed, since the new Intel chip could launch at roughly the same price as the 3700X (if you go by i7-9700 vs. i7-9700K launch price trends).

The Ryzen 7 3700X beats the Core i7-10700 in Cinebench R15, but falls behind in Cinebench R20. The two end up within 2% of each other in CPU-Z bench and in the 3DMark Time Spy and Fire Strike Extreme physics scores. The mid-range Ryzen 5 3600X has much better luck warding off its upcoming rivals, with significant performance leads over the i5-10600K and i5-10500 in both versions of Cinebench, CPU-Z bench, and both 3DMark tests. The i5-10400 is within 6% of the i5-10600K. This is important, as the iGPU-devoid i5-10400F could retail well under $190, about two-thirds the price of the i5-10600K.

These performance figures should be taken with a grain of salt, since engineering samples can perform very differently from retail chips. Intel is expected to launch its 10th generation Core "Comet Lake-S" processors and Intel 400-series chipset motherboards on April 30. Find more test results in the HKEPC article linked below.
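A rough way to read the i5-10400 vs. i5-10600K point is performance per dollar. The sketch below uses only the two ratios stated in the article (within 6% of the i5-10600K, at about two-thirds of its price). The helper name `perf_per_dollar_ratio` is hypothetical, and treating "within 6%" as exactly 94% is an assumption that gives the worst case for the cheaper chip.

```python
def perf_per_dollar_ratio(rel_perf: float, rel_price: float) -> float:
    """Value of chip A relative to chip B.

    rel_perf:  A's performance as a fraction of B's (e.g. 0.94)
    rel_price: A's price as a fraction of B's (e.g. 2/3)
    """
    if rel_price <= 0:
        raise ValueError("rel_price must be positive")
    return rel_perf / rel_price


# Assumption: "within 6%" taken as the worst case, 94% of the i5-10600K,
# at two-thirds of its price.
ratio = perf_per_dollar_ratio(0.94, 2 / 3)
print(f"i5-10400(F) vs i5-10600K: ~{ratio:.2f}x the performance per dollar")
```

Even in that worst case, the cheaper chip would deliver roughly 40% more performance per dollar, which is why its pricing matters more than its small deficit.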

Source:

HKEPC


97 Comments on Ryzen 7 3700X Trades Blows with Core i7-10700, 3600X with i5-10600K: Early ES Review

Intel chips have to be patched for Meltdown as well as a bunch of other vulnerabilities, including SGX; the patches aren't limited to Spectre :rolleyes:

This is the latest I could find on Phoronix. Again, I'll add that a truly apples-to-apples comparison is nigh impossible, but any mitigation, hardware or software, will have an impact on performance!

www.phoronix.com/scan.php?page=article&item=3900x-9900k-mitigations&num=8

There is some overall performance hit due to software changes to mitigate Spectre but that affects everyone across the board.

I can't find the exact test right now. Look for the 9900K R0 results in a mitigation performance article from before MDS was found/published.

The problem with finding a good comparison for this is that Intel has an increasing number of mitigations across 3 or 4 different steppings, plus most of the time there is an issue that is not fixed in hardware :D

Zen chips are chiplet designs, so the yields are way better than Intel's. That's why AMD has much lower costs, as has been discussed constantly for the last 3 years now.

It's way worse now for Intel than it was back in 2017. These 10xxx series chips push clocks and power draw way beyond what Intel's 14nm process was ever intended or supposed to reach.

It wouldn't surprise me if the yield for i9 10xxx chips is less than 40%. I'd be absolutely amazed if it was much over 50%.

AMD can push their price way lower whilst still retaining a decent margin.
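The chiplet-yield argument can be made concrete with the simple Poisson yield model, Y = exp(-D0 × A), where D0 is the defect density and A is the die area. This is a textbook model, not anything AMD or Intel has published, and the defect density and die areas below are illustrative assumptions (the areas are only approximate sizes for a Zen 2 compute chiplet and an 8-core Coffee Lake die).

```python
import math


def poisson_yield(defects_per_cm2: float, die_area_mm2: float) -> float:
    """Fraction of defect-free dies under the Poisson yield model Y = exp(-D0 * A)."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-defects_per_cm2 * area_cm2)


# Hypothetical inputs for illustration only, not published foundry figures.
D0 = 0.1                      # defects per cm^2
SMALL_CHIPLET_MM2 = 75.0      # roughly a Zen 2 compute chiplet
LARGE_MONOLITHIC_MM2 = 175.0  # roughly an 8-core Coffee Lake die

for name, area in [("chiplet", SMALL_CHIPLET_MM2), ("monolithic", LARGE_MONOLITHIC_MM2)]:
    print(f"{name:>10}: {area:5.0f} mm^2 -> {poisson_yield(D0, area):.1%} defect-free")
```

The model only counts fully defect-free dies. It ignores salvaging partly defective dies into lower SKUs and binning for clock speed, and the latter matters a lot for chips pushed as hard as the 10xxx i9s.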

---

Anyway, these look like a very poor proposition vs Ryzen 3xxx... and are likely to look outright Pentium 4-ish vs Ryzen 4xxx.

The 9900K takes a 5.5% hit vs 3.7% for the 3900X. The 3900X should actually do slightly better still; for some reason Zen 2 seems to have a slightly heavier spectre_v2 mitigation enabled.
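On Linux, one way to check which mitigations are actually enabled (the spectre_v1, spectre_v2 and spec_store_bypass entries discussed here) is the kernel's per-vulnerability status files under `/sys/devices/system/cpu/vulnerabilities`. A minimal sketch, assuming a kernel that exposes that directory; the function name `read_mitigations` is hypothetical.

```python
from pathlib import Path

# Linux kernel directory with one status file per CPU vulnerability.
VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_mitigations(vuln_dir: Path = VULN_DIR) -> dict[str, str]:
    """Map each vulnerability file name (e.g. 'spectre_v2') to its status line."""
    if not vuln_dir.is_dir():
        return {}  # not Linux, or a kernel without this interface
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(vuln_dir.iterdir())
        if entry.is_file()
    }


if __name__ == "__main__":
    for name, status in read_mitigations().items():
        print(f"{name:20} {status}")
```

Each status line reads something like "Not affected", "Vulnerable" or "Mitigation: ...", which makes it easy to see when two CPUs are running different mitigation sets in the same benchmark.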

Edit:

My point was about hardware fixes. If you look at the enabled mitigations, both CPUs have several mitigations active for spec_store_bypass, spectre_v1 and spectre_v2.

Edit2:

EPYC Rome vs Cascade Lake with a similar mitigation setup shows a 2.2% vs 2.8% impact from mitigations:

www.phoronix.com/scan.php?page=article&item=epyc-rome-mitigations&num=1
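Since each mitigation hit is measured against that chip's own unmitigated score, its effect on a head-to-head gap is a ratio of the retained performance, not a simple subtraction. A minimal sketch using only the percentages quoted in these comments; the helper names are hypothetical.

```python
def retained(hit_pct: float) -> float:
    """Fraction of unmitigated performance left after a hit given in percent."""
    return 1.0 - hit_pct / 100.0


def gap_shift_pct(hit_a: float, hit_b: float) -> float:
    """How much chip B gains relative to chip A (in percent) once both are mitigated."""
    return (retained(hit_b) / retained(hit_a) - 1.0) * 100.0


# Overall mitigation hits quoted in the comments above (first chip, second chip).
pairs = {
    "9900K vs 3900X": (5.5, 3.7),
    "Cascade Lake vs EPYC Rome": (2.8, 2.2),
}
for label, (hit_a, hit_b) in pairs.items():
    print(f"{label}: second chip gains {gap_shift_pct(hit_a, hit_b):.1f}% relative")
```

With these numbers the 3900X gains under 2% relative to the 9900K, and Rome well under 1% relative to Cascade Lake, so mitigations shift the standings only slightly.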

Zen 2 has several advantages, including energy efficiency and performance advantages in several areas, such as (large) video encoding and Blender rendering, which are relevant considerations for many buyers.

The decision should come down to which product is objectively better for the specific user's use case. Unlike a few years ago where there was a single clear option, today the "winner" heavily depends on the use case.

Most 300-series chipsets are reportedly made on a 14nm process and measure 50-60 mm².

I do not see how that would be hugely profitable for them, even less so given the shortage of manufacturing capacity.
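To put the capacity point in numbers, the common gross dies-per-wafer approximation, DPW ≈ πr²/A − πd/√(2A), estimates how many chipset dies fit on one wafer. A sketch assuming standard 300 mm wafers and the 50-60 mm² range quoted above; it ignores scribe lines, edge exclusion and yield, and the function name is hypothetical.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Gross dies-per-wafer approximation (ignores scribe lines and edge exclusion)."""
    radius = wafer_diameter_mm / 2.0
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(gross - edge_loss)


# Assumption: 300 mm wafers; die sizes from the 50-60 mm^2 range quoted above.
for area in (50.0, 55.0, 60.0):
    print(f"{area:.0f} mm^2 chipset die -> ~{dies_per_wafer(300.0, area)} gross dies per wafer")
```

That works out to roughly 1,100-1,300 chipset dies per wafer, each of which sells for far less than a CPU, so every wafer spent on chipsets is capacity not spent on processors.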

Actually, I should make my 10940X a 5.0 GHz processor. I still have plenty of headroom.

Their strategy was advancing process technology as fast as possible, and for many years they were the best, easily 2-3 years ahead of every other manufacturer. This process advantage allowed them to increase core sizes and caches, keep frequencies relatively low (usually under 4 GHz) and improve performance with each generation.

The improvements in each generation were decided based on various factors, like cost, competition, software optimization for multi-core, etc.

Intel had basically 0 competition up until 2017. None. For that you can thank AMD.

Also, software wasn't very multithreaded up until 2015-2016, right when Skylake launched.

So, if you use your brain, you will see that there was no point in this world for Intel to launch a 20-core CPU in 2013, when games usually used 2-4 cores at most and even Windows 7 or 8 was limited to a small number of cores.

Professional users, on the other hand, had options in the form of HEDT products, whose core counts increased each year.

I think this still holds true today, with the exception that the sweet spot for number of cores has now moved to 8 cores, thanks to consoles.

So use your brain and understand all the variables involved and then start making judgements.

Example: if only 10% of users have 12-core CPUs, of course games won't utilise them.

Games will wait until 60% or more of users have these 12-core SKUs.

As any good programmer can tell you, doing multithreading well is hard, and doing multithreading badly is worse than no multithreading at all. And just because an application spawns extra threads doesn't mean performance benefits.
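Amdahl's law (a standard model, not something from the comments) shows why extra cores only help as much as the parallel share of the work allows: speedup = 1 / ((1 − p) + p / n) for parallel fraction p on n cores. A minimal sketch; the parallel fractions below are hypothetical workloads, not measurements of any game.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup from Amdahl's law: 1 / ((1 - p) + p / n).

    Ignores synchronization and thread-management overhead; real code pays
    those costs, which is how badly threaded code can end up slower than
    a single thread.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical workloads: share of each frame's work that can run in parallel.
for p in (0.5, 0.8, 0.95):
    speedups = ", ".join(f"{n}c: {amdahl_speedup(p, n):.2f}x" for n in (4, 8, 12, 16))
    print(f"p={p:.2f} -> {speedups}")
```

With half the work serial, even 16 cores give less than a 2x speedup, which is why core counts alone don't make software faster.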

But with such powerful 16-core Ryzen CPUs, programmers can start realising that they can move heavy work off the GPU and onto the CPU.

Physics, AI, etc. all need CPU acceleration.