Friday, April 3rd 2020

Ryzen 7 3700X Trades Blows with Core i7-10700, 3600X with i5-10600K: Early ES Review

Hong Kong-based tech publication HKEPC posted a performance review of a few 10th generation Core "Comet Lake-S" desktop processor engineering samples they scored. These include the Core i7-10700 (8-core/16-thread), the i5-10600K (6-core/12-thread), the i5-10500, and the i5-10400. The four chips were paired with a Dell-sourced OEM motherboard based on Intel's B460 chipset, 16 GB of dual-channel DDR4-4133 memory, and an RX 5700 XT graphics card to make the test bench. This bench was compared to several Intel 9th generation Core and AMD 3rd generation Ryzen processors.

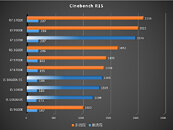

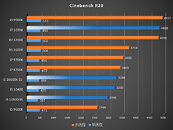

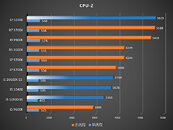

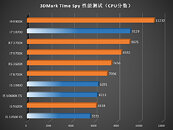

Among the purely CPU-oriented benchmarks, the i7-10700 was found to be trading blows with the Ryzen 7 3700X. It's important to note here that the i7-10700 is a locked chip, possibly with a 65 W rated TDP. Its 4.60 GHz boost frequency is lower than that of the unlocked, 95 W i9-9900K, which ends up topping most of the performance charts where it's compared to the 3700X. Still, the comparison between the i7-10700 and 3700X can't be dismissed, since the new Intel chip could launch at roughly the same price as the 3700X (if you go by i7-9700 vs. i7-9700K launch price trends).

The Ryzen 7 3700X beats the Core i7-10700 in Cinebench R15, but falls behind in Cinebench R20. The two end up performing within 2% of each other in CPU-Z bench, 3DMark Time Spy and FireStrike Extreme (physics scores). The mid-range Ryzen 5 3600X has much better luck warding off its upcoming rivals, with significant performance leads over the i5-10600K and i5-10500 in both versions of Cinebench, CPU-Z bench, as well as both 3DMark tests. The i5-10400 is within 6% of the i5-10600K. This is important, as the iGPU-devoid i5-10400F could retail at price points well under $190, two-thirds the price of the i5-10600K.

These performance figures should be taken with a grain of salt since engineering samples have a way of performing very differently from retail chips. Intel is expected to launch its 10th generation Core "Comet Lake-S" processors and Intel 400-series chipset motherboards on April 30. Find more test results in the HKEPC article linked below.

Source:

HKEPC

97 Comments on Ryzen 7 3700X Trades Blows with Core i7-10700, 3600X with i5-10600K: Early ES Review

You must have the games behaving in the same way, otherwise it's pure wastage of silicon.

Just run your games on a GPU, then.

Cinebench is doing ray tracing. Games use far more efficient ways to render a scene, and that rendering is done on the GPU.

Physics needs faster CPUs and you get physics done on the CPU.

So, no, it's not done on the GPU, and it will not and should not be.

In a game at 120 FPS, each frame has an 8.3 ms window for everything that happens during that frame. In modern OSes like Windows or Linux you can easily get latencies of 0.1-1 ms (or more) due to scheduling, since they are not realtime operating systems. Good luck with having your 16 render threads sync up many times within a single frame without causing serious stutter.
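To put rough numbers on that, here is a minimal sketch of the frame-budget arithmetic. The count of four sync points per frame is purely an assumption for illustration; the 0.1-1 ms latencies are the range quoted above.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available for one frame, in milliseconds."""
    return 1000.0 / fps


def budget_lost_to_sync(fps: float, syncs_per_frame: int, latency_ms: float) -> float:
    """Fraction of the frame budget spent waiting, if every sync point
    pays the full scheduling latency (a pessimistic assumption)."""
    return syncs_per_frame * latency_ms / frame_budget_ms(fps)


if __name__ == "__main__":
    print(f"Budget at 120 FPS: {frame_budget_ms(120):.1f} ms")
    # syncs_per_frame=4 is a hypothetical value, not a measurement.
    for latency_ms in (0.1, 1.0):
        share = budget_lost_to_sync(120, syncs_per_frame=4, latency_ms=latency_ms)
        print(f"latency {latency_ms} ms -> {share:.0%} of the frame budget")
```

Even with only a handful of sync points, the pessimistic end of the latency range eats a large share of the frame.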

You clearly didn't understand the contents of my previous post. I mentioned asynchronous vs. synchronous workloads. Non-realtime rendering jobs deal with "large" work chunks on the second or minute scale, and can work independently and only need to sync up when they need the next one. In this case the synchronization overhead becomes negligible, which is why such workloads can scale to an almost arbitrary count of worker threads.

Realtime rendering, however, is a pipeline of operations which need to be performed within a very tight performance budget on the ms scale, and the steps of this pipeline are down on the microsecond scale. Then any synchronization overhead becomes very expensive, and such overhead usually grows with thread count.
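The difference between the two workload types can be sketched as a simple ratio of sync cost to useful work. The 0.5 ms sync cost and the chunk sizes below are assumed round numbers picked from the scales mentioned in this thread, not measurements.

```python
def sync_overhead_fraction(chunk_s: float, sync_cost_s: float) -> float:
    """Share of wall time spent synchronizing when each work chunk
    of length chunk_s is followed by one sync costing sync_cost_s."""
    return sync_cost_s / (chunk_s + sync_cost_s)


if __name__ == "__main__":
    sync_cost_s = 0.0005  # assumed 0.5 ms per sync point

    # Offline render: chunks on the second scale (assumed 1 s each).
    offline = sync_overhead_fraction(1.0, sync_cost_s)
    # Realtime pipeline step: microsecond scale (assumed 100 us each).
    realtime = sync_overhead_fraction(100e-6, sync_cost_s)

    print(f"offline chunk:  {offline:.3%} overhead")
    print(f"realtime step:  {realtime:.1%} overhead")
```

The same sync cost is noise for second-scale chunks and dominates microsecond-scale steps.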

Physics is both a complex and simple problem at the same time. Parts of it are better run on CPU, parts are better run on GPU.

GPU is a lot (A LOT) of simple computation devices for parallel compute and largely a SIMD device.

CPU is a complex compute device that is a lot more powerful and independent.

Both have their strengths and weaknesses but especially in games they complement each other.

Edit:

By the way, what you see in 3DMark Physics tests are still rendered on GPU although its load is deliberately kept as low as possible.

Yields have probably never been worse on 'small' chips for a desktop platform than this 10xxx series. How could they have been? These are stretched to absolute breaking point. And why? Because they have no other choice.

It's why you have the obscenity of a desktop 16 core 3950X, on Clevo's new laptop workstation platform, limited to a strict 65W TDP, and Intel's new top 8 core laptop chip drawing 176W on a similar platform.

Ryzen 4000 is a much greater threat than a 3700X OC is. Rumors are pointing to a 15% IPC increase and 300-500 MHz higher clock rates. Even if AMD only managed a 10% IPC jump with the same clocks, or a 5% IPC jump with their CPUs able to hit 4.7-4.8 GHz reliably instead of 4.5-4.6, they would take what remains of Intel's performance crown, especially in games, as AMD's cache changes should dramatically reduce per core latency, which is what holds Ryzen back in gaming applications.
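For a rough feel of those scenarios, here is a first-order sketch that treats performance as IPC times clock speed. That linear scaling is an assumption (it ignores memory and cache effects), and the clocks used are just the midpoints of the ranges quoted above.

```python
def relative_performance(ipc_gain: float, old_clock_ghz: float, new_clock_ghz: float) -> float:
    """First-order estimate: performance scales with IPC x clock.
    Returns the speedup relative to the old chip (1.0 = no change)."""
    return (1.0 + ipc_gain) * (new_clock_ghz / old_clock_ghz)


if __name__ == "__main__":
    old_clock = 4.55  # midpoint of 4.5-4.6 GHz
    scenarios = {
        "10% IPC, same clocks": relative_performance(0.10, old_clock, old_clock),
        "5% IPC, 4.7-4.8 GHz": relative_performance(0.05, old_clock, 4.75),
        "15% IPC, +400 MHz (rumor midpoint)": relative_performance(0.15, old_clock, old_clock + 0.4),
    }
    for name, speedup in scenarios.items():
        print(f"{name}: {speedup - 1.0:+.1%}")
```

Under this simple model the two "modest" scenarios land within a fraction of a percent of each other, at roughly +10%.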

The 10 series from Intel is gonna bomb at this rate. Bonkers power draw that makes the FX 9590 look civilized and heat production that even 360mm rads struggle to handle.

I'm not going to speculate about Zen 3's IPC gains, especially when such rumors are either completely bogus or based on cherry-picked benchmarks which have nothing to do with actual IPC. I've seen estimates ranging from ~7-8% to over 20% (+/- 5%), and such claims are likely BS, because anyone who actually knows would know precisely, not give a large range, as IPC is already an averaged number. And it's very unlikely that anyone outside AMD actually knows until the last few months.
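To illustrate what "IPC is already an averaged number" means in practice, here is a small sketch. The per-workload gains are made-up illustration values, and using a geometric mean is an assumption about how such an average might be formed, not a description of how AMD or Intel compute their figures.

```python
from statistics import geometric_mean

# Hypothetical per-workload speedups at equal clocks (1.10 = +10%).
HYPOTHETICAL_GAINS = {
    "video_encoding": 1.20,
    "gaming": 1.02,
    "compilation": 1.10,
    "compression": 1.09,
}


def average_ipc_gain(per_workload: dict) -> float:
    """Collapse per-workload speedups into one headline number."""
    return geometric_mean(list(per_workload.values()))


if __name__ == "__main__":
    headline = average_ipc_gain(HYPOTHETICAL_GAINS)
    print(f"headline IPC gain: {headline - 1.0:+.1%}")
    for workload, gain in HYPOTHETICAL_GAINS.items():
        print(f"  {workload}: {gain - 1.0:+.0%}")
```

A single headline figure of about +10% here hides a spread from +2% to +20% across workloads.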

The good news for AMD and gaming is that the CPU only has to be fast enough to feed the GPU, and as we can already see with Intel's CPUs pushing ~5 GHz, the gains are really minimal compared to the Skylakes boosting to ~4.5 GHz. Beyond that point you only really gain some more stable minimum frame rates, except for edge cases of course. If Intel today launched a new CPU with 20% higher performance per core, it wouldn't be much faster than the i9-9900K in gaming (1440p), at least not until games all of a sudden become much more demanding on the CPU side while feeding the GPUs, which is not likely. Zen 2 is already fairly close to Skylake in gaming, and Zen 3 should have a good chance to achieve parity, even with modest gains. It really comes down to what kind of areas are improving. Intel's success in gaming is largely due to the CPU's front-end: prefetching, branch prediction, out-of-order window, etc., while other areas like FPU performance will mean much less for gaming. As I said, IPC is already an averaged number across a wide range of workloads, which means a 10% gain in IPC doesn't mean a 10% gain in everything; it could easily mean a 20% gain in video encoding and a 2% gain in gaming, etc.

I'm just curious, why wait for DDR5 of all things?

If you really need memory bandwidth, just buy one of the HEDT platforms, and you'll have plenty. Most non-server workloads aren't usually limited by memory bandwidth anyway, so that would be the least of my concerns for a build.

And then there is always the next big one…

I'm more interested in architectural improvements than nodes. Now that CPUs of 8-12 cores are already widely available as "mainstream", the biggest noticeable gain to end-users would be performance per core.

Yields on 14nm with chips this size are excellent, there is no doubt about that.

Servers are different. LCC (10-core) is 325 mm^2, HCC (18-core) is 485 mm^2 and XCC (28-core) is 694 mm^2. LCC yields are not a big problem, HCC is so-so and XCC yields are definitely a problem.
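Why yield falls off with die area can be sketched with the textbook Poisson yield model, Y = exp(-A * D0). The defect density of 0.1 defects/cm^2 below is an assumed placeholder, not a known figure for Intel's 14nm process, so only the trend is meaningful.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Poisson yield model: probability that a die has zero defects."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


if __name__ == "__main__":
    ASSUMED_D0 = 0.1  # defects per cm^2, illustrative only
    # Die sizes as quoted in the comment above.
    dies = {"LCC (10-core)": 325, "HCC (18-core)": 485, "XCC (28-core)": 694}
    for name, area in dies.items():
        print(f"{name}, {area} mm^2: {poisson_yield(area, ASSUMED_D0):.0%} defect-free")
```

With any fixed defect density, the fraction of defect-free dies drops exponentially with area, which matches the LCC / HCC / XCC ordering described above.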

That 3950X score is a 65 W ECO mode score, meaning "65W TDP", which is really 88-90W.

10980X 107W PL2 and 56s tau are a disgrace, but not that unexpected.

There is a huge difference there, why overblow the numbers to this degree is beyond me.

AMD's Zen 3 will likely have an 8-core CCX, so the incredible amounts of latency added by jumping from core to core on different CCXs will be gone.

And Intel will be RIP.

It likely boosts up to the TDP you mentioned but then settles at its targeted limit of 65 W!

What you are talking about is Intel's system, where PL1 = TDP, PL2 = boost power limit and Tau is the time the CPU is allowed to boost above PL1.

Both are simplified from how they actually function but that is the gist of it.
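The simplified PL1 / PL2 / Tau picture described above can be sketched like this. It deliberately models only "PL2 for Tau seconds, then PL1"; actual hardware behavior is more involved, as noted. The 107 W and 56 s values are the ones quoted earlier in the thread, while the 45 W PL1 is an assumed placeholder.

```python
def allowed_power_w(elapsed_s: float, pl1_w: float, pl2_w: float, tau_s: float) -> float:
    """Simplified power limit: PL2 during the first tau_s seconds of
    sustained load, PL1 afterwards. Not how real firmware computes it."""
    return pl2_w if elapsed_s < tau_s else pl1_w


if __name__ == "__main__":
    PL1_W = 45.0   # assumed placeholder for the sustained limit (TDP)
    PL2_W = 107.0  # boost limit quoted in the thread
    TAU_S = 56.0   # boost window quoted in the thread

    for t in (0, 30, 55, 56, 120):
        print(f"t={t:>3} s -> limit {allowed_power_w(t, PL1_W, PL2_W, TAU_S):.0f} W")
```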

In the best case, you may take a little bit of their computing power over the cloud....... but I doubt it will be anytime soon.

Our internet connections are too slow.

And you can always take normal semiconductor silicon chips and build supercomputers for the very same purpose.

For now, AMD with Zen is your solution with multiple cores.

AMD CTO Mark Papermaster: More Cores Coming in the 'Era of a Slowed Moore's Law'

www.tomshardware.com/news/amd-cto-mark-papermaster-more-cores-coming-in-the-era-of-a-slowed-moores-law

1. Avoiding big dies. Think competing with and overshadowing 18/28-core Intel Xeons, which is what AMD EPYC is currently very successful at.

2. Yields on a cutting edge node. This is largely down to die size.

On smaller dies, chiplet design is not necessarily a benefit.

- Memory latency (and latency to cores on another die) has been talked about a lot, and this is the flip side of the chiplet coin. This is not generally a problem for server CPUs, as the environment, goals and software for those are meant to be well parallelized and distributed. There are niches that get hit but this is very minor. On desktop, there are a bunch of things that do get affected. Games are the most obvious one, both due to the way games work and because games are a big thing for the desktop market.

- At the same time, something like 200mm^2 is not a large die for an old manufacturing process, and yields are not a problem with these. This is the size of a 10-core Intel Skylake-derived CPU. It is probably relevant to mention that AMD has been competing well (and with good prices) with dies that size for the last 3 years. An AMD 8-core Ryzen 3000 has a 125mm^2 IO die (which by itself is the same size as an Intel 4-core CPU) and a 75mm^2 CCD.

10nm is scrapped for the S series.

No matter how you try to spin it, Intel will still offer the highest FPS in a consumer chip for the majority of games now and into the near future, and until AMD can claim this, people will not care about anything else you're using to try and make AMD look like the "right" choice.

The only question for most gamers is: for my "X" amount of dollars to spend, which platform will give me the most FPS in the games I play?

It looks like even with all these "vulnerability fixes" and pushing things to their max, the Intel chips will still be the best for gamers, and until this changes AMD will always be fighting an uphill battle.

I've been ready since the 1800X to jump on the Ryzen train, but sadly when benchmarks came out the 7700K was the better gaming choice. Now, with a new upgrade looming for me, it still looks like even after 3+ years Intel will be the place I go for a maximum gaming performance rig, along with whatever takes the top spot for GPU performance in the upcoming releases from either Nvidia or AMD.

I'm no fanboy of anything but the highest performance and nothing so far shows me as having any other choice than Intel once again.