Thursday, June 25th 2020

Bad Intel Quality Assurance Responsible for Apple-Intel Split?

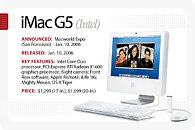

Apple's decision to switch its Mac computers from Intel processors to its own chips, based on the Arm architecture, has shaken up the tech world, even though rumors of the transition had been doing the rounds for months. Intel's first official response, coupled with facts such as Intel's CPU technology execution being thrown completely off gear by foundry problems, pointed toward the likelihood of Intel not being able to keep up with Apple's growing performance/Watt demands. It now turns out that the reasons are a lot more basic, and date back to 2016.

According to a sensational PC Gamer report citing former Intel principal engineer François Piednoël, Apple's dissatisfaction with Intel dates back to some of its first 14 nm chips, based on the "Skylake" microarchitecture. "The quality assurance of Skylake was more than a problem," says Piednoël. "It was abnormally bad. We were getting way too much citing for little things inside Skylake. Basically our buddies at Apple became the number one filer of problems in the architecture. And that went really, really bad. When your customer starts finding almost as much bugs as you found yourself, you're not leading into the right place," he adds.

It was around that time that decisions were taken at the highest levels in Apple to execute a machine architecture switch away from Intel and x86, the second of its kind following Apple's mid-2000s switch from PowerPC to Intel x86. "For me this is the inflection point," says Piednoël. "This is where the Apple guys who were always contemplating to switch, they went and looked at it and said: 'Well, we've probably got to do it.' Basically the bad quality assurance of Skylake is responsible for them to actually go away from the platform."

Apple's decision to dump Intel may only have been hastened by the string of cybersecurity flaws affecting Intel microarchitectures that came to light in 2019. The PC Gamer report cautions that Piednoël's comments should be taken with a pinch of salt, as he has been among the more outspoken engineers at Intel.

Image Courtesy: ComputerWorld

Source:

PC Gamer


81 Comments on Bad Intel Quality Assurance Responsible for Apple-Intel Split?

So yeah, quit the FUD. Fact is while this isn't pure fact yet, it isn't "fake news" either.

For example, a lot of ARM SoCs now have something like a Cortex-M0 as their PMC, they have another custom DSP that handles audio, and they have multiple DSPs that handle video encoding, decoding, transcoding, etc., simply because the ARM cores are not powerful enough and not general purpose enough to do a good job doing these things. Ok, so some of these things are needed to make an SoC work, but ARM based SoCs have many more sub-processors than x86/x64 CPUs have.

Look at the Renoir die shots that were posted last week as an example, not taking the GPU or interface parts into account, how many sub-processors are there in these? AMD has their Platform Security Processor, but that's it afaik. As this is an APU, it obviously has a media engine as well, which most likely contains some kind of DSP at the very least.

Apple is relying on a lot more additional sub-processors to get things done, as per below. They have an always-on processor, they have the crypto accelerator, a neural engine, a machine learning accelerator (aren't the last two the same thing, more or less?) and a camera processor. Ok, so the last one is because this is more of a tablet chip design, but my point here is that x86/x64 doesn't rely on as many extra bits, instead they rely on raw power, for better or worse. Video codecs are one of the simplest examples, as I pointed out in my previous post in this thread. Every time there's a new video codec, a new hardware block has to be added to ARM SoCs for them to be able to play back the codec, unless it's a very simple codec, since the CPU cores are often not capable of playing back video files based on new, more efficient codecs. Yes, this has been an issue in the past with x86/x64 systems too, both H.264 and H.265 had problems on older CPUs and would need 90-100% of the CPU to do software playback. However, on an ARM based SoC from the same period, the same files simply wouldn't work, due to reliance on fixed function video decoders.

I'm not saying that x86/x64 platforms aren't using more and more of these sub-processors, but most of them seem to be closely tied in to the GPU, rather than the CPU. It's obviously hard to do an apples to apples comparison (no pun intended), as the platforms are so different architecturally. My point was simply that Apple is going to have to be on the cutting edge with these sub-processors all the time and if they bet on the wrong standard, then you won't be able to watch some content on your shiny new Mac, as the codec isn't supported and might never be.

It's nigh on impossible to predict what will be the winning standards and as much as most companies bet on H.265, it seems now that, at least to some extent, VP9 and AV1 are gaining popularity due to being royalty free. That means a lot of older ARM based SoCs will be unable to play back this content, due to lack of a decoder, whereas both can be played back on a regular PC just fine.
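To make the codec argument above concrete, here is a minimal sketch of the playback decision being described: use a fixed-function decoder if the chip has one, fall back to software decoding if the CPU is fast enough, otherwise the file won't play. The `Device` type, the `playback_path` function and the two example devices are hypothetical and only illustrate the reasoning; they don't describe any real SoC or media framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    hw_decoders: frozenset          # codecs with a fixed-function decode block
    cpu_can_software_decode: bool   # assumption: CPU is fast enough for software decode


def playback_path(device: Device, codec: str) -> str:
    """Return how a codec would be played back on a device, if at all."""
    if codec in device.hw_decoders:
        return "hardware"
    if device.cpu_can_software_decode:
        return "software"
    return "unsupported"


# Hypothetical devices from the same era, both shipping H.264/H.265 decode blocks.
old_arm_soc = Device("older ARM SoC", frozenset({"H.264", "H.265"}), False)
regular_pc = Device("regular PC", frozenset({"H.264", "H.265"}), True)

for codec in ("H.265", "VP9", "AV1"):
    print(codec, playback_path(old_arm_soc, codec), playback_path(regular_pc, codec))
```

The only difference between the two devices is the software fallback, which is the whole point: a newer codec without a matching hardware block is a dead end on one and merely CPU-heavy on the other.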

Sorry about coming back to the video codec thing all the time, but it really is the simplest example which will continue to cause the biggest problems in the future, as long as we don't have a single standard that everyone agrees to use.

Regardless, ARM processors to date are a lot more limited in terms of what they can do on their own, without support from these additional sub- and co-processors.

I think Apple is betting big on their iOS/PadOS ecosystem when it comes to software. A lot of major software is already available for these platforms and I guess the final OS for the new ARM based Macs will be based a lot more on the mobile OSes and as such, many of the apps are likely to just need UI changes to work on larger and higher resolution screens. That's not a minor task in all fairness, but I believe it's easier to do than re-writing x86/x64 software for ARM.

They're also making some bold claims about developers having to make next to no changes to their software to make it work on the new processors, but I'm not sure I'm buying that. A lot of that also seems to hinge on Rosetta 2 and then you're losing a lot of performance due to the translation layer. I mean, does anyone remember Transmeta? Sure, that was VLIW, not RISC, but it still had a translation layer, which was partially in hardware and as such should be a lot faster than doing it all in software, which is what I presume Rosetta 2 is doing.

I just wonder how long Apple will keep the Mac Pro around. Is the model out now truly the last, or are they going to keep it around for a while based on x86? Seeing how Apple seems to have burned the bridge with Intel, maybe the next Mac Pro will be powered by AMD? Apple hasn't ruled that out, as far as I know.

So it doesn't help if AMD makes highly efficient processors, or if Intel miraculously makes a new, much better processor line - the software side of x86 is even worse than the hardware. I partially blame Intel for forcing companies not to use multicore efficiently, because that would favor AMD's Zen - so outside of 3D rendering there are very few applications that fully use modern PC processors.

Your point about the "migration" of knowledge is an interesting one - I do wonder how many Intel engineers "migrated" over to Apple's CPU engineering division during this time.

Using SPEC 2006... a benchmark that is 14 years old... and has been officially retired by its authors. Would you put any faith in a GPU review that used 3DMark05 to rate a Turing or Navi GPU? Didn't think so.

ARM released its first CPU that could hit 2GHz in 2009 at 40nm. Over a decade later, there are no commercial ARM CPUs that are able to hit even 3GHz at 7nm. That's the reason they jumped on the MOAR CORES bandwagon, because the architecture has hit a very fundamental clock speed wall that they haven't been able to overcome (similarly to Intel with NetBurst, and that uarch wasn't salvageable at the end of the day... makes you wonder...).

[citation needed]

Software is bad because many of the ginormous companies that write the software that everyone uses as standard, are really bad at writing software. What they are good at is marketing and crushing or buying out any competitors so that they don't have to write good software. Adobe is probably the best-known example, but there are many others across all sectors (Sage is one in financials, for example).

When you couple the fact that these companies can't write good software, and the fact that writing multithreaded code is difficult, and the fact that most app workloads aren't easily parallelised, the end result is software that is either slow and inefficient, or even buggier than you'd expect.

But Apple has the "performance" users locked into their ecosystem so that it's too much of a pain to think of going anywhere else - or at least, they think they do.

Like you said, quite possibly this is another long-term Apple strategy, to get rid of the so-called "high-end" machines side of the business and only concentrate on making phones and netbooks (sorry, despite what Apple says, a so-called laptop with an ARM CPU will always be a netbook to me). Considering where the majority of Apple's profits come from, and the fact that the niche "high-end" market likely costs them a lot more relatively, it would make a lot of sense.

Of course there's still the much more limited instruction set, but my impression is that the upcoming ARMv9 ISA will go a long way towards alleviating that and making ARM much more viable as a high performance general purpose architecture, especially by bringing with it alternatives to AVX and similar heavy compute operations. And you can bet your rear end Apple will be adopting that as early as possible (remember how early they were to jump on 64-bit ARM?).

Parallelism in software always has costs and the more threads there are, the higher the cost of overhead climbs. This is why simply throwing more cores at a problem won't necessarily improve performance, especially compared to x86 which implements parallelism in hardware at virtually no cost (besides transistors/power).
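A rough way to see the software overhead argument above is Amdahl's law with an extra per-thread coordination cost added. This is only a sketch: the linear overhead term and the numbers used (90% parallel work, 0.5% of the runtime lost per additional thread) are assumptions picked for illustration, not measurements of any real workload or CPU.

```python
def speedup(threads: int, parallel_fraction: float, overhead_per_thread: float = 0.0) -> float:
    """Amdahl-style speedup with a simple, assumed linear overhead per extra thread."""
    serial = 1.0 - parallel_fraction                  # work that cannot be split
    parallel = parallel_fraction / threads            # work shared across threads
    overhead = overhead_per_thread * (threads - 1)    # assumed coordination cost
    return 1.0 / (serial + parallel + overhead)


# Assumed workload: 90% parallelisable, 0.5% of runtime lost per additional thread.
for n in (1, 2, 4, 8, 16, 32, 64):
    print(n, round(speedup(n, 0.90, 0.005), 2))
```

With these assumed numbers the speedup rises at first and then falls again as the thread count keeps growing, and even with zero overhead the serial 10% caps it below 10x no matter how many cores are added.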

x86 can't work normally in low power envelopes of up to 2-3 watts, the kind of envelope that greatly reduces the carbon footprint of companies that want to deploy such aggressively energy-efficient components.

You are speaking of high performance from x86, but the cost is systems where a single CPU draws over 150 watts, up to 400 watts and more.