Friday, August 14th 2020

Micron Confirms Next-Gen NVIDIA Ampere Memory Specifications - 12 GB GDDR6X, 1 TB/s Bandwidth

Micron have spilled the beans on at least some specifications for NVIDIA's next-gen Ampere graphics cards. In a new tech brief posted by the company earlier this week, hidden away behind Micron's market outlook, strategy and positioning, lie some secrets NVIDIA might not be too keen to see divulged before their #theultimatecountdown event.

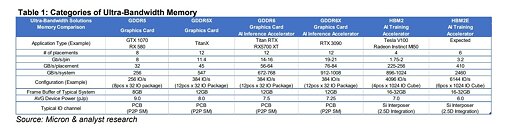

In a comparison of ultra-bandwidth solutions, under the GDDR6X column, Micron lists a next-gen NVIDIA card with the "RTX 3090" product name. According to the spec sheet, this card features a total memory capacity of 12 GB of GDDR6X, achieved through 12 memory chips on a 384-bit wide memory bus. As we saw today, only 11 of these seem to be populated on the RTX 3090. Paired with the GDDR6X chips' specified speeds of 19-21 Gbps, this puts total memory subsystem bandwidth in the 912 - 1008 GB/s range (using 12 chips; 11 chips results in 836 GB/s minimum). It's possible the RTX 3090 product name isn't an official NVIDIA product name, but rather a guess on Micron's part, so don't take it as a factual representation of an upcoming graphics card. One other interesting aspect of the tech brief is that Micron expects its GDDR6X technology to enable 16 Gb (or 2 GB) density chips with 24 Gbps bandwidth as early as 2021. You can read the tech brief - which mentions NVIDIA by name as a development partner for GDDR6X - by following the source link and clicking on the "The Demand for Ultra-Bandwidth Solutions" document.
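For readers who want to check the bandwidth figures, here is a minimal sketch of the arithmetic. It assumes each chip contributes a 32-bit slice of the bus (384 bits across 12 chips); the function name is ours, not Micron's or NVIDIA's.

```python
def bandwidth_gb_per_s(chips: int, gbps_per_pin: float, bits_per_chip: int = 32) -> float:
    """Peak memory bandwidth in GB/s for a given number of populated chips.

    bits_per_chip=32 is an assumption derived from 384 bits / 12 chips.
    """
    bus_width_bits = chips * bits_per_chip
    # Gbps per pin times bus width gives Gb/s; divide by 8 for GB/s.
    return bus_width_bits * gbps_per_pin / 8


for chips in (12, 11):
    for speed in (19, 21):
        print(f"{chips} chips @ {speed} Gbps: {bandwidth_gb_per_s(chips, speed):.0f} GB/s")
```

With 12 chips this gives 912 and 1008 GB/s at 19 and 21 Gbps respectively; with 11 chips the 19 Gbps figure drops to 836 GB/s, matching the numbers quoted above.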

Source:

Micron

53 Comments on Micron Confirms Next-Gen NVIDIA Ampere Memory Specifications - 12 GB GDDR6X, 1 TB/s Bandwidth

On a dedicated graphics card, VRAM is a separate address space, but with a modified driver you should be able to have full access to it.

The reason why swapping VRAM to RAM is slow is not bandwidth, but latency. Data has to travel both the PCIe bus and the system memory bus, with a lot of syncing etc.

Why would a company make a game now that requires DirectX 14, which is not supported by any hardware? Nobody would be able to buy it.

www.accton.com/Technology-Brief/the-challenge-of-pam4-signal-integrity/

Edit: Higher tier, higher VRAM capacity SKUs will use slower GDDR6 with higher density modules.

I hope Samsung is doing the 3090 VRAM chips.

And that's how the memory issues are sorted.

Time will tell though.

It's indeed way worse if you don't have enough system RAM, as you have to swap, but it's still bad on a GPU, as the latency from RAM (even if you have enough) is too high for smooth gameplay.

You want your GPU to have everything it needs for the next 5 or so seconds.

Even today, one of the low-hanging fruits for better visual quality without involving too much calculation is better and more textures. RAM can also be used to store temporary data that will need to be reused later in the rendering or in future frames.

CGI can use way more than 12 GB of textures.

But quantity is not the only thing to consider. Speed is just as important. There's no point in having a 2 TB SSD as GPU memory.

But in the future, GPUs might have an SSD slot on board to be used as an asset cache (like some Radeon Pro cards already have).

The key here is the memory bus width. I think 16 GB would have been perfect but without a hack like the 970, that would be impossible and I'm not sure they want to do that on a flagship GPU.
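To illustrate the bus-width point, here is a rough sketch of which capacities fall out of a given bus width. It assumes one chip per 32-bit slice of the bus and chip densities of 1 GB (8 Gb) and 2 GB (16 Gb), and it deliberately ignores clamshell and mixed-density layouts; the function name is made up for this example.

```python
def capacity_options_gb(bus_width_bits: int, densities_gb=(1, 2), bits_per_chip: int = 32):
    """Capacities reachable with one identical chip per 32-bit slice of the bus.

    Assumptions: 32 bits per chip, uniform density across all chips,
    no clamshell mode and no asymmetric (970-style) partitioning.
    """
    chips = bus_width_bits // bits_per_chip
    return sorted(chips * density for density in densities_gb)


for width in (256, 352, 384, 512):
    print(f"{width}-bit bus: {capacity_options_gb(width)} GB")
```

Under these assumptions a 384-bit bus lands on 12 or 24 GB, while 16 GB only appears on 256-bit or 512-bit buses, which is why it would take an asymmetric layout to get there on this card.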

Most implementations of resource streaming so far have relied on fetching textures based on GPU feedback, which results in "texture popping" and stutter. This approach is a dead end, and will never work well. The proper way to solve it is to prefetch resources into a cache, which is not a hard task for most games, but still something which needs to be tailored to each game. The problem here is that most game developers use off-the-shelf universal game engines and write no low-level engine code at all.

It may, but I'm very skeptical about the usefulness of this. It will be yet another special feature which practically no game engines will implement well, and yet another "gimmick" for game developers to develop for and a QA nightmare.

But most importantly, it's hardware which serves no need. Resource streaming is not hard to implement at the render engine level. As long as you prefetch well, it's no problem to stream even from an HDD, decompress and then load into VRAM.
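As a rough illustration of the prefetch-into-a-cache idea, here is a minimal synchronous sketch. Everything in it is hypothetical: `PrefetchCache`, `fake_load` and the asset names are made up, and a real engine would load asynchronously and predict upcoming assets from the camera path or level layout.

```python
from collections import OrderedDict


class PrefetchCache:
    """Tiny LRU cache that can be filled ahead of time (hypothetical sketch)."""

    def __init__(self, load, capacity):
        self._load = load          # callable: asset name -> decoded data
        self._capacity = capacity  # maximum number of cached assets
        self._items = OrderedDict()
        self.misses = 0            # demand loads, i.e. potential stutter

    def _insert(self, key, data):
        self._items[key] = data
        self._items.move_to_end(key)
        while len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict least recently used

    def prefetch(self, keys):
        """Load assets we expect to need soon, before the renderer asks."""
        for key in keys:
            if key in self._items:
                self._items.move_to_end(key)
            else:
                self._insert(key, self._load(key))

    def get(self, key):
        """Return an asset; a miss here is what shows up as popping."""
        if key not in self._items:
            self.misses += 1
            self._insert(key, self._load(key))
        self._items.move_to_end(key)
        return self._items[key]


def fake_load(name):
    # Hypothetical stand-in for "read from disk and decompress".
    return f"pixels:{name}".encode()


cache = PrefetchCache(fake_load, capacity=4)
cache.prefetch(["rock", "grass"])  # predicted to be needed soon
cache.get("rock")                  # already cached, no stall
cache.get("lava")                  # not predicted, loaded on demand
print("demand misses:", cache.misses)
```

The quality of the prediction passed to `prefetch` is what has to be tailored per game; the cache itself is the easy part.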

As for an SSD texture cache, time will tell. It's already a game changer on pro cards for offline rendering. SSDs are becoming cheap, and adding 1 TB to a $600 video card might end up being a minor portion of the total price.

There are advantages to having it on the GPU. Latency is lower. The GPU could also handle the I/O instead of the CPU, and the bandwidth between the CPU and the GPU could be used for something else. The GPU could also use it to store many things, like more mipmaps, more levels of detail for geometry, etc. The Unreal Engine 5 demo demonstrated what you can do with a more detailed working set.

And I think the fact that most gaming studios use third-party engines is better for technology adoption than the opposite. The big engine makers have to work on getting the technology into the engine to stay competitive, and the studios only have to implement it while they create their games.

Time will tell.

While SSDs of varying quality are cheap these days*, having one on the graphics card introduces a whole host of new problems. Firstly, if this is going to be some kind of "cache" for games, then it needs to be managed somehow, and the user would probably have to go in to delete or prioritize it for various games. Secondly, having it on a normal SSD and going through RAM has a huge benefit: you can apply lossy compression (probably ~10-20x), decompress it on the CPU and send the decompressed data to the GPU. This way, each large game wouldn't take up >200 GB. To do the same on the graphics card, it would require even more unnecessary dedicated hardware. A graphics card should do graphics, not everything else.

*) Be aware that most "fast" SSDs only have a tiny SLC SSD that's actually fast, and a large TLC/QLC SSD which is much slower.

These big universal engines leave very little room to utilize hardware well. There are barely any games properly written for DirectX 12 yet, so how do you imagine more exotic gimmicks will end up?

With every year that passes, these engines get more bloated. More and more generalized rendering code means less efficient rendering. The software is really lagging behind the hardware.

Micron does stack up launch issues with their new GDDR stuff. Pascal also had a mandatory update due to instability on GDDR5X. Additionally, it's well known that Samsung chips have been better clockers for the past gen(s).

I don't recall issues with GDDR5X... link me so I can see? :)

Overclocking is also beyond my purview, but that is true. My point was simply to clarify that both Micron and Samsung equipped cards had the same issue.

EDIT: correction; the title says GDDR5X, but the issue was on GDDR5. My bad.