Friday, January 29th 2021

AMD Files Patent for Chiplet Machine Learning Accelerator to be Paired With GPU, Cache Chiplets

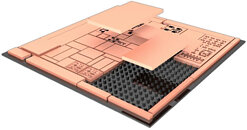

AMD has filed a patent describing an MLA (Machine Learning Accelerator) chiplet design that can be paired with a GPU (such as RDNA 3) and a cache unit (likely a version of AMD's Infinity Cache, which debuted with RDNA 2, moved off the GPU die) to create what AMD is calling an "APD" (Accelerated Processing Device). The design would let AMD build a chiplet whose sole function is to accelerate machine learning - specifically, matrix multiplication. That would enable capabilities not unlike those available through NVIDIA's Tensor cores.
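To make "accelerating matrix multiplication" concrete, here is a minimal Python sketch of a tiled multiply-accumulate, the general pattern that hardware matrix units are built around. It is an illustration only, not the design described in the patent; the function name `matmul_tiled` and the `tile` parameter are made up for this example.

```python
def matmul_tiled(a, b, tile=4):
    """Multiply matrices a (n x k) and b (k x m) one tile at a time.

    Illustrative only: 'tile' is a hypothetical block size, not a value
    taken from the patent or from any specific hardware.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions of a and b do not match")
    out = [[0.0] * m for _ in range(n)]
    # Walk over output tiles, then over the shared dimension in tile steps.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # Multiply-accumulate one small block into the output tile.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = out[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        out[i][j] = acc
    return out
```

A dedicated accelerator would execute the innermost block as a fixed-function operation rather than as loops, which is where the speedup over general-purpose shader code comes from.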

This could give AMD a modular way to add machine-learning capabilities to several of its designs by including such a chiplet, and might be AMD's route to hardware acceleration of a DLSS-like feature. It would avoid the drawbacks of implementing the accelerator on the GPU die itself - a larger die, and with it higher cost and lower yields - while also letting AMD deploy it in products other than GPUs. The patent describes the possibility of different manufacturing technologies being employed in the chiplet-based design - harkening back to the I/O modules in Ryzen CPUs, which are manufactured on a 12 nm process rather than the 7 nm one used for the core chiplets. The patent also describes acceleration of cache requests from the GPU die to the cache chiplet, and on-the-fly use of that chiplet either as actual cache or as directly addressable memory.
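The cost-and-yield argument can be illustrated with the simple Poisson yield model, in which the fraction of good dies is exp(-defect density x die area). The sketch below is a rough illustration under that assumption; the die areas and the defect density are hypothetical numbers picked for the example, not figures from AMD or the patent, and packaging and interconnect costs are ignored.

```python
import math


def die_yield(area_mm2, defects_per_cm2):
    """Poisson yield model: fraction of dies with zero defects."""
    return math.exp(-defects_per_cm2 * area_mm2 / 100.0)


def silicon_per_good_die(area_mm2, defects_per_cm2):
    """Wafer area (mm^2) spent, on average, to obtain one working die."""
    return area_mm2 / die_yield(area_mm2, defects_per_cm2)


# Hypothetical inputs, chosen only to show the trend.
GPU_AREA = 500.0   # mm^2, assumed GPU die
MLA_AREA = 100.0   # mm^2, assumed machine learning accelerator logic
DEFECTS = 0.1      # defects per cm^2, assumed

# One large die that contains both the GPU and the accelerator.
monolithic = silicon_per_good_die(GPU_AREA + MLA_AREA, DEFECTS)
# Two separate dies that are tested individually before packaging.
chiplets = (silicon_per_good_die(GPU_AREA, DEFECTS)
            + silicon_per_good_die(MLA_AREA, DEFECTS))

print(f"monolithic: {monolithic:.0f} mm^2 per good part")
print(f"chiplets:   {chiplets:.0f} mm^2 per good part")
```

Under this model the split design always needs less wafer area per working part, because a defect only scraps the smaller die it lands on. Whether that advantage survives real packaging costs is outside what this sketch covers.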

Sources:

Free Patents Online, via Reddit

28 Comments on AMD Files Patent for Chiplet Machine Learning Accelerator to be Paired With GPU, Cache Chiplets

as in....

Let's hope this AI doesn't pick the street lingo of the LoL community!

Chicklets Machine...

Yeah, there are some LUTs on those FPGAs, but the actual computational girth comes from these babies: www.xilinx.com/support/documentation/white_papers/wp506-ai-engine.pdf

Would be nice if AMD got something oneAPI-ish too.

In all seriousness, this just sounds like AMD doing more of the same thing they've been working towards for years. You have an I/O chiplet, and a CPU chiplet, and soon we'll have GPU chiplets and AI accelerator chiplets. We've already seen that this can scale well, so this should be an exciting prospect for future products. An APU with one of all of the above would be one hell of a chip.

Remember "Super ALUs" ?? Yeah, they're not around. AMD decided against them for whatever reason. Maybe it wasn't as good as other techniques they got, or maybe they ran some simulations and it could have made things worse. Just wait for the whitepapers to come out.

MOAR CHIPLUTZ

github.com/ROCmSoftwarePlatform/tensorflow-upstream Been supported via ROCm for a couple of years now.

PyTorch has been supported since ROCm 3.7; 4.0.1 is current. github.com/aieater/rocm_pytorch_informations

Nvidia's stuff is definitely a bit more plug and play, and AMD's engineering support is just now ramping up; they have a long way to go to catch up.

There are a lot of interesting accelerators on the market now, it's a fun time.

If it's the old dual-core designs with two chips, they were two full-blown single-core chips on one package. Not quite the same as a chiplet.