Friday, June 4th 2021

AMD Breaks 30% CPU Market Share in Steam Hardware Survey

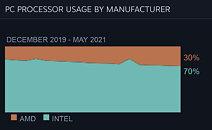

Today, Valve updated its Steam Hardware Survey with the latest information about the market share of different processors. The Steam Hardware Survey is a good indicator of market movements, as it samples users across the millions of gaming systems that run Valve's Steam gaming platform. Valve processes the collected information and reports it to the public as market share figures for different processors. The Steam Hardware Survey for May 2021 gives us some interesting data to look at. Most notably, AMD gained 0.65 percentage points of CPU market share, rising from the previous 29.48% to 30.13%. This is a major move for the company, which had not held more than 30% of the CPU market share in the Steam Survey in years.
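As a quick sanity check on those figures, here is a minimal Python sketch that separates the change in percentage points from the relative growth of the share. The function name `share_change` is purely illustrative and is not part of any Steam or Valve tooling; the two input values are the CPU shares quoted above.

```python
def share_change(previous: float, current: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    points = current - previous          # absolute difference between the two shares
    relative = points / previous * 100   # growth relative to the previous share
    return points, relative


# AMD CPU share in the Steam Hardware Survey: April 2021 vs. May 2021
points, relative = share_change(29.48, 30.13)
print(f"{points:.2f} percentage points, {relative:.2f}% relative growth")
```

In other words, the 0.65 figure is a difference in percentage points; measured against AMD's previous share, it is growth of a little over 2%.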

The Steam Survey also tracks the market share of graphics cards, and we got to see a slight change there as well. As far as GPUs go, AMD now holds 16.2% of the market, down from the previous 16.3%. For more details about the Steam Hardware Survey for May 2021, please check out Steam's website here.

Source:

Steam Hardware Survey

104 Comments on AMD Breaks 30% CPU Market Share in Steam Hardware Survey

Hardware itself matters so little. I still remember that some crazy man ported the whole of Civilization 5 (and it wasn't a cut-down version either, you could do anything that you could on the desktop version, but on a phone) to the Nokia 5230 running Symbian S60v5. There were some others on it too, like Asphalt 6, Toon Warz, some Daytona USA clone and a Riptide GP clone. And what did that Nokia actually have? A 400MHz single core chip, a resistive touch screen (no multitouch), 128MB RAM and 70MB storage. So it was a complete potato and ass to develop for, as it needed some optimizations to make games work without multitouch. But anyway, it still got some technically impressive titles despite all that. And now we have all the power, all the specs and no proper titles, and that's why mobile gaming is pretty much dead aside from the occasional P2W title.

Well, with that AMD sure sold their chance, even if it was small, and what they sold to Qualcomm eventually became Adreno, and today it's the GPU in the mobile space. It's crazy good and it sells very well. Even if Qualcomm had actually made their own GPU from zero, it might have taken them too long and they would have ended up like Mali. nVidia wasn't too bad with their Tegra stuff, but the chips ran way too hot, weren't exactly good for battery life and were pretty much gone after the X1. Had they actually developed something proper, they could have succeeded. Anyway, as a user of a Tegra 3, which ran at 90C while running GTA Vice City, I surely didn't enjoy having my hands fried. At least nV made Tegra 3 chips, which were for a while the chips to get. But yeah, considering how "well" AMD was doing back then, maybe it's good that they didn't have mobile chips to care about. The FX and Phenom II era was the worst malaise era AMD ever had, and if not for Ryzen and console sales, they would surely have been bankrupt by now.

Not sure I agree with that. See the link for Qualcomm's latest top of the range GPU performance. Make sure you read the comment below the benchmarks, as you'll see that the device in question is a lot like your old Tegra 3 device.

www.anandtech.com/show/16447/the-xiaomi-mi-11-review/3

Qualcomm is only better than the rest of the competition that runs Android.

ARM hasn't managed to come up with a really good GPU yet and we've yet to see a full implementation from Imagination Technologies, as for some reason, their GPUs only end up as low-end implementations.

Apple is rather surprisingly the market leader, after making their own GPU from scratch (or at least so they claim).

AMD would've been bankrupt now if it wasn't for the fact that they bought ATI.

Anyway, the Nexus 7 was such garbage that without the marketing efforts at the time it would never have been talked about. It had a shit ton of other problems. Most of them were just shoddy manufacturing and shoddy software support. Looking back at those years, if I could go back to those times I would never ever buy a Nexus 7 again. It's essentially the British Leyland of tablets.

As if they are selling to someone else. They are clearly dominating and if you want the best chips, it's Snapdragon only nowadays. The best midrange chip is Snapdragon too.

Who knows. RTG alone isn't great, it's the consoles and APUs that are their biggest success.

Speaking about GPUs, AMD's offering is not so good and availability is even worse than Nvidia's, so the result is hardly a surprise.

70° for a 5800X is quite normal and there is nothing strange about it.

50° on a 10700 means you are running it at the ridiculous Intel 65W power limit, which means performance is way lower than a 5800X.

An unlocked 10700 (I’m running one in my son’s PC) is no different from a 5800X.

In my house we now have 3 computers, with a 10700, a 5800X and a 5900X, so I can compare them.

5800X is quite hot, but not unreasonably so. Thermal density on that tiny 8 core chiplet is the reason, but it still runs below 80° in stress tests and around 72/73° maximum while gaming in most demanding games.

The 10700 has a lower temperature only if you keep it at the ridiculous Intel long power limit of 65W, which can destroy performance. I unlocked it and it reaches 75° in a stress test, with much higher power consumption than the Ryzen.

The 5900X is the best in this respect: under a stress test it stays around 70° with PBO active. But it has a strange behavior in some games, where the single core clock sometimes reaches almost 5 GHz, so there are thermal spikes around 74° (average temperature during gaming is well below 70°).

It's obviously nice to have steady business from a customer like Apple, but as with consoles, you're not looking at much margin in this kind of business.

What it likely does though is make the books look nice and it gives AMD a better negotiating position with everything from foundry partners to material makers, as they have more volume than the next guy, which means steady business for everyone else too.

S3 was bought by VIA in 2001, so clearly that wasn't on the table, as AMD only bought ATI in 2006. S3 is owned by HTC these days, for some silly reasons that I could explain, but it has mainly to do with the Taiwanese staff bonus system and some graphics patents and a lawsuit.

XGI was spun out of SiS and Trident, which had been making graphics chips since the late 80's in Trident's case.

Could AMD have bought XGI? Maybe, but unlikely as they were still part of SiS.

Could AMD have bought SiS? Possibly, but it's much harder to buy and integrate two culturally very different companies.

Could AMD have bought VIA? Possibly, but same as above, although I guess that wouldn't have been allowed, as VIA owns Centaur Technology which has an x86 license, so...

ATI was after all Canadian and would've been much easier culture wise to integrate with, vs. any Asian company.

After well over a decade of living in Taiwan, I still don't understand how or why things are the way they are here, as there are so many things that aren't done in a sensible and logical way, and that applies to everything from carpentry to marketing to product development and business management.

It's not all about money and it's also harder to buy a company in a different part of the world, due to vastly different regulation.

As for Matrox, they're still an independent company based in Canada and I doubt they would've been interested in an offer from AMD.

Also, I presume you've never used a graphics product from S3 or XGI, even less so SiS, if you think ATI was bad. Man, you have no idea...

But I'm glad you're confident AMD could've done better elsewhere, yet know so little about the industry and what has been going on. Some of us have actually worked in the industry and tested all these products and more that you most likely have never heard of. Did you know Micron Technology bought a rather decent graphics chip maker called Rendition back in 1998?

If we're going to speculate wildly here, AMD could've bought Imagination Technologies and gotten their PowerVR technology, or simply licensed their graphics technology, as Apple used to.

ATI did also buy a handful of different graphics chip makers, such as ArtX (they made the graphics chip for the GameCube and worked on integrated graphics with ALi, a Taiwanese chipset maker), BitBoys (out of Finland, which claimed to have some revolutionary stuff that never made a real appearance), Tseng Labs (which was Trident's main competitor) and some other smaller players.

Regardless, at the time that AMD bought ATI, there weren't many players left to choose from. I guess they could've bought 3Dlabs from Creative Labs, but maybe that deal was never on the table. Beyond that, I don't know of anyone else that was still in business around that time so...

Anyhow, the TLDR; is this, AMD didn't really have any other real choice than ATI, regardless of what you've read or think.

Whatever flaws Samsung may have, Nvidia using them for the Ampere line turned into a major blessing for Nvidia.

Also, some nations simply don't allow foreign takeover of local companies, or larger than a certain percentage of foreign ownership.

Oh and Geode was National Semiconductor and Cyrix, AMD got involved three years later.

I guess that's what happens when you're the best at what you're doing and everyone wants to make their products with you.

I don't think they ever posted proof of what they are saying.