Monday, October 13th 2008

Core i7 940 Review Shows SMT and Tri-Channel Memory Let-down

As the computer enthusiast community gears up for Nehalem November, with reports suggesting a series of product launches for both Intel's Core i7 processors and compatible motherboards, industry observer PC Online.cn has already published an in-depth review of the Core i7 940 2.93 GHz processor. The processor is based on the Bloomfield core, and essentially the Nehalem architecture that has been making news for over a year now. PC Online went right to the heart of the matter, evaluating the 192-bit wide (tri-channel) memory interface, and the advantage of HyperThreading on four physical cores. In the tests, the 2.93 GHz Bloomfield chip was pitted against a Core 2 Extreme QX9770 operating at both its reference speed of 3.20 GHz, and underclocked to 2.93 GHz, so that a clock-for-clock comparison could be made.

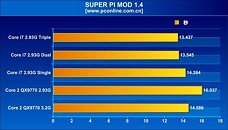

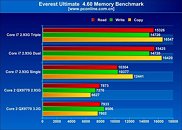

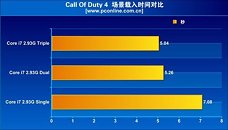

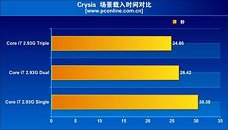

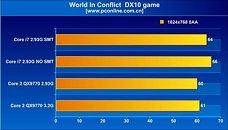

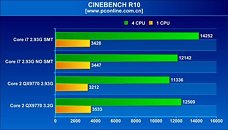

The evaluation found that the performance increments tri-channel offers over dual-channel memory, in real-world applications and games, are just about insignificant. Super Pi Mod 1.4 shows only a fractional lead for tri-channel over dual-channel, and the trend continued with Everest Memory Benchmark. On the brighter side, the integrated memory controller does offer improvements over the previous generation setup, in which the northbridge handled memory. Even in games such as Call of Duty 4 and Crysis, tri-channel memory did not shine.

As for the other architectural change, simultaneous multi-threading, which makes its comeback on the desktop scene with the Bloomfield processors offering as many as eight logical processors for the operating system to talk to, it appears to be a mixed bag in terms of performance. The architecture did provide massive boosts in WinRAR and Cinebench tests. Across tests, enabling SMT brought in performance increments of roughly 10~20% with general benchmarks that included Cinebench, WinRAR, TMPGEnc, and Fritz Chess. With 3DMark Vantage, SMT provided a very significant boost to the scores, with increments of about 25%. It didn't do the same for current generation games such as Call of Duty 4, World in Conflict and Company of Heroes. What's more, the games didn't seem to benefit from Bloomfield in the first place. The QX9770 underclocked to 2.93 GHz outperformed the i7 940, both with and without SMT, in some games.
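For a sense of scale, the peak bandwidth of a dual-channel versus a tri-channel interface can be worked out with simple arithmetic. The sketch below assumes each DDR3 channel is 64 bits wide (which is what makes three channels 192 bits), and it uses a transfer rate of 1066 MT/s purely as an illustrative value; the memory speed is an assumption here, not a figure taken from the review, and the function names are hypothetical.

```python
# Theoretical peak bandwidth of a multi-channel DDR memory interface.
# Assumptions (not from the review): 64-bit channels, 1066 MT/s memory.

CHANNEL_WIDTH_BITS = 64  # width of one DDR3 channel


def interface_width_bits(channels: int) -> int:
    """Total data-bus width for the given number of channels."""
    return channels * CHANNEL_WIDTH_BITS


def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: float) -> float:
    """Peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = interface_width_bits(channels) / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


if __name__ == "__main__":
    for channels in (2, 3):
        width = interface_width_bits(channels)
        bandwidth = peak_bandwidth_gb_s(channels, 1066)  # assumed speed
        print(f"{channels} channels: {width}-bit, {bandwidth:.1f} GB/s peak")
```

These are ceilings on paper only. A 50% higher ceiling translates into real gains only for workloads that actually come close to saturating the dual-channel figure.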

Source:

PC Online


91 Comments on Core i7 940 Review Shows SMT and Tri-Channel Memory Let-down

Video encoders are going to cream themselves, at least.

I'm not sure what the i7 CPU will cost, but isn't comparing it to the Extreme Core 2 model giving it a bit of a disadvantage? Wouldn't the performance difference be a lot smaller vs a lower-cached CPU?

edit:

load and idle power graph.

One thing that should go wild on that thing is photoshop

Ultimately, bandwidth doesn't matter so long as it doesn't run out. For instance, interstate highways are great--until they have a traffic jam. As such, memory never really weighs very heavily into benchmarking. It only has a major impact if it errors or if there is a traffic jam--both are for the worse. So in regards to memory, uneventful is a great thing.
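The traffic-jam argument can be put as a toy model: what a program gets is its demand, capped at what the memory interface can supply. This is only a sketch; the function name and all of the numbers below are made up for illustration and are not measurements.

```python
def delivered_gb_s(demand_gb_s: float, capacity_gb_s: float) -> float:
    """Bandwidth actually delivered: the demand, capped at capacity."""
    return min(demand_gb_s, capacity_gb_s)


if __name__ == "__main__":
    # Hypothetical capacities for a dual- and a tri-channel setup.
    dual, tri = 17.0, 25.6
    # Hypothetical workloads: one light, one heavy.
    for demand in (5.0, 20.0):
        d = delivered_gb_s(demand, dual)
        t = delivered_gb_s(demand, tri)
        print(f"demand {demand} GB/s -> dual {d}, tri {t}, gain {t - d}")
```

With the light workload both setups deliver the same thing, so the extra channel shows no gain; only the heavy workload, which jams the dual-channel road, benefits.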

Now directly to your question: tri-channel is addressing the need of the processor more than anything else. In order to add another two DIMMs, they increase the distance and therefore the latency. Specific figures such as FPS go down slightly in order to prevent a disaster (traffic jam). I think they are being very pro-active on this whole memory bandwidth issue, but I really don't like the way it is progressing (and has been progressing for almost a decade). This small decrease in performance with tri-channel is basically universal until they try to reinvent memory.

Look at a video card such as the 8600GT and its 128 bit memory bus. You can slap more ram on it (256/512/1024MB), and that will prevent running out of ram (texture swapping) _without_ improving performance.

You could also add more channels with the same amount of ram, which gives you better performance, but not if the game/application was designed for a 128 bit bus/256MB of ram.

Two examples:

128 bit bus with 1GB of ram, or a 512 bit bus with 256MB of ram.

Tri channel will help prevent bottlenecks as we all go 8GB+ in our systems, and i think we need benchmarks with MORE than a measly 2GB of ram, before calling it a failure.

I believe Core i7 (or at least the concepts that it is pushing for) won't go mainstream until Microsoft releases a 64-bit only operating system. I just hope AMD takes its cue from Intel and moves in the same direction. AMD will get kicked in the balls again if they don't.

Today's games do not fit that category; DirectX 11 games will (native multithreading), and any form of media encoding definitely will.

I bet VMware will run uber fast on these systems.

It is awkward how manufacturers are pushing server technology into home computers. I mean, ten years ago, it was all about the clockspeed. Today, they have realized that clockspeed isn't virtually unlimited and have looked to mainframe servers for how to fix it: more processors. Because more processors means more of everything (sockets, DIMMs, power, etc.), they had to find a way to make it more affordable and marketable. The answer came in the form of multiple cores on the same CPU die. The GPU crowd is now realising the same thing, but there is a great deal of latency involved. Once the GPU crowd jumps on the same multi-core bandwagon the CPU crowd has been on for several years, games will start to benefit from CPUs with lots of cores.

DDR is such because it works in the clock's low and high states. That is Double Data Rate, so again how do you do Quad/Octo data rate if your clock signal only has two states?

As I see it, multiplexing the clock signal in time is not an option, because isn't that the same as just running the memory at twice the speed?

EDIT: :banghead: Forget about QDR, I'm stupid, I forgot you still have rising and falling edges like in Intel's Quad pumped FSB. I still fail to see how you could do 8 ops per cycle though.

HDR :)

History tells us anything made by Rambus is doomed to failure in the PC world; just look at RDRAM and the brief stint Intel had with it. Rambus specializes in high performance, soldered in situations. They're kind of like Apple come to think of it. They make a product, don't care what others say about it, and just expect people to come crawling to them for licensing.

It's JEDEC (a forum including all major processor and memory manufacturers) that has to decide when it's time to move to a new memory technology. I'm afraid we're probably going to be stuck with DDR derivatives until photon processors come out. :shadedshu

1- I need more sleep.

2- I was right in the first place. Falling and rising edges are still ONLY 2. Left edge of low state is the same as the right one of high state. :roll: Quad Pumping is done using 2 clocks with 90° phase difference.

3- XDR AFAIK uses a ring bus to access the different memory banks, so it is effectively multiplexing the data signals, and it's a completely different approach to SDRAM. It's also different from what I said about multiplexing the clock signal, which would be pointless IMO: if memory can run faster, just run it faster; IMO the FSB could easily keep up. In fact I have always considered the FSB was so "slow" compared to the CPU clock because the memory was even slower. And if you are doubling the memory bits/banks per clock, why multiplex the external clock (or use two differently phased signals) to be able to use them and not just double the lanes?

That being said, I think I have to elaborate more on my question. Using XDR as main memory is out of the question; we could do that (with its pros and cons), but that wouldn't be using QDR/ODR SDRAM. My question is how and why you would use a quad-pumped synchronous RAM when, to do that, you have to double the accessible bits per clock of your memory chips without obtaining the benefits of a fully parallel design, if you could just run the memory twice as fast. I'm going to make a diagram of what I say because I don't know how to explain it better now and I'm sure no one will understand this mess. :o

A circuit can know when it is in a high/low state OR when it is changing from low to high and vice versa, as is the case with DDR. But once it is in one state, how does it know it has to perform another task? It can't until another edge comes.
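The edge-counting argument in this thread can be checked with a few lines of code. The sketch below is an idealized model, not a description of any real memory or FSB implementation: it assumes perfect 50% duty-cycle clocks, expresses phase as a fraction of one period (so 90° is 1/4), and uses hypothetical function names. It lists where the edges fall within one period for a single clock, and for two clocks a quarter period apart as suggested above for quad pumping.

```python
from fractions import Fraction


def edge_times(phase: Fraction) -> list[Fraction]:
    """Edge positions in one period [0, 1) of a 50% duty-cycle clock.

    `phase` is the clock's offset as a fraction of a period.
    The rising edge sits at `phase`, the falling edge half a period later.
    """
    rising = phase % 1
    falling = (phase + Fraction(1, 2)) % 1
    return sorted({rising, falling})


def transfer_slots(phases: list[Fraction]) -> list[Fraction]:
    """All distinct edge positions offered by a set of phase-shifted clocks."""
    slots: set[Fraction] = set()
    for phase in phases:
        slots.update(edge_times(phase))
    return sorted(slots)


if __name__ == "__main__":
    single = transfer_slots([Fraction(0)])                  # one clock
    quad = transfer_slots([Fraction(0), Fraction(1, 4)])    # two clocks, 90 degrees apart
    print("one clock :", [str(t) for t in single])
    print("two clocks:", [str(t) for t in quad])
```

In this model one clock gives exactly two edges per period, which is the DDR case, while the second clock adds two more at the quarter and three-quarter points, giving four evenly spaced transfer slots without raising the clock frequency itself.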