Though the NVIDIA nForce 650i Ultra MCP was launched at the end of last year it took NVIDIA some time to come up with an actual reference design. Again

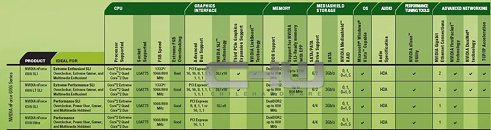

ChileHardware were the first in the business to show some facts. The 650i ultra is the least feature-packed chipset out of the 600i family, with the absence of SLI being the biggest drawback. But if you don't intend to go for a SLI setup then the board will please you with full Core 2 Extreme, Core 2 Duo and Core 2 Quad support. Furthermore we are talking about dual-channel DDR2-800 (four DIMM sockets), a single PCIe x16, two PCIe x1 and three PCI (32bit/33MHz) slots, four SATA II ports and a single PATA port (HDD or optical drive).
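To put the memory and PCI figures in perspective, peak theoretical bandwidth is simply transfer rate times bus width times channel count. The sketch below is only a back-of-the-envelope calculation: the helper name `peak_bandwidth_mb_s` is hypothetical, it assumes the standard 64-bit width per DDR2 channel, and the results are theoretical ceilings rather than measured throughput.

```python
def peak_bandwidth_mb_s(mega_transfers_per_s, bus_width_bits, channels=1):
    """Peak theoretical bandwidth in MB/s (hypothetical helper).

    mega_transfers_per_s: millions of transfers per second
    bus_width_bits:       width of one channel in bits
    channels:             number of channels working in parallel
    """
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * bytes_per_transfer * channels


# Dual-channel DDR2-800: 800 MT/s, 64 bits per channel (assumed), two channels.
ddr2_800_dual = peak_bandwidth_mb_s(800, 64, channels=2)

# Classic PCI: 32-bit bus at roughly 33.33 MHz, one transfer per clock,
# shared by all devices on the bus.
pci_32_33 = peak_bandwidth_mb_s(33.33, 32)

print(f"Dual-channel DDR2-800: {ddr2_800_dual} MB/s")  # 12800 MB/s
print(f"PCI 32-bit/33 MHz:     {pci_32_33:.0f} MB/s")  # about 133 MB/s
```

The three PCI slots share that single bus, so the PCI figure is a combined ceiling rather than a per-slot one.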

The ATX I/O panel is not that crowded either: besides the obligatory PS/2 keyboard and mouse ports, there are four USB 2.0 ports, a Gigabit Ethernet connector and the eight-channel audio connectors.

The Gigabit port supports NVIDIA's FirstPacket prioritizing technology in order to deliver the lowest pings possible while gaming. The SATA ports support RAID arrays (RAID 0, 1, 0+1 and 5), and onboard you will find four additional USB 2.0 pinouts to be used with a USB bracket. The price will be in the US$50-100 range, and companies like EVGA, XFX, ECS and Biostar will soon adopt this design into their product families. All in all, a very nice budget mainboard that has its strengths.