NVIDIA Does a TrueAudio: RT Cores Also Compute Sound Ray-tracing

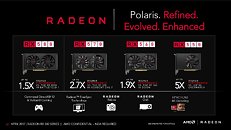

Positional audio, like Socialism, follows a cycle of glamorization and investment every few years. Back in 2011-12, when AMD held a relatively stronger position in the discrete GPU market along with GPGPU superiority, it gave a lot of money to GenAudio and Tensilica to co-develop TrueAudio, a GPU-accelerated positional audio DSP. The technology saw a whopping four game implementations: "Thief," "Star Citizen," "Lichdom: Battlemage," and "Murdered: Soul Suspect." The TrueAudio Next DSP, which debuted with "Polaris," introduced GPU-accelerated "audio ray-casting" technology, which assumes that sound waves, much like light, interact differently with different surfaces, and hence that positional audio can be made more realistic. There were a grand total of zero takers for TrueAudio Next. Riding on the presumed success of its RTX technology, NVIDIA wants to develop audio ray-tracing further.

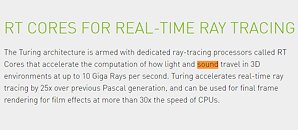

A very curious sentence caught our eye in NVIDIA's micro-site for Turing. The description of RT cores reads that they are specialized components that "accelerate the computation of how light and sound travel in 3D environments at up to 10 Giga Rays per second." This is an ominous sign that NVIDIA is developing a full-blown positional audio programming model as part of RTX, with an implementation through GameWorks. Such a technology, like TrueAudio Next, could improve positional audio realism by treating sound waves like light and tracing their paths from their origin (think speech from an NPC in a game) to the listener, as the sound bounces off the various surfaces in the 3D scene. Real-time ray-tracing(-ish) has so thoroughly captured the imagination of NVIDIA marketing that the company is allegedly willing to replace "GTX" with "RTX" in its GeForce GPU nomenclature. We don't mean to doomsay emerging technology, but 20 years of development in positional audio has shown that it's better left to game developers to create their own technology that sounds somewhat real, and that initiatives from makers of discrete sound cards (a device on the brink of extinction) and GPU makers bore no fruit.
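To make the idea of tracing sound from its origin to the listener concrete, here is a minimal Python sketch of a single bounce. It is not NVIDIA's or AMD's implementation. It uses the textbook image-source method from geometric acoustics, which mirrors the source across a flat surface to find the specular reflection path. The function names, the floor at `y = 0`, the `absorption` factor, and the simple 1/distance gain model are all illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, dry air at roughly 20 °C


def reflect_across_floor(point):
    """Mirror a point across the floor plane y = 0 (hypothetical scene)."""
    x, y, z = point
    return (x, -y, z)


def path(source, listener, absorption=0.0):
    """Return (delay in seconds, relative gain) for a straight-line path.

    Gain follows a simple 1/distance falloff, scaled by whatever
    fraction of energy the surface did not absorb (assumed model).
    """
    distance = math.dist(source, listener)
    return distance / SPEED_OF_SOUND, (1.0 - absorption) / distance


def trace_paths(source, listener, floor_absorption):
    """Return the direct path and the single floor-bounce path."""
    direct = path(source, listener)
    # The reflected path has the same length as a straight line
    # from the mirrored ("image") source to the listener.
    image = reflect_across_floor(source)
    reflected = path(image, listener, floor_absorption)
    return direct, reflected


if __name__ == "__main__":
    npc = (0.0, 1.0, 0.0)     # speaker standing 1 m above the floor
    player = (4.0, 1.0, 0.0)  # listener 4 m away at the same height
    direct, reflected = trace_paths(npc, player, floor_absorption=0.3)
    print(f"direct:    {direct[0] * 1000:.2f} ms, gain {direct[1]:.3f}")
    print(f"reflected: {reflected[0] * 1000:.2f} ms, gain {reflected[1]:.3f}")
```

The bounce arrives slightly later and quieter than the direct sound, which is the cue a listener uses to sense the room. A real-time system would repeat this for many rays and surfaces per frame, which is where hardware ray-traversal acceleration would come in.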
