MSI Unveils its GeForce GTX 1650 Series Graphics Cards

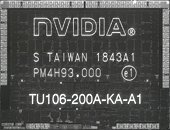

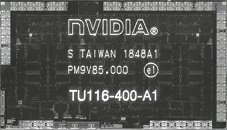

As the world's most popular GAMING graphics card vendor, MSI is proud to announce its new graphics card line-up based on the new GeForce GTX 1650 GPU, the latest addition to the NVIDIA Turing GTX family. The GeForce GTX 1650 has been carefully architected to balance performance, power, and cost, and includes all of the new Turing Shader innovations that improve performance and efficiency, such as Concurrent Floating Point and Integer Operations, a Unified Cache Architecture with larger L1 cache, and Adaptive Shading.

Equipped with excellent thermal solutions, the MSI GeForce GTX 1650 series is designed to provide higher core and memory clock speeds for increased performance in games. MSI's GAMING series delivers the top-notch in-game and thermal performance that gamers have come to expect from MSI. With solid and sharp designs, ARMOR and VENTUS strike a great balance between strong dual-fan cooling and outstanding performance. The AERO ITX is a great option for gamers looking to bring Turing power to a small-form-factor build. With this comprehensive line-up, there is plenty of choice for any build.
