NVIDIA's 20-series Could be Segregated via Lack of RTX Capabilities in Lower-tier Cards

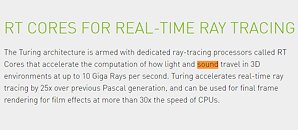

NVIDIA's Turing-based RTX 20-series graphics cards are set to begin shipping on the 20th of September. The most compelling argument for buying them is the leap in ray-tracing performance, enabled by hardware acceleration via the RT cores that have been added to NVIDIA's core design. NVIDIA has been pretty bullish on how this development reinvents graphics as we know it, and is quick to point out the benefits of this approach over other, shader-based approximations of real, physics-based lighting. In a Q&A at the Citi 2018 Global Technology Conference, NVIDIA CFO Colette Kress expounded on the new architecture's strengths - but also touched upon a possible segmentation of graphics cards by ray-tracing capabilities.

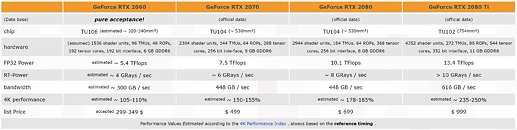

During that Q&A, Kress put Turing's performance at a cool 2x improvement over the 10-series graphics cards, discounting any ray-tracing performance uplift - and when ray tracing is brought into consideration, she said performance has increased by up to 6x compared to NVIDIA's last generation. There's some interesting wording when it comes to NVIDIA's 20-series lineup, though; as Kress puts it, "We'll start with the ray-tracing cards. We have the 2080 Ti, the 2080 and the 2070 overall coming to market," which, in context, seems to point towards a lack of ray-tracing hardware in lower-tier graphics cards (apparently, those based on the potential TU106 silicon and lower-level variants).
