Monday, August 20th 2018

NVIDIA GeForce RTX 2000 Series Specifications Pieced Together

Later today (20th August), NVIDIA will formally unveil its GeForce RTX 2000 series consumer graphics cards. This marks a major change in the brand name, triggered by the introduction of the new RT cores: specialized components that accelerate real-time ray-tracing, a task too taxing for conventional CUDA cores. DNN acceleration requires SIMD components that crunch 4x4x4 matrix multiplication, which is what tensor cores specialize in, while RT cores handle the ray-intersection tests at the heart of ray-tracing. The chips still have CUDA cores for everything else. This generation also debuts the new GDDR6 memory standard, although unlike GeForce "Pascal," the new GeForce "Turing" won't see a doubling in memory sizes.
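The "4x4x4" figure describes a matrix multiply-accumulate, D = A x B + C, on 4x4 matrices: 4 rows, 4 columns, and a shared inner dimension of 4, or 64 multiply-adds per operation, which tensor cores execute as a single hardware step. Below is a minimal sketch of that arithmetic in plain Python, assuming ordinary floats (the hardware works on FP16 inputs); `mma_4x4x4` is a hypothetical name for illustration, not an NVIDIA API.

```python
# Hypothetical illustration of a 4x4x4 matrix multiply-accumulate (D = A x B + C).
# Plain Python floats are an assumption; tensor cores take FP16 inputs and
# accumulate in FP16 or FP32, all in one hardware operation.

Matrix = list[list[float]]

def mma_4x4x4(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A @ B + C for 4x4 matrices (64 multiply-adds in total)."""
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):          # rows of A
        for j in range(n):      # columns of B
            acc = c[i][j]       # start from the accumulator matrix C
            for k in range(n):  # shared inner dimension: the third "4"
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[float(4 * i + j) for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]

d = mma_4x4x4(a, identity, ones)
print(d[0])  # [1.0, 2.0, 3.0, 4.0]
```

A GPU gets its speed by running many such fixed-size operations in parallel rather than looping as this sketch does.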

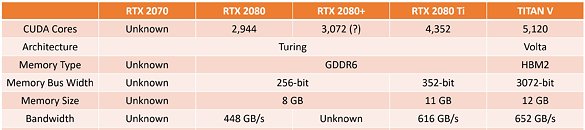

NVIDIA is expected to debut the generation with the new GeForce RTX 2080 later today, with market availability by the end of the month. Going by older rumors, the company could launch the lower-end RTX 2070 and higher-end RTX 2080+ by late September, and the mid-range RTX 2060 series in October. Apparently, the high-end RTX 2080 Ti could come out sooner than expected, given that VideoCardz already has some of its specifications in hand. Not much is known about how "Turing" compares with "Volta" in performance, but given that the TITAN V comes with tensor cores that can [in theory] be re-purposed as RT cores, it could continue on as NVIDIA's halo SKU for the client segment.

The RTX 2080 and RTX 2070 series will be based on the new GT104 "Turing" silicon, which physically has 3,072 CUDA cores and a 256-bit wide GDDR6-capable memory interface. The RTX 2080 Ti is based on the larger GT102 chip. Although the maximum number of CUDA cores on this chip is unknown, the RTX 2080 Ti is reportedly endowed with 2,944 of them, and has a 352-bit memory interface, slightly narrower than what the chip is capable of (384-bit). As we mentioned earlier, NVIDIA doesn't seem to be doubling memory amounts, so we could expect 8 GB for the RTX 2070/2080 series and 11 GB for the RTX 2080 Ti.
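The memory figures follow from the bus widths: each GDDR6 chip has a 32-bit interface, so a 256-bit bus hosts 8 chips and a 352-bit bus hosts 11. The sketch below works through that arithmetic, assuming 8 Gb (1 GB) chips and a 14 Gbps per-pin data rate; neither value comes from the report, and `memory_config` is a hypothetical helper.

```python
# Hypothetical helper: estimate memory capacity and peak bandwidth from bus width.
# Assumptions (not from the report): one 32-bit GDDR6 chip per 32 bits of bus,
# 8 Gb (1 GB) per chip, and a 14 Gbps per-pin data rate.

CHIP_WIDTH_BITS = 32
CHIP_CAPACITY_GB = 1
DATA_RATE_GBPS = 14

def memory_config(bus_width_bits: int) -> tuple[int, float]:
    """Return (capacity in GB, peak bandwidth in GB/s) for a given bus width."""
    chips = bus_width_bits // CHIP_WIDTH_BITS
    capacity_gb = chips * CHIP_CAPACITY_GB
    bandwidth_gb_s = bus_width_bits * DATA_RATE_GBPS / 8  # bits/s -> bytes/s
    return capacity_gb, bandwidth_gb_s

for name, bus in [("RTX 2070/2080 (256-bit)", 256),
                  ("RTX 2080 Ti (352-bit)", 352),
                  ("Full GT102 (384-bit)", 384)]:
    cap, bw = memory_config(bus)
    print(f"{name}: {cap} GB, {bw:.0f} GB/s")
```

Under these assumptions, disabling one 32-bit controller on GT102 is exactly what turns a 12 GB configuration into the reported 11 GB one.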

Source: VideoCardz

25 Comments on NVIDIA GeForce RTX 2000 Series Specifications Pieced Together

So is it another same old same old...

Not fussed about more VRAM just yet. The increase in bandwidth is welcome, and I am sure I'll be wanting more next round, but for now that 11 GB will do for 4K, and I would think 8 GB for lower resolutions won't be a problem for another gen or two.

DX12 + DXR + GameWorks / RTX

Like Physics acceleration a la PhysX

I maxed out my 8 GB 1080 when playing the Raw Data early dev build with tweaked rendering distance, but haven't seen that with my 1080 Ti.

RTX 4000 series next year: 2048/4096/6144 cores, or does it stay the same at 1536/3072/4608, just shrunk to half the size?

You see “maxed out” only because the GPU is storing graphics there because it can, because it is there, NOT because it needs to.

Price is important too. If it's right, I will think about it.

I don't think so. Overall, I think this will turn out to be one of the most boring generations of cards in recent years...

I bet Nvidia would only consider that if AMD suddenly launched some overwhelmingly powerful and efficient GPU... which will probably be not the case for a long time.

EDIT: Honestly, Nvidia should just make the 2080+ card the "default" 2080, they are so similar I don't see the point of launching both of them as different products...