Saturday, November 12th 2011

Sandy Bridge-E Benchmarks Leaked: Disappointing Gaming Performance?

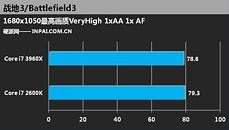

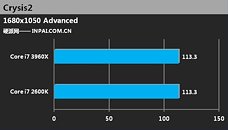

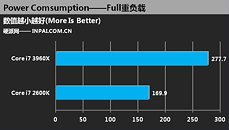

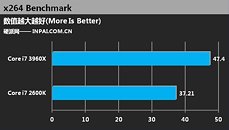

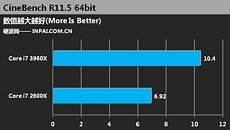

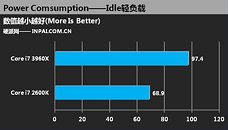

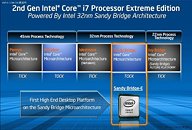

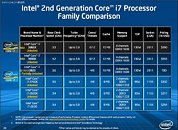

Just a handful of days ahead of Sandy Bridge-E's launch, a Chinese tech website, www.inpai.com.cn (Google translation), has done what Chinese tech websites do best: leak benchmarks and slides, Intel's NDA be damned. They pit the current i7-2600K quad-core CPU against the upcoming i7-3960X hexa-core CPU and compare them in several ways. The take-home message appears to be that gaming performance in BF3 and Crysis 2 is identical, while the i7-3960X uses considerably more power, as one might expect from two extra cores. The only advantage appears in the x264 and Cinebench tests. If these benchmarks prove accurate, gamers might as well stick with current-generation Sandy Bridge CPUs, especially as those will drop in price before reaching end of life. While this is all rather disappointing, it's best to take leaked benchmarks like these with a (big) grain of salt and wait for the usual gang of reputable websites to publish their reviews on launch day, November 14th. Softpedia reckons that these results are the real deal, however. There are more benchmarks and pictures after the jump.

Source:

wccftech.com

171 Comments on Sandy Bridge-E Benchmarks Leaked: Disappointing Gaming Performance?

I don't need to say that either. Just because you can afford a $1000 CPU, and have use for it, doesn't mean everyone else does. The 2500K has the best balance right now and is the CPU to get. End of story.

Except you're asking me that when you know what we're actually on about.

www.tomshardware.com/reviews/core-i7-3960x-x79-performance,3026.html

There's no real point spending more than $300 when it performs so admirably and can be overclocked to beat the crap out of processors with more cores.

With that aside, they say they doubt mobos will have those built in. PCI-E expanders (like Lucid's) defeat the original purpose of the platform. You can have the same on Sandy Bridge.

New architecture gives same gaming framerates as old architecture = disappointing. What's hard to see?

Of course, we need to hold our breath for the official results on release day to be sure, which is why I pose it as a question.

Regardless, I'll say it again, wait for the official benchies tomorrow before passing judgment.

And welcome to TPU. :toast:

If it was true, then one would simply buy the cheapest CPU and be done with it.

You don't know what Touhou is? :laugh:

It's not exactly known for its graphics, considering the games were all made by one guy. So even if I don't have a 3960X yet, I'm sure that such results are true. AFAIK you can't do anything with the frame cap at 60fps, and even an Atom and Intel integrated graphics can play the game.

And measuring gaming performance with a software frame cap or vsync on is pretty stupid, isn't it? :shadedshu

One wouldn't game at it, but one would certainly bench at it when comparing CPU performance, to remove the GPU from the equation.

It should have been obvious what I was trying to point out. There are "benchmarks" where CPU power doesn't really matter at all. ;)

Touhou is just a very extreme and ridiculous example. :laugh:

---------------------

Then see this. At a lower resolution, and in a more CPU-intensive scenario, there's a big difference in frame time. This is a properly done test; the one above isn't:

www.hardwarecanucks.com/charts/index.php?pid=70,76&tid=3