Wednesday, November 14th 2012

NVIDIA to Pull Through 2013 with Kepler Refresh, "March of Maxwell" in 2014

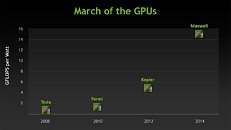

Those familiar with "Maxwell," the codename for NVIDIA's next-generation GPU architecture, will find the new company roadmap posted below slightly different. For one, Maxwell is given a new launch timeframe: 2014. Following this year's successful run in the market with the Kepler family of GPUs, NVIDIA is looking to the Kepler Refresh (GK11x) family of GPUs to lead the company's product lineup in 2013. The new GPUs will arrive in the first half of next year, most likely in March, and will be succeeded by the Maxwell family of GPUs in 2014. Apart from the fact that Kepler established good performance and energy-efficiency leads over competing architectures, a reason behind Maxwell's 2014 launch could be technical: we know from older reports that TSMC, NVIDIA's principal foundry partner, will begin mass production of 20 nanometer chips only by Q4 2013.

Source: WCCFTech

20 Comments on NVIDIA to Pull Through 2013 with Kepler Refresh, "March of Maxwell" in 2014

TPU quoting from Wccftech ? :shadedshu

Wccftech is basically full of smelly brown stuff.

That date is so far off. First they said Maxwell would come in 2013. Disappointment. :ohwell:

I wish AMD would make some very good GPUs to push the date closer to 2013. AMD 8870 specs show a promising increase, but the essence is 18u/m manufacturing technology. A 16 times increase in GFLOPS per watt over my current GPUs sounds beautiful. :D

wccftech.com/nvidia-geforce-700-series-specifications-detailed-gtx-780-based-gk110-gk114-power-gtx-760-ti/

Next I'd say Nvidia either needs to give the GK106 a bunch more OC clocking within the Boost feature, or just drop Boost and give AIBs the opening to supply it with VRM, voltage sections, and cooling to unconstrain it. As to what can be done with GK104, that's hard to ascertain; knowing they stretched it from a mainstream part to enthusiast with clocks and Boost, they'll either need to find more clock speed in process improvement or... ?

www.techpowerup.com/173851/NVIDIA-Kepler-Refresh-GPU-Family-Detailed.html

GTX780

GTX770~8970

GTX760Ti>GTX680

GTX760~GTX670

...

The GK110 is the heart of the K20 Tesla piece. It's clocked at 705 MHz and draws 225(?) watts. It's the biggest chip (GK110) Nvidia has ever produced.

I'd very much like to see GK110 in a desktop part from a curiosity standpoint. Will they rip out compute again? If not, how much power will a higher clocked 7.1 billion transistor part draw? :eek:

Going by that and the fact that the Tesla K20X is clocked at 735 MHz, IMO a 900 MHz GTX780 might be possible, or something close to that. Or maybe 850 MHz for a 225 W TDP.

I'm thinking they have to run with a form of GK110 in this next spin, at least at first as a Limited Edition Nvidia factory-released card that AIBs can dress up with decals (aka GTX690); then how much AIBs will be permitted to work their magic might be very curtailed. If Nvidia doesn't, I can't see them finding enough of a bump in a GK114 re-spin to have it power a GTX780 and stay close to what an 8970 might bring with it. So we need to keep a close eye on what the Tesla release is pointing to.

The announced list:...as well as the usual vendor options (Seneca, AMAX, IBM, HP, SGI, Penguin, Silicon Mechanics, Asus etc.) - any user running Fermi based Tesla/CUDA would be an upgrade candidate I guess. For reliable numbers you'd have to cross-reference vendors' contracts.

Anything above mainstream for gaming hasn't represented sense since GPUs were invented; that hasn't stopped a decade plus of people shelling out for the latest and greatest.

I don't think GK110 was intended as a desktop GPU from the start. Any usable SKUs Nvidia can parlay into cash and PR mean they are playing with house money; even putting out 1,000 cards ensures that the longest bar/highest number on every graph in every review for a year or more has Nvidia's name on it. From a marketing standpoint Nvidia could sustain a loss on each card sold and it would still represent good business.

Tho I might be curious on what comes after AMD's Sea Islands/R8000s...

So that sends me back to the mainstream GPU (the real sweet spot of these second-coming re-spins), as that's where either side will need to pull the lion's share. Within Nvidia's line-up, would you sense the GTX660Ti and GTX660 will get condensed under the GK116, with the Ti being bumped to 256-bit while the non-Ti stays at 192-bit? While tapering off wafer starts on the GK114 to be just, say, the GTX870Ti and then a non-Ti?

You would also have to take into consideration that there could be a second 28nm refresh/model addition before 20nm debuts in mid-2014. I'm picking that both AMD and Nvidia have contingency plans in place just in case 20nm and the following 16nm FinFET processes slip.