Monday, June 8th 2015

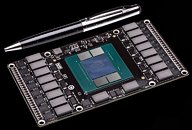

NVIDIA Tapes Out "Pascal" Based GP100 Silicon

Sources tell 3DCenter.org that NVIDIA has successfully taped out its next big silicon based on its upcoming "Pascal" GPU architecture, codenamed GP100. A successor to GM200, this chip will be the first of several based on this architecture. A tape-out means that the chip's design has been finalized and sent to the foundry for mask production, after which a small quantity of prototypes is made for internal testing and further development. It is usually seen as a major milestone in a product development cycle.

With "Pascal," NVIDIA will leapfrog HBM1, which is making its debut with AMD's "Fiji" silicon, and jump straight to HBM2, which will allow SKU designers to cram up to 32 GB of video memory onto a card. 3DCenter.org speculates that GP100 could feature anywhere between 4,500 and 6,000 CUDA cores. The chip will be built on TSMC's upcoming 16 nanometer process, which should finally reach production in 2016. GP100 and its companion performance-segment silicon, GP104 (successor to GM204), are expected to launch between Q2 and Q3 2016.
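For a rough sense of where a 32 GB figure can come from, the sketch below multiplies out per-stack numbers. It assumes the headline HBM figures (a 1024-bit interface per stack; about 1 Gbps per pin and 1 GB per stack for first-generation HBM as used on Fiji; 2 Gbps per pin and up to 8 GB per stack for HBM2) and a hypothetical four-stack board. The report does not state GP100's actual stack count or memory clocks, and the names `HbmGeneration` and `board_totals` are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HbmGeneration:
    name: str
    bus_width_bits: int   # interface width of a single stack
    gbps_per_pin: float   # data rate per pin (Gbit/s)
    gb_per_stack: int     # capacity of a single stack (GB)

    def stack_bandwidth_gbs(self) -> float:
        # total bits per second across the stack's interface, converted to bytes
        return self.bus_width_bits * self.gbps_per_pin / 8


# Assumed headline figures, NOT confirmed GP100 specifications
HBM1 = HbmGeneration("HBM1", 1024, 1.0, 1)
HBM2 = HbmGeneration("HBM2", 1024, 2.0, 8)


def board_totals(gen: HbmGeneration, stacks: int = 4) -> tuple[float, int]:
    """Return (total bandwidth in GB/s, total capacity in GB) for a hypothetical board."""
    return gen.stack_bandwidth_gbs() * stacks, gen.gb_per_stack * stacks


for gen in (HBM1, HBM2):
    bw, cap = board_totals(gen)
    print(f"{gen.name}: {bw:.0f} GB/s, {cap} GB with 4 stacks")
```

Under these assumptions four HBM1 stacks give 512 GB/s and 4 GB (Fiji's configuration), while four HBM2 stacks give 1024 GB/s and 32 GB, which matches the capacity ceiling quoted above.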

Source: 3DCenter.org

49 Comments on NVIDIA Tapes Out "Pascal" Based GP100 Silicon

On the article:

Is it just me, or does Nvidia look borderline desperate at this moment to keep consumers away from the not-yet-shown R9 390X?

They're making statements about their next card and how they will follow AMD with HBM, but jump straight to version 2.0.

They do it this way to get the most money out of people. Sure, they're a company chasing maximum profit, blah blah, but as a consumer you can stand up against it by not buying BS updates.

It's about how they formulate it. If AMD came out now and said "yeah well, we are going to release a card a little later that will have 100% faster HBM3", wouldn't you think it's a bit of "don't waste your time on that product, wait a bit and get something much better from us!"?

IIRC, AMD and Hynix started developing stacked DRAM back in 2010 (though they focused on using it on APUs); the idea of putting stacked DRAM on a discrete GPU first came from NVIDIA.

For system memory, latency is what matters most, as opposed to graphics memory, where bandwidth matters most. Going from DDR3-1600 at CL7 to DDR3-2400 at CL10, there is little (if any) latency improvement, only more bandwidth. Unfortunately, that doesn't translate into much of a performance boost in most applications.
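The DDR3 comparison above can be checked with the usual conversion: DDR memory transfers data twice per I/O clock, so the clock in MHz is half the MT/s rating, and absolute CAS latency is the CL cycle count divided by that clock. This is a first-order sketch that assumes a single 64-bit channel and ignores other timings such as tRCD and tRP.

```python
def cas_latency_ns(transfer_rate_mts: int, cas_cycles: int) -> float:
    """Absolute CAS latency in nanoseconds for a DDR module."""
    clock_mhz = transfer_rate_mts / 2      # two transfers per I/O clock
    return cas_cycles * 1000 / clock_mhz   # cycles x (1000 / MHz) ns per cycle


def peak_bandwidth_gbs(transfer_rate_mts: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s for one channel (64-bit assumed)."""
    return transfer_rate_mts * bus_width_bits / 8 / 1000


for rate, cl in ((1600, 7), (2400, 10)):
    print(f"DDR3-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns, "
          f"{peak_bandwidth_gbs(rate):.1f} GB/s per channel")
```

Latency barely moves (8.75 ns versus about 8.33 ns) while peak bandwidth rises by half (12.8 to 19.2 GB/s per channel), which is the trade-off the comment describes.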

On the other hand, GPUs are mostly bandwidth-starved anyway, so more is always better. Don't forget that current-gen GPUs are built around the memory architecture and the bandwidth it can deliver. So with nearly limitless memory bandwidth, I'm sure they'll come up with a GPU architecture to put that bandwidth to good use. :rolleyes:

We, 'the customers', are not going to force AMD or Nvidia to deliver large performance gains with every new release, even with a 50/50 market share. The whole market doesn't work like that, and never has. Ever since the Riva TNT days we have seen marginal performance increases, and all companies involved always push for a relative performance increase per generation. This makes sense economically, and also because the market relies heavily on a 'trickle-down' effect for new tech.

HBM is exactly the same as everything else. Fury won't deliver an astounding performance difference, and neither will Pascal. Does that mean HBM will be 'handicapped' to perform below its potential? No: the increased bandwidth is balanced against the power of the GPU core, resulting in a minor performance increase across the board. Overall, HBM will relieve some bottlenecks imposed by GDDR5, but all that does is introduce a new bottleneck in the core.
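One common way to reason about a bottleneck shifting from memory to the core is the roofline model: attainable throughput is the lesser of peak compute and memory bandwidth multiplied by the workload's arithmetic intensity (FLOPs per byte moved). The sketch below uses made-up round numbers (a hypothetical 6,000 GFLOPS core, 336 GB/s for a GDDR5 card versus 512 GB/s for an HBM card) purely to show the shape of the argument; they are not measurements of Fury, Pascal, or any real card.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      intensity_flops_per_byte: float) -> float:
    """Roofline estimate: the lower of the compute roof and the memory roof."""
    memory_roof = bandwidth_gbs * intensity_flops_per_byte
    return min(peak_gflops, memory_roof)


PEAK = 6000.0  # hypothetical core throughput in GFLOPS (assumption)

for intensity in (4.0, 16.0, 64.0):
    gddr5 = attainable_gflops(PEAK, 336.0, intensity)
    hbm = attainable_gflops(PEAK, 512.0, intensity)
    bound = "compute" if hbm == PEAK else "memory"
    print(f"{intensity:>5.0f} FLOP/B: {gddr5:7.0f} -> {hbm:7.0f} GFLOPS "
          f"({bound}-bound with HBM)")
```

Low-intensity work scales directly with the extra bandwidth (1344 to 2048 GFLOPS at 4 FLOP/B), but once the memory roof passes the compute roof (around 12 FLOP/B for the HBM case here) the gain shrinks and then disappears, which is the "new bottleneck on the core" argued above.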

The occasional 'jumps' that we see are always absorbed back into the pricing scheme. Look at the 970: a major performance jump, but they simply cut down the silicon and placed the fully enabled chip (the 980) at a much higher price point.

The simple fact is that we want GPU technology to move forward without a ludicrous price attached, so we lowly regular people can afford it. If you have so much money, maybe you could send everyone in this thread a brand new 980 Ti. I'm sure each person would be forever grateful. Otherwise, leave the gloating and arrogance at home. We really don't care if you can afford the most expensive, latest tech every time it's released.

My first thought about all these people who jump on the newest GPU every generation (it's mostly the same people every time) is: 'your stupidity knows no bounds, and you are proud of showing it'. Kudos for that, I guess? :p

Fun fact: those people are also the ones displaying the *least* actual knowledge about GPUs, and gaming in particular. I wonder why. A vast majority of them are now disgruntled Titan / Titan X owners who wonder why that $1,000 card won't get them 60 fps at Ultra settings in 4K. So they buy another...

You can be pedantic if you want, but they didn't invent it; they just chose another route.

I don't believe the story, though. TSMC supposedly has a lot of problems with its 16nmFF process, and the 16nmFF+ process that Pascal will use is nowhere near ready. If we're lucky, I think we'll see a small Pascal chip (a 750 Ti replacement) around this time next year, then a 970/980 replacement in Q3-Q4, then a new Titan SKU in Q1 '17. That's being optimistic.

On the other hand, I think AMD fully intends to launch the whole Arctic Islands range at this time next year (on Samsung / GF 14nmFFLP+).

IMO either Pascal in its entirety or its timing could be a complete disaster for NVIDIA.