Tuesday, August 18th 2015

Intel "Skylake" Die Layout Detailed

At the heart of the Core i7-6700K and Core i5-6600K quad-core processors, which made their debut at Gamescom earlier this month, is Intel's swanky new "Skylake-D" silicon, built on its new 14 nanometer fab process. Intel released technical documents that give us a peek into the die layout of this chip. To begin with, the Skylake silicon is tiny compared to its 22 nm predecessor, the Haswell-D (i7-4770K, i5-4670K, etc.).

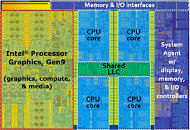

What also sets this chip apart from its predecessors, going all the way back to "Lynnfield" (and perhaps even "Nehalem"), is that it's a "square" die. The CPU component, made up of four cores based on the "Skylake" micro-architecture, is split into two rows of two cores each, sitting across the chip's L3 cache. This is a departure from older layouts, in which a single file of four cores lined one side of the L3 cache. The integrated GPU, Intel's Gen9 iGPU core, takes up nearly as much die area as the CPU component. The uncore component (system agent, IMC, I/O, etc.) takes up the rest of the die. The integrated Gen9 iGPU features 24 execution units (EUs), spread across three EU subslices of 8 EUs each. This GPU supports DirectX 12 (feature level 12_1). We'll get you finer micro-architecture details very soon.

82 Comments on Intel "Skylake" Die Layout Detailed

On the CPU side, yeah, Intel can manipulate the market however they want because they have it like that.

They just won't, as it would kill X99 without doubling up the E range first.

Now imagine that, instead of just the 4-core 133mm² die with an IGP (rarely used), Intel offered another SKU: an 8-core 133mm² die with no IGP, a true mainstream part, with the IGP replaced by something useful at no extra cost beyond copy-pasting some cores. Simple as that.

Though I don't really want 4 more cores; I'd prefer 2 more cores and 8 more PCI-E lanes.

Unbelievable, we have a GPU that is capable of video encoding and OpenCL.

There is an HEDT line for a reason... this is mainstream, so...

...IT'S ALL ABOUT THE IGPU!!!

It was clearly a gamble, in some respects, for Intel to make the entry HEDT SKU a hex-core. On one hand, a reasonable consumer might welcome it as a long-anticipated update to the HEDT lineup, at a reasonable price of ~$380, the same as the quad-core 4820K. On the other hand, others (without having any intention of buying X99) will look at the 5820K and ask Intel, or complain to other people, why the 4790K wasn't a hex-core too.

Seriously?

If the 6700K was a hex-core with no iGPU, why would the 5820K and 5930K even exist? For the sole purpose of offering more PCIe lanes? Quad-channel DDR4 (oh look, double the bandwidth, must be double the FPS too)? Intel would be shooting itself in the foot.

1. Extra costs go into the 6700K. Intel can't reuse a Xeon die like the 5820K and 5930K do, because it's not LGA2011. It would need to make a new hex-core die for LGA1151. In the end, no one buys it because the extra R&D costs demand a higher price tag, and everyone says "DX12 is coming, FX-8350 offers similar performance for about half the price". Lost lots of money here.

2. 5820K and its successor just die. I mean, what else are these two supposed to do (in addition to the 5930K, also dead)? Next, people boycott LGA2011 and say that "unless the 5960X's successor is a 10-core, I won't buy anything 2011". Jesus. So what is Intel supposed to do now? Lost more money here.

3. Intel slips back into the Pentium days and becomes no better than AMD. These few years have been about forcing the TDP down (don't look at the 6700K, look at the fact that Broadwell desktop was 65W and the other Skylake SKUs are of lower TDP than Haswell). Six-core would mean 140W on LGA1151. There aren't any stock coolers on LGA2011 because none of Intel's stock coolers (except for that one oddity during the Westmere era that was a tower) can handle that kind of heat output. 140W? Better get better VRMs, because those H81M-P33 MOSFETs aren't going to take on a six-core. And "hey look, AMD actually has stock coolers that can handle their CPUs". Lots of confusion and more money lost.

4. What happens to the other LGA1151 SKUs? Did they suddenly cease to exist? 28 PCIe lanes for the 6700K and...what? Would you want to try and explain this disjointed lineup to anyone? "Oh yeah, the top dog in the LGA1151 family is really, really powerful, but although the rest are all 6th Gen Core, they all suck in comparison."

"Intel deserves this dilemma because they cheated by winning over the OEMs anticompetitively" is not a valid argument in this scenario. Those were pre-NetBurst-eradication days. Prior to Carrizo, there were plenty of opportunities for AMD in the OEM laptop and desktop market, since Trinity/Richland and, to a lesser extent, Kaveri APUs offered much more to the average user than an i3/i5.

1. The Intel iGPU supports Intel Quick Sync. If you compress videos, it's a godsend: it's faster than the CPU and faster than GPU-accelerated compression like CUDA and whatever AMD's equivalent is called. Basically, it's the fastest way to compress videos.

2. It supports DXVA2, so you can offload video decoding when you watch movies, even Blu-ray and 3D.

3. You always have a GPU! Your graphics card died, you're testing something, you tried to overclock your graphics card with a BIOS flash and now it runs but gives no signal, etc. (basically, it's good to have a spare GPU).

4. In the future (when we might already be dead), DX12 games will support a MANY-graphics-card (multi-adapter) mode (don't confuse it with SLI), so every GPU, no matter its maker, will be able to work together, and every gamer with an iGPU will get a FREE FPS boost.

5. This one is about Broadwell CPUs only. If you look at benchmarks of all three modern CPUs (Skylake, Broadwell, and Haswell) downclocked or overclocked to the same speed, in some games Broadwell gets an extra 20+ FPS just because its iGPU has 128MB of fast eDRAM that also works as L4 cache (with the iGPU enabled, up to 50% of the eDRAM is allocated as L4 cache; with the iGPU disabled, all of it works as L4 cache).

Right now, Broadwell is in fact the FASTEST CPU for gaming! Just think: a 3.3GHz Broadwell vs. a 4GHz 4790K, and both get 82 FPS in Metro Redux (FHD, Max quality) and 97 FPS in Tomb Raider (FHD, High quality).

Any smart gamer (not me) will buy a Broadwell CPU from a website like Digital Lottery with a guaranteed overclock of 4GHz and above, and his system is going to destroy both Skylake and Haswell in every game. I'm 90% sure it's also going to beat the two CPUs that come after Skylake, unless they have the same iGPU technology with eDRAM.

Basically, what I'm saying is that you are 10000% right: there is absolutely no need for an iGPU on i7-series processors. What we NEED is 128/256MB of fast L4 cache!!!

Intel CAN DO IT; we've already seen it with Broadwell. Just remove the iGPU and keep the cache, or even better, increase it to 256MB, and keep the same price for the CPU.

I don't know how much each part costs, but somehow I'm sure that a GPU is more expensive than some cache!

End Rant :)

I think Intel was reeling from 14nm being so difficult and they're looking for ways to offset their costs. Keeping costs down with Skylake was their answer. 10nm is going to be very, very difficult (and costly) to reach.

There is no L4 cache in any other CPUs.

You are the one who needs to reread posts and try to understand them better. Take it back to where you took it from. I didn't ask Intel for the graphics part. I guess no one ever asked them to integrate it on the die. They force it on you and screw you big time.

Scumbag Intel.

Oh dear, 40% of the die is dedicated to the iGPU. What about the 7850K?

*gasp* Is that 60%? 70%? Before you start going on about how this is an "APU", it really isn't very different from Intel's mainstream "CPUs". Also, since it's apples to apples, try getting video output out of an FX-8350 on a 990FXA-UD3. Hm? Black screen? Hey, I didn't ask AMD for a huge iGPU on die. But look at what I got anyway.

AMD offered this innovation with the idea of accelerating general performance in all tasks.

Thanks to those scumbags Intel, Nvidia, Microsoft, other "developers" and co., it probably won't happen.