Tuesday, August 18th 2015

Intel "Skylake" Die Layout Detailed

At the heart of the Core i7-6700K and Core i5-6600K quad-core processors, which made their debut at Gamescom earlier this month, is Intel's swanky new "Skylake-D" silicon, built on its new 14 nanometer silicon fab process. Intel released technical documents that give us a peek into the die layout of this chip. To begin with, the Skylake silicon is tiny compared to its 22 nm predecessor, the Haswell-D (i7-4770K, i5-4670K, etc.).

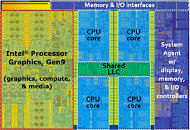

What also sets this chip apart from its predecessors, going all the way back to "Lynnfield" (and perhaps even "Nehalem"), is that it's a "square" die. The CPU component, made up of four cores based on the "Skylake" micro-architecture, is split into two rows of two cores each, sitting across the chip's L3 cache from one another. This is a departure from older layouts, in which a single file of four cores lined one side of the L3 cache. The integrated GPU, Intel's Gen9 iGPU core, takes up nearly as much die area as the CPU component. The uncore component (system agent, IMC, I/O, etc.) takes up the rest of the die. The Gen9 iGPU features 24 execution units (EUs), spread across three EU-subslices of 8 EUs each. This GPU supports DirectX 12 (feature level 12_1). We'll get you finer micro-architecture details very soon.
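As a quick sanity check on the EU count quoted above, here is a minimal sketch of the arithmetic. The function and constant names are hypothetical and chosen only for illustration; the two input figures are the ones given in the article.

```python
# Illustrative arithmetic only; names are hypothetical, not Intel terminology.
SUBSLICES = 3          # EU-subslices in the Gen9 iGPU, per the article
EUS_PER_SUBSLICE = 8   # execution units in each subslice, per the article


def total_eus(subslices: int, eus_per_subslice: int) -> int:
    """Return the total execution-unit count for a given subslice layout."""
    return subslices * eus_per_subslice


print(total_eus(SUBSLICES, EUS_PER_SUBSLICE))  # prints 24
```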


82 Comments on Intel "Skylake" Die Layout Detailed

What didn't you understand from what I've said, and what are you arguing?

This one:

Personal Supercomputing

Much of a computing experience is linked to software and, until now, software developers have been held back by the independent nature in which CPUs and GPUs process information. However, AMD Fusion APUs remove this obstacle and allow developers to take full advantage of the parallel processing power of a GPU - more than 500 GFLOPs for the upcoming A-Series "Llano" APU - thus bringing supercomputer-like performance to every day computing tasks. More applications can run simultaneously and they can do so faster than previous designs in the same class.

www.amd.com/en-us/press-releases/Pages/amd-fusion-apu-era-2011jan04.aspx

One fanboy around here is ready to ascend to the godlike status of "utterly blind, enslaved fanboy". Spewing PR today, who knows what tomorrow will bring?

The future is not fusion. The future is Sony Xperia S, and it will be the end of us all. I still need you, sanity. It was invaluable to have you at my side through this fruitless battle and now I must retreat to be close to you, sanity. He doesn't understand the meaning of hypocrisy and can't comprehend the fact that all of this Bullshit with a capital B is a phenomenon called marketing. But I must bar myself from continuing to struggle against this unending insanity.

"which camp is greener" "which camp has more sh*t on it" argument

The bottom of the HEDT has always basically matched the top of the mainstream, in terms of core count. Now we've moved to the point where the bottom of the HEDT is 6 cores, and I believe the top of the mainstream should be 6 cores as well.

Also, Sandy Bridge saw the discontinuation of such a two-prong strategy. After all, it wasn't the most logical offering; so the i5 (Clarkdale, not Lynnfield) and i3 SKUs were all about the first-time on-die Intel Graphics while the mainstream i7s were HEDT bottom-feeders brought down to LGA1156? It could be said that 1st Gen Core wasn't a blueprint for others to follow; it marked a transition from the old Core 2 all-about-the-CPU lineup to the new stack defined by integrated graphics.

Also, six cores with or without iGPU on LGA115x would put an awful amount of heat in a smaller package than LGA2011. It also would have to do away with TIM, thus confirming my argument that Intel would have to put more money into this new i5/i7K design than it is actually worth.

Much like Nvidia gets out the mainstream core before the big die.

the future IS fusion... for ALL platforms, x86, arm, mobile, desktop, supercomputer, every calculation anywhere is simple math & different math is best used on different types of processors

opencl runs everywhere, perfect way to utilize the whole 'apu' from whatever company

People in this thread whine but, if you don't like it, don't buy it! The complaining in this thread is merely astonishing.

This. I would roll it into the mainstream platform argument. People who want more cores and no iGPU are power users, not mainstream users. My wife had a Mobility Radeon 265X in her laptop. It has been running on the HD 4000 graphics but she's never noticed a difference. That's because she's like the majority of users out there who would never use it.

We here at TPU are a minority, not a majority. It's amazing how people don't seem to understand that and how the market is driven by profit, not making power users happy.

Seriously, a lot of people need to search up that WCCFTech (I think) article where an i7-5960X engineering sample was first leaked to the public (after, of course, being delidded improperly and having half of its broken die still epoxied to the IHS). That LGA2011-3 package is huge. Not only is it huge, the 8-core die is also HUGE. The size of the die alone is not too far off that of the entire LGA1150 IHS. When we consider that a six-core die would not be too much smaller (since it needs more than 8MB of L3 if it wants to avoid ending up like the X6 1100T), a 6-core mainstream CPU is just not logical on size alone, without making any mention of the iGPU.

But why don't they keep their inadequate graphics "accelerators" on motherboards as it always used to be and actually give customers the right to choose what they want?

Nope, AMD will change this next year. They only need a working CPU on 14 nm and Intel's bad practices will be gone forever.

Wow, do you realise that in most countries these Skylake processors are the top of the line of what can be afforded? They are bought by people who are either enthusiasts or pretend to be such, or who just watch their budgets tightly.

There is the unpleasant feeling of waiting, and waiting, on a slow CPU while it takes its time to process all the required data... a waste of time on an enormous scale. If you have the willingness and patience to cope with that.

I just tell you that I'm 99% sure that in 2017 you will start to sing another song. ;)

The bottleneck for most consumers isn't CPU but HDD or internet performance. The bottleneck for gamers is the hardware found in the PlayStation 4 and Xbox One tied to how ridiculous of a monitor(s) they buy (e.g. a 4K monitor is going to put a lot more stress on the hardware than a 1080p monitor).