Monday, October 26th 2015

GDDR5X Puts Up a Fight Against HBM, AMD and NVIDIA Mulling Implementations

There's still a little bit of fight left in the GDDR5 ecosystem against the faster and more energy-efficient HBM standard, which has a vast and unexplored performance growth curve. The new GDDR5X standard offers double the bandwidth per-pin compared to current generation GDDR5, without any major design or electrical changes, letting GPU makers make a seamless and cost-effective transition to it.

In a presentation by a DRAM maker leaked to the web, GDDR5X is touted as offering double the data-rate per memory access, at 64 byte/access, compared to 32 byte/access by today's fastest GDDR5 standard, which is currently saturating its clock/voltage curve at 7 Gbps. GDDR5X breathes a new lease of life into the ageing DRAM standard, offering 10-12 Gbps initially, with a goal of 16 Gbps in the long term. GDDR5X chips will have identical pin layouts to their predecessors, and hence it should cost GPU makers barely any R&D to implement them.

When mass-produced by companies like Micron, GDDR5X is touted to be extremely cost-effective compared to upcoming HBM standards, such as HBM2. According to a Golem.de report, both AMD and NVIDIA are mulling GPUs that support GDDR5X, so it's likely that the two could reserve expensive HBM2 solutions for only their most premium GPUs, and implement GDDR5X on their mainstream/performance solutions, to keep costs competitive.
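As a rough illustration of the per-access figures above, the sketch below derives bytes per access from a chip's interface width and burst length, and per-chip bandwidth from the per-pin data rate. The 32-bit per-chip interface and the burst lengths of 8 (GDDR5) and 16 (GDDR5X) are assumptions that the leaked presentation summary does not spell out, and the function names are hypothetical.

```python
# Assumption: each DRAM chip exposes a 32-bit data interface.
CHIP_INTERFACE_BITS = 32


def bytes_per_access(burst_length, interface_bits=CHIP_INTERFACE_BITS):
    """Bytes moved by one access: interface width times burst length, in bytes."""
    return interface_bits * burst_length // 8


def chip_bandwidth_gbs(pin_rate_gbps, interface_bits=CHIP_INTERFACE_BITS):
    """Peak per-chip bandwidth in GB/s for a given per-pin data rate in Gbps."""
    return pin_rate_gbps * interface_bits / 8


# Assumed burst lengths: 8 for GDDR5, 16 for GDDR5X.
gddr5_bytes = bytes_per_access(8)
gddr5x_bytes = bytes_per_access(16)
print(gddr5_bytes, gddr5x_bytes)

# Per-pin rates quoted in the article: 7 Gbps today, 10-12 Gbps initially, 16 Gbps long term.
for rate in (7, 10, 12, 16):
    print(rate, "Gbps per pin ->", chip_bandwidth_gbs(rate), "GB/s per chip")
```

Under these assumptions, doubling the burst length is what takes the access size from 32 to 64 bytes while the pin count stays the same.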

Source:

Golem.de


69 Comments on GDDR5X Puts Up a Fight Against HBM, AMD and NVIDIA Mulling Implementations

In high-end graphics cards, a less demanding memory system means more power headroom for the GPU. AMD used this fact with the Fury X, so I think high-end graphics will benefit more from HBM, especially since HBM is still in its first generation. Second-generation HBM2 is coming with next-gen AMD and NVIDIA GPUs; it is faster and allows more memory than the first generation, leaving even more room for the GPU...

Plus it's not just power: HBM also doesn't run as hot as GDDR5, especially since GDDR5 requires its own power MOSFETs, which also consume power and generate heat.

So HBM (especially HBM2) is a winner over GDDR5 in every respect except cost, so I think it will stay in the high-end market, especially on dual-GPU cards, where even PCB space is a challenge of its own!

Another factor, like supply chain/availability or a massively different cost, will force manufacturers to use their second choice, which is made easier by the already familiar technology. That in turn makes HBM the niche one, with the corresponding cost overhead.

On the other hand, I could see GDDR5X being used on cheaper 14/16nm cards.

Where is the fight?

GDDR5X would functionally be on lower end hardware, taking the place of retread architectures. This is similar to how GDDR3 existed for quite some time after GDDR5 was implemented, no?

If that was the case GDDR5X wouldn't compete with HBM, but be a lower cost implementation for lower end cards. HBM would be shuffled from the highest end cards downward, making the competition (if the HBM2 hype is to be believed) only on the brackets which HBM production could not fully serve with cheap chips.

As far as Nvidia versus AMD, why even ask about that? Most assuredly, Pascal and Arctic Islands are shooting for lower temperatures and power draw. Upgrading RAM to be more power hungry opposes that goal, so the only place it'll be viable is where GPUs would be smaller, which is just the lower end cards. Again, there is no competition.

I guess I'm agreeing with most other people here. GDDR5X is RDRAM. It's better than what we've got right now, but not better than pursuing something else entirely.

For assurance I'll ask... can this be implemented without changes to the chip's memory controller, meaning existing parts could've got GDDR5X as pretty much plug and play?

Now, for Maxwell a year ago it would've been too late for sure, and/or with Maxwell's memory compression and overall efficiency they could forgo the supposed benefits at the price/margins they intended to be at. Though one would've thought AMD could've made use of this on Hawaii (390/390X), so why didn't they? Perhaps 8 GB was more of a "marketing boon" than the extra bandwidth, given the power GDDR5X dictates. Probably 4 GB of new GDDR5X was more costly versus 8 GB, used more power, and with a 512-bit bus it probably didn't see the performance jump to justify it.

I think we might see it with 14/16nm FinFET parts that hold to a 192/256-bit bus and suffice fine on just 4 GB. I think they need to offset the work on the new process in markets where 4 GB is all that's required. If it's a case of resolutions that truly can use more than 4 GB, HBM2 has it beat.

This is an alternative to GDDR5 for mainstream/lower cards of upcoming generation, nothing more. Cards can have a smaller memory controller, or cheaper memory controller compared to normal GDDR5 because of the higher bandwidth of these.

Maybe people are too pressed for time to actually read the info and do some basic research. Much easier to skim read (if that) and spend most of the available time writing preconceived nonsense.

1) GDDR5X is lower voltage (1.5 to 1.35, or a 10% decrease). Fact.

2) GDDR5X targets higher capacities. Extrapolated fact based upon the prefetch focus of the slides.

3) Generally speaking, GPU RAM is going to be better utilized, therefore low end cards are going to continue to have more RAM on them as a matter of course. Demonstrable reality.

4) Assume that RAM quantities only double. You're looking at a 10% decrease in voltage, with a 100% increase in count. That's a net power increase of 80% (insanely ballparked based off variable voltage and constant leakage, more likely to be a 50-80% increase in reality). Reasonable assumption of net influence.

Therefore, GDDR5X is a net improvement per memory cell, but as more cells are required it's a net decrease overall. HBM has yet to show real improvements in performance, but you've placed the cart before the horse there. Fury managed to take less RAM and make it not only fit in a small thermal envelope, but actually perform well despite basically being strapped to a GPU otherwise not really meant for it (yay interposer).
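To put rough numbers on point 4 above, here is a minimal sketch that compares two ways of ballparking it. The linear model (power proportional to voltage) reproduces the 80% figure; the quadratic model (power proportional to voltage squared) is a common first-order approximation for CMOS switching power and is my assumption, not something from the slides. Both ignore clock speed, leakage and I/O termination, and the function name is hypothetical.

```python
def relative_power(voltage_ratio, chip_count_ratio, exponent):
    """Total memory power relative to the baseline.

    exponent=1 treats power as proportional to voltage (the ballpark in point 4);
    exponent=2 treats it as proportional to voltage squared (assumed CMOS model).
    """
    return (voltage_ratio ** exponent) * chip_count_ratio


voltage_ratio = 1.35 / 1.5   # the 10% drop from point 1
chip_count_ratio = 2.0       # point 4: RAM quantity doubles

linear_estimate = relative_power(voltage_ratio, chip_count_ratio, 1)
quadratic_estimate = relative_power(voltage_ratio, chip_count_ratio, 2)

print(f"linear:    +{(linear_estimate - 1) * 100:.0f}%")
print(f"quadratic: +{(quadratic_estimate - 1) * 100:.0f}%")
```

Either way the total goes up once the chip count doubles; the quadratic estimate simply lands lower in the 50-80% range mentioned above.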

While I respect you pointing out the obvious, perhaps you should ask what underlying issues you might be missing before asking if everyone is a dullard. I can't speak for everyone, but if I explained 100% of my mental processes nobody would ever listen. To be fair though, I can't say this is everyone's line of reasoning.

Using the current Hawaii GPU* as a further example (since it is architecturally similar):

512 bit bus width / 8 * 6 GHz effective memory speed = 384 GB/sec of bandwidth.

Using GDDR5X on a future product with half the IMCs:

256 bit bus width / 8 * 10-16 GHz effective memory speed = 320-512 GB/sec of bandwidth, using half the number of IMCs and half the memory pinout die space.

So you effectively have saved die space and lowered the power envelope on parts whose prime consideration is production cost and sale price (which Kanen referenced). The only way this doesn't make sense is if HBM + Interposer + microbump packaging is less expensive than traditional BGA assembly - something I have not seen mentioned, nor believe to be the case.

* I don't believe Hawaii-type performance is where the product is aimed, but merely used as an example. A current 256-bit level of performance being able to utilize a 128-bit bus width is a more likely scenario.
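The arithmetic above can be sketched as a small helper. The function name is hypothetical, and the 128-bit case at the end is only my illustration of the footnote, using the 7 Gbps GDDR5 ceiling quoted in the article as the baseline for a current 256-bit card.

```python
def peak_bandwidth_gbs(bus_width_bits, effective_rate_gbps):
    """Peak memory bandwidth in GB/s: bus width in bytes times effective data rate."""
    return bus_width_bits / 8 * effective_rate_gbps


# Hawaii as configured above: 512-bit bus, 6 Gbps effective GDDR5.
hawaii = peak_bandwidth_gbs(512, 6)

# Future part with half the IMCs: 256-bit bus, 10-16 Gbps effective GDDR5X.
gddr5x_range = (peak_bandwidth_gbs(256, 10), peak_bandwidth_gbs(256, 16))

# Footnote scenario (illustrative): 256-bit GDDR5 at 7 Gbps versus 128-bit GDDR5X.
current_256bit = peak_bandwidth_gbs(256, 7)
narrow_range = (peak_bandwidth_gbs(128, 10), peak_bandwidth_gbs(128, 16))

print("Hawaii, 512-bit GDDR5:", hawaii, "GB/s")
print("256-bit GDDR5X:", gddr5x_range, "GB/s")
print("256-bit GDDR5 at 7 Gbps:", current_256bit, "GB/s")
print("128-bit GDDR5X:", narrow_range, "GB/s")
```

On these numbers a 128-bit GDDR5X bus would need roughly 14 Gbps to match a 256-bit GDDR5 card at 7 Gbps, so the footnote scenario depends on the faster end of the GDDR5X range.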

Next, let's review the quotes.

A very true point, yet somewhat backwards. Let's review the slides you criticize others for not reading. In particular, let's review 94a. The way that the memory supposedly doubles bandwidth is a much larger prefetch. That's all fine and dandy, but if I'm reading this correctly that means the goal is to have more RAM to cover the increasing prefetch. This would generally imply that more RAM would produce better results, as more data can be stored with prefetching accounting for an effectively increased bandwidth.

Likewise, everyone wants more RAM. Five years ago a 1 GB card was high end, while today an 8 GB card is high end. Do you really expect that trend not to continue? Do you think somebody out there is going to decide that 2 GB (what we've got now on middle ground cards) is enough? I'd say that was insane, but I'd prefer not to have it taken as an insult. For GDDR5X to perform better than GDDR5 you'd have to have it be comparable, but that isn't what sells cards. You get somebody to upgrade cards by offering more and better, which is easily demonstrable when you can say we doubled the RAM. While in engineering land that doesn't mean squat, it only matters that people want to fork their money over for what is perceived to be better.

To the former point, prove it. The statement that overclocking increases performance is...I'm going to call it a statement so obvious as to be useless. The reality is that any data I can find on Fiji suggests that overclocking the core vastly outweighs the benefits of clocking the HBM alone (this thread might be of use: www.techpowerup.com/forums/threads/confirmed-overclocking-hbm-performance-test.214022/). If you can demonstrate that HBM overclocking substantially improves performance (let's say 5:4, instead of a better 1:1 ratio) I'll eat my words. What I've seen is sub 2:1, which in my book says that the HBM bandwidth is sufficient for the cards, despite its being the more limited HBM1.

To the latter point, it's not addressing the issue. AMD wanting overall performance to be similar to that of Titan didn't require HBM, as demonstrated by Titan. What it required was an investment in hardware design, rather than re-releasing the same architecture with minor improvements, a higher clock, and more thermal output. I love AMD, but they're seriously behind the ball here. The 7xxx, 2xx, and 3xx cards follow that path to a T. While the 7xxx series was great, Nvidia invested money back into R&D to produce a genuinely cooler, less power hungry, and better overclocking 9xx series of cards. Nvidia proved that Titan level performance could easily be had with GDDR5. Your argument is just silly.

As to the final point, we agree that this is a low end card thing. Between being perfect for retread architectures, cheap to redesign, and being more efficient in direct comparison it's a dream for the middle range 1080p crowd. It'll be cheap enough to give people 4+ GB (double the 2 GB that people are currently dealing with on middle end cards) of RAM, but that's all it's doing better.

Consider me unimpressed with GDDR5X, but hopeful that it continues to exist. AMD has basically developed HBM from the ground up, so they aren't going to use GDDR5X on anything but cheap cards. Nvidia hopefully will make it a staple of their low end cards, so HBM always has enough competition to keep it honest. Do I believe we'll see GDDR5X in any real capacity? No. 94a says that GDDR5X will be coming out around the same time as HBM2. That means they'll be fighting to get a seat at the Pascal table, when almost everything is supposedly already set for HBM2. That's a heck of a fight, even for a much cheaper product.

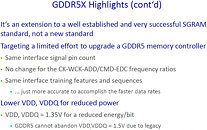

The slide said:

Lower VDD, VDDQ for reduced power

• VDD, VDDQ = 1.35V for reduced energy/bit

• GDDR5 cannot abandon VDD, VDDQ = 1.5V due to legacy

Now, that would imply being more energy-efficient, but it can't tell us whether the amount saved offers a reasonable gain in real-world performance.

As for the memory controller, it said:

• The GDDR5 command protocol remains preserved as much as possible

• GDDR5 ecosystem is untouched

Though on the next slide it states:

Targeting a limited effort to upgrade a GDDR5 memory controller.

That sounds like existing memory controllers would need some tweaking, and correct, no one would see the value of reworking the chip's controller when all they need is for the chip to hold out 6-8 months.

Firstly, who's to say that a chip has a lifetime of 6-8 months? Most GPUs in production have lifetimes much longer than that, and with process node cycles likely to be longer, I'd doubt that GPUs would suddenly have their lifetime shortened.

Secondly, memory controllers and the GDDR5 PHY (the physical layer between the IMCs and the memory chips) are routinely revised, and indeed reused across generations of architectures. One of the more recent cases of a high profile/high sales implementation was that initiated on Kepler (GTX 680's GK104-400 to GTX 770's GK104-425) and is presently used in Maxwell. AMD undoubtedly reuse their memory controllers and PHY as well. It is quite conceivable (and very likely) that future architectures such as Arctic Islands and Pascal will be mated with the GDDR5/GDDR5X logic blocks in addition to HBM, since increased costs associated with the latter (interposer, micro-bumping packaging, assembly, and verification) would make a $100-175 card much less viable even if the GPU were combined with a single stack of HBM memory.

1 GB cards were good for 3-5 years or until now, depending on the game, but I'd say at least 3 years. I used an HD 5970 for a long time and it has only 1 GB of VRAM, so don't overestimate how much VRAM is needed. That said, 4 or 8 GB will be perfectly fine in 2016, as long as you don't play at 4K with highest details on a 4 GB card, and even that will depend on the game. But 6 GB (with compression, like the 980 Ti) or 8 GB (like the R9 390X) will suffice.

Sadly, this is true. But I don't care about it much - we are here to speak about what is the truth or what is really needed and not what the average users out there think is better. They think a lot and 50-90% of it is wrong...

I never said HBM overclocking brings a lot of performance. I just said it DOES, and this is the important thing - because a card that already had all the bandwidth it needs would never gain any FPS from overclocking the VRAM. So HBM is good, it helps - don't forget AMD's compression is a lot worse than what NV has on Maxwell, so they NEED lots of bandwidth on their cards. This is why Hawaii has a 512-bit bus, at first with RAM clocked at only 1250 MHz and now at 1500 MHz - because it never has enough, and this is why Fiji needs even more, because it is a more powerful chip - so it got HBM. The alternative would have been 1750 MHz GDDR5 on a 512-bit bus like Hawaii's, but that would've drawn too much power and still been inferior in terms of maximum bandwidth.

This is not about what AMD should've had, it is about what AMD has (only the GCN 1.2 architecture) and what they could do with it. HBM made a big, big GCN possible - without it, no, never possible. And this is what I meant. From my perspective it is addressing the issue, even if not directly. It helped an older architecture go big and gave AMD a strong performer they otherwise would've missed, because Fiji with GDDR5 would have been impossible - too high a TDP, they couldn't have done that, it would've been awkward.

And btw. they lacked the money to develop a new architecture like Nvidia, I think.

And therefore my argument is the opposite of silly. First try to understand before you run out and call anyone "silly". You are very quick to judge or harass people, not the first time you've done that - you do it way too frequently, and shouldn't do it at all.

I only see HBM1/2 on high-end cards now or in 2016 because it's too expensive for lower cards - therefore GDDR5X is good for middle to semi-high-end cards, I'd say. I would even bet on that. You really expect a premier technology to be used on middle to semi-high-end cards? I don't. GDDR5X has a nice gap there to fill, I think.

Well, that's no problem. If GDDR5X arrives with HBM2, they can plan for it and produce cards with it. I don't see why this would be a problem. And as I said, I only see HBM2 on high-end or highest-end (650$) cards. I think the 400 (if any)/500/650$ cards will have it - so everything cheaper than that will be GDDR5X, and that's not only "cheap cards". I don't see 200-400 as "cheap". Really cheap is 150 or less and this is GDDR5-land (not even GDDR5X), I think.

Here's the post I quoted, paring out the unnecessary bits. I've highlighted the silliness. If I weren't being generous, I'd call them garbage statements which are either factually incorrect or useless.

As you can see, most of this statement is silly. Allow me to tear into it though.

1) it's a fact that they would be even more bandwidth limited without HBM,

Really? Is it a fact? How then does Nvidia have the performance it has with Titan? You draw a false equivalence, based upon a faulty premise: because HBM is designed to have higher bandwidth, it must therefore be responsible for performance. Where are your facts?

2) overclocking HBM increased performance of every Fiji card further

Again, facts. What I've seen is overclocking HBM leads to 50% or less returns on improvement. For example, the cited 8% overclock only returns 4% increased numerical results. Technically overclocking does increase performance, but you're weaseling out of this argument by saying any increase is an increase. When 50% of your added effort is wasted without seeing real improvement then it isn't really a reasonable improvement.

3) Fiji has not too much bandwidth

This goes back to point two. If Fury X (not Fiji in general) was bandwidth limited an 8% increase in clocks would yield somewhere near 8% of improved performance. It doesn't, therefore your point is invalid. Rather than dismissing it, I call it a silly and unsubstantiated point.

4) proven that it can't have enough bandwidth

Same as 3.

5) there would be no Fiji without HBM

Except you're wrong. There would be no Fury inside of the form factor and thermal envelope they chose; that doesn't mean Fiji wouldn't exist. You're equating two entirely separate and unrelated topics without factual basis here.

6) 275W TDP of Fury X

Artificially chosen value by AMD. This is irrelevant to the implementation of HBM (as demonstrated by Nvidia).

7) So not only DID the increased bandwidth help (a lot), it helped making the whole card possible at all

You reiterated all of your previous points in a single sentence. Congratulations, but a house built without a solid foundation is going to collapse rather easily. You've drawn all of these conclusions, in the face of existing data that proves you wrong. My teachers called it cute when I did this in school. My bosses fired me for incompetence. You are denying reality, and therefore are either an idiot or silly. I choose to give you the benefit of doubt and assume silliness. We've all been guilty of that at some point in time.

As to HBM2 not being available/cost effective, may I ask what exactly you expect of GDDR5X? It's an as yet unmanufactured standard, without substantial testing behind it. It may be largely plug and play with older controllers, but it still has to be made by somebody. This means added costs as the process is proven out, additional costs for redesigns of controllers to actually see the benefits of GDDR5X (why would you switch to what has to be a more expensive type of memory while cheaper stuff is more readily available), and supply issues all their own.

What you're arguing for is that in the midst of pushing out HBM, both AMD and Nvidia will push out another new standard. Why? Why would you ever completely retool everything, with less than 12 months to design, test, rework, prototype, and have manufacturing specifications for a product line? It'd be insane to do so.

Let's offer the benefit of doubt again, and acquiesce to your theory (based on nothing) that 90% of cards will not be HBM based. In order to accept that we have to make the assumption that HBM2 is not being produced right now, and will in fact only see production late next year. Why would AMD and Nvidia let that happen? They know that their new processes will finally be out next year. They know that the shrink will produce more performance gains than the last two redesigns, because of its huge magnitude. They know that Pascal and Arctic Islands will be the time that everybody re-evaluates 3-4 year old cards and decides it's time to evaluate an upgrade. Knowing all of this, how do you come to the conclusion that they aren't already starting on HBM2 chip orders (yes, it was the interposer that was the issue with HBM1, I know)?

I refer to your statements as silly, because they make no logical sense. I don't directly call your opinions idiotic, because you've demonstrated a grasp on reality. Our opinions may differ, but you deserve the respect of being proven wrong rather than being dismissed out of hand for saying things that are unconnected to observable and demonstrable reality. It would help your argument to bring facts to the table though. It's hard to argue that a point like "overclocking makes things faster" is inaccurate, when you don't go out and at least try to have factual support for your statements.

As to your personal opinions of me, I don't care. You are more than welcome to call me an ass, and there's no reason to defend myself against it. In the last month I've been misinterpreted as calling everyone from West Virginia victims of a lack of genetic diversity (further stretched into a personal insult of a person I don't know), I've been accused of calling all southerners hicks and rednecks (despite never using the terms), and I've more than once been proven wrong. None of us, in the US at least, have the right to not be offended. The point of a forum is to raise a substantive argument based upon facts, and have poorer arguments torn apart by reality. It's the only way we can make sure our beliefs are rooted in reality, and not fantasy.