Tuesday, February 28th 2017

NVIDIA Announces the GeForce GTX 1080 Ti Graphics Card at $699

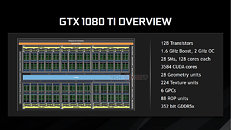

NVIDIA today unveiled the GeForce GTX 1080 Ti graphics card, its fastest consumer graphics card based on the "Pascal" GPU architecture. It is positioned as a more affordable alternative to the flagship TITAN X Pascal at $699, with market availability from the first week of March 2017. Based on the same "GP102" silicon as the TITAN X Pascal, the GTX 1080 Ti is slightly cut down. While it features the same 3,584 CUDA cores as the TITAN X Pascal, it carries less memory, 11 GB, over a slightly narrower 352-bit wide GDDR5X memory interface. This translates to 11 memory chips on the card (352 bits divided by 32 bits per chip). On the bright side, NVIDIA is using newer memory chips than the ones it deployed on the TITAN X Pascal, which run at 11 Gbps (GDDR5X-effective), so the memory bandwidth is 484 GB/s.
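The chip count and bandwidth figures follow from simple arithmetic. Below is a minimal Python sketch, assuming one 32-bit interface per GDDR5X chip and, for comparison, the TITAN X Pascal's 384-bit bus at 10 Gbps (the TITAN X figures are not stated in this article). The function names are illustrative only.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: each bus pin moves data_rate_gbps gigabits per second."""
    return bus_width_bits * data_rate_gbps / 8  # 8 bits per byte


def chip_count(bus_width_bits: int, bits_per_chip: int = 32) -> int:
    """Memory chips needed to populate the bus (assumes 32-bit GDDR5X chips)."""
    return bus_width_bits // bits_per_chip


gtx_1080_ti = memory_bandwidth_gbs(352, 11)     # 484.0 GB/s
titan_x_pascal = memory_bandwidth_gbs(384, 10)  # 480.0 GB/s (assumed 10 Gbps GDDR5X)
print(f"GTX 1080 Ti: {gtx_1080_ti:.0f} GB/s from {chip_count(352)} chips")
print(f"TITAN X Pascal: {titan_x_pascal:.0f} GB/s from {chip_count(384)} chips")
```

Under these assumptions the faster 11 Gbps chips more than offset the narrower bus, so the GTX 1080 Ti ends up with slightly more raw bandwidth than the TITAN X Pascal despite having one fewer memory chip.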

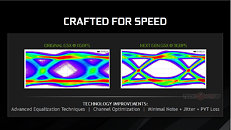

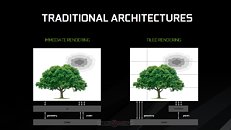

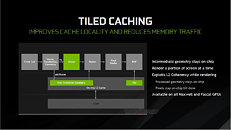

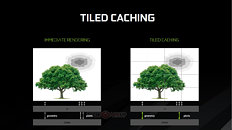

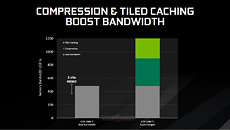

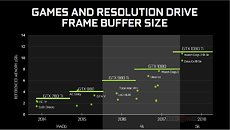

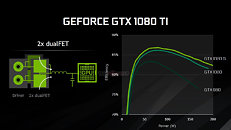

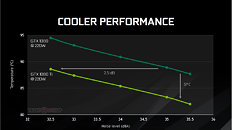

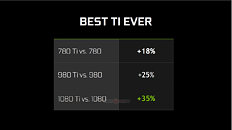

Besides the narrower 352-bit memory bus, the ROP count is lowered to 88 (from 96 on the TITAN X Pascal), while the TMU count is unchanged at 224. The GPU core is clocked at a boost frequency of up to 1.60 GHz, with the ability to overclock beyond the 2.00 GHz mark.

It gets better: the GTX 1080 Ti features memory advancements not found on other "Pascal" based graphics cards, namely a newer memory chip and an optimized memory interface running at 11 Gbps. NVIDIA has also finally announced its Tiled Rendering technology publicly. NVIDIA has kept this feature under wraps since the GeForce "Maxwell" architecture, and it is one of the secret sauces behind NVIDIA's lead. Tiled Rendering greatly improves memory bandwidth utilization by working through the render in square-sized chunks (tiles), instead of drawing each whole polygon at once. Geometry and textures of the object being processed thus stay on-chip (in the L2 cache), which reduces cache misses and memory bandwidth requirements. Together with its lossless memory compression tech, NVIDIA expects Tiled Rendering and its storage tech, Tiled Caching, to more than double, or even nearly triple, the effective memory bandwidth of the GTX 1080 Ti over its physical bandwidth of 484 GB/s.

NVIDIA is making sure it doesn't run into the thermal and electrical issues of previous-generation reference-design high-end graphics cards by deploying a new 7-phase dual-FET VRM, which reduces the load (and thereby the temperature) of each MOSFET. The underlying cooling solution is also improved, with a new vapor-chamber plate and a denser aluminium channel matrix. Watt for watt, the GTX 1080 Ti will hence be up to 2.5 dBA quieter than the GTX 1080, or up to 5°C cooler. The card draws power from a combination of 8-pin and 6-pin PCIe power connectors, with the GPU's TDP rated at 220W.
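To make the tiling idea more concrete, here is a minimal Python sketch of the binning step a tile-based rasterizer performs before drawing: each triangle is assigned to every screen tile its bounding box overlaps, so all the work for one tile can then be done while that tile's data sits in on-chip cache. NVIDIA has not published how its implementation works; the 16-pixel tile size, the bounding-box test, and the name `bin_triangles` are hypothetical choices for illustration.

```python
import math
from collections import defaultdict

TILE_SIZE = 16  # hypothetical tile edge in pixels; NVIDIA has not disclosed its tile size


def bin_triangles(triangles, width, height, tile_size=TILE_SIZE):
    """Assign each triangle to every screen tile its bounding box overlaps.

    triangles: list of triangles, each a list of three (x, y) pixel coordinates.
    Returns a dict mapping (tile_x, tile_y) -> list of triangle indices.
    """
    bins = defaultdict(list)
    max_tx = (width - 1) // tile_size
    max_ty = (height - 1) // tile_size
    for idx, tri in enumerate(triangles):
        xs = [x for x, _ in tri]
        ys = [y for _, y in tri]
        # Convert the bounding box to tile coordinates, clamped to the screen.
        tx0 = max(0, math.floor(min(xs)) // tile_size)
        tx1 = min(max_tx, math.floor(max(xs)) // tile_size)
        ty0 = max(0, math.floor(min(ys)) // tile_size)
        ty1 = min(max_ty, math.floor(max(ys)) // tile_size)
        # Off-screen triangles produce empty ranges and are never binned.
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(idx)
    return bins


if __name__ == "__main__":
    tris = [[(0, 0), (10, 0), (0, 10)], [(10, 10), (40, 10), (10, 20)]]
    # Process tile by tile: only the triangles touching a tile are fetched for it.
    for tile, ids in sorted(bin_triangles(tris, 64, 64).items()):
        print(tile, ids)
```

A renderer built this way would then shade one tile at a time, keeping that tile's color and depth values on-chip until the tile is finished. The bounding-box test is conservative, so a triangle may be binned into a tile it does not actually cover.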

The GeForce GTX 1080 Ti is designed to be anywhere between 20% and 45% faster than the GTX 1080 (35% on average). It is widely expected to be faster than the TITAN X Pascal out of the box despite its narrower memory bus and fewer ROPs, because the higher boost clocks and 11 Gbps memory make up for the deficit. What's more, the GTX 1080 Ti will be available in custom-design boards with factory-overclocked speeds, so it will end up being the fastest consumer graphics option until there's competition.


160 Comments on NVIDIA Announces the GeForce GTX 1080 Ti Graphics Card at $699

If you look at sold eBay listings, a few Titan X video cards sold for 1k today, which is a pretty big drop. That's assuming none of those buyers simply return the cards for a refund. If you wanted to sell your Titan X, you should have sold it yesterday to get full value back.

In terms of correlation, yes, temperature and dBA are (and have always been, since the Big Bang established the laws of physics and thermodynamics) negatively correlated.

The causal relation here, though, is NOT that the fan slows down as the heat goes up; it IS that as you slow down the fan, the heat goes up.

That's why you were told you're reading the graph backwards...

EDIT: The more direct way to read the graph is that as you increase the fan speed (and hence the dBA) the card runs cooler.

Along with my post above, recall that the X axis is typically the control parameter (which here is the fan speed) and the Y axis is the response parameter (which here is the temperature that is realized as response to the fan speed the user decides on).

So, you guys need to learn how to read a graph and reconcile it with the laws of physics and thermodynamics, which I believe everyone here at least has an intuition of ;)

@theGryphon

What NVIDIA is basically saying is the following...

With the GTX 1080, the cooler fan had to run at speeds that create 32.5 dB of noise to reach 94°C, and 35.5 dB to reach 87°C.

With the GTX 1080 Ti, they've achieved the same noise levels, but at lower temperatures of 88°C and, what's that, 82°C?

So, technically, they won't make it quieter out of the box, but it can be quieter because they created this temperature gap. You could theoretically lower the fan speed to achieve old temperatures and gain in quieter operation.
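This reading can be checked with a straight-line interpolation between the two points per card. Here is a rough Python sketch, assuming the approximate chart readings quoted in the comment above and a linear noise/temperature relationship between them; `noise_for_temp` is a hypothetical helper, not anything NVIDIA published.

```python
# Approximate (noise dB, temperature °C) readings quoted in the comment above.
GTX_1080 = [(32.5, 94.0), (35.5, 87.0)]
GTX_1080_TI = [(32.5, 88.0), (35.5, 82.0)]


def noise_for_temp(points, target_temp):
    """Linearly interpolate the noise level (dB) at which a card settles at target_temp."""
    (n0, t0), (n1, t1) = points
    slope = (t1 - t0) / (n1 - n0)  # °C per dB; negative, since more fan noise means a cooler card
    return n0 + (target_temp - t0) / slope


# Same fan noise (35.5 dB): how much cooler is the GTX 1080 Ti?
cooler_at_same_noise = GTX_1080[1][1] - GTX_1080_TI[1][1]  # 87 - 82 = 5 °C

# Same temperature (87 °C): how much quieter can the GTX 1080 Ti run?
ti_noise = noise_for_temp(GTX_1080_TI, 87.0)  # 33.0 dB
quieter_by = GTX_1080[1][0] - ti_noise        # 35.5 - 33.0 = 2.5 dB
print(f"{cooler_at_same_noise:.1f} °C cooler, or {quieter_by:.1f} dB quieter")
```

Both numbers line up with NVIDIA's "up to 2.5 dBA quieter, or up to 5°C cooler" claim in the article, which supports reading the chart with fan noise as the control and temperature as the response.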

It wouldn't surprise me if NVIDIA releases something like a 2080 Ti with the full GPU and 12GB RAM + higher clocks when Vega comes out, for significantly better performance. They might then be able to hike the price, too...

For starters, we've known there'd be a Ti version since, like, forever. Also, this was originally meant to happen in January or thereabouts, if you recall. So the people buying a 1080 in the last few months (myself included) knew everything they needed to know. Either they could not wait, or they had decided in advance that the money required was outside their budget/sense of reason.

(Don't pay attention to the price tags mentioned here. Don't even pay attention to the price tags + VAT. You need to add customs [a world exists outside the US], and you need to add the extra bucks charged for the 'improved' models that will be coming out*. For millions of people, this card translates to about 1.5k.)

* in case you're too far gone into the techie side of the force, most folks don't give a rat's behind about founder editions. They will go buy the EVGA/Gigabyte/Asus model that will be 20-25% faster and signatures be damned :)

I mean, seriously, I won't waste my time on this anymore.

Here. I fix.

How difficult was that?