Tuesday, February 28th 2017

NVIDIA Announces the GeForce GTX 1080 Ti Graphics Card at $699

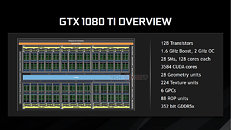

NVIDIA today unveiled the GeForce GTX 1080 Ti, its fastest consumer graphics card based on the "Pascal" GPU architecture. At USD $699, it is positioned to be more affordable than the flagship TITAN X Pascal, with market availability from the first week of March 2017. Based on the same "GP102" silicon as the TITAN X Pascal, the GTX 1080 Ti is slightly cut down. While it features the same 3,584 CUDA cores as the TITAN X Pascal, the memory amount is lower, at 11 GB, over a slightly narrower 352-bit wide GDDR5X memory interface. This translates to 11 memory chips on the card. On the bright side, NVIDIA is using newer memory chips than the ones it deployed on the TITAN X Pascal; they run at 11 Gbps (11 GHz GDDR5X-effective), so the memory bandwidth is 484 GB/s.
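The 484 GB/s figure follows from the bus width and the per-pin data rate quoted above. Here is a minimal sketch of that arithmetic in Python, assuming each GDDR5X chip sits on its own 32-bit channel; the function names are illustrative and not taken from any NVIDIA material.

```python
# Peak memory bandwidth from bus width and per-pin data rate.
# Assumption: every memory chip occupies one 32-bit slice of the bus.

def chip_count(bus_width_bits: int, bits_per_chip: int = 32) -> int:
    """Number of memory chips needed to populate the bus."""
    return bus_width_bits // bits_per_chip


def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Bus width (bits) x data rate (Gbit/s per pin) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


print(chip_count(352))                 # 11
print(peak_bandwidth_gb_s(352, 11.0))  # 484.0
```

With one 32-bit slice of the bus left unused, the same calculation explains both the 11-chip layout and the 352-bit width.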

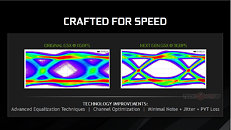

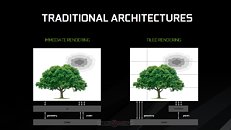

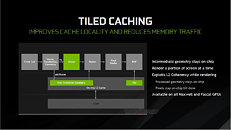

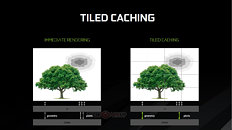

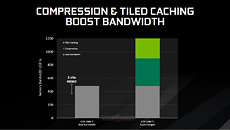

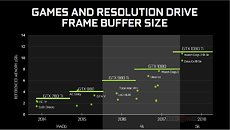

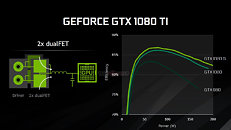

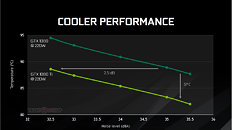

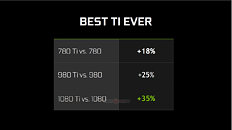

Besides the narrower 352-bit memory bus, the ROP count is lowered to 88 (from 96 on the TITAN X Pascal), while the TMU count is unchanged at 224. The GPU core is clocked at a boost frequency of up to 1.60 GHz, with the ability to overclock beyond the 2.00 GHz mark. It gets better: the GTX 1080 Ti features certain memory advancements not found on other "Pascal" based graphics cards, namely newer memory chips and an optimized memory interface running at 11 Gbps. NVIDIA's Tiled Rendering technology has also finally been announced publicly. A feature NVIDIA has kept from its consumers since the GeForce "Maxwell" architecture, it is one of the secret sauces that enable NVIDIA's lead.

The Tiled Rendering technology brings about huge improvements in memory bandwidth utilization by optimizing the render process to work in square chunks (tiles), instead of drawing the whole polygon at once. Thus, the geometry and textures of a processed object stay on-chip (in the L2 cache), which reduces cache misses and memory bandwidth requirements.

Together with its lossless memory compression tech, NVIDIA expects Tiled Rendering, and its storage tech, Tiled Caching, to more than double, or even close to triple, the effective memory bandwidth of the GTX 1080 Ti, over its physical bandwidth of 484 GB/s.

NVIDIA is making sure it doesn't run into the thermal and electrical issues of previous-generation reference-design high-end graphics cards by deploying a new 7-phase dual-FET VRM that reduces the load (and thereby the temperature) per MOSFET. The underlying cooling solution is also improved, with a new vapor-chamber plate and a denser aluminium channel matrix.

Watt-to-Watt, the GTX 1080 Ti will hence be up to 2.5 dBA quieter than the GTX 1080, or up to 5°C cooler. The card draws power from a combination of 8-pin and 6-pin PCIe power connectors, with the GPU's TDP rated at 220W.

The GeForce GTX 1080 Ti is designed to be anywhere between 20-45% faster than the GTX 1080 (35% on average).

The GeForce GTX 1080 Ti is widely expected to be faster than the TITAN X Pascal out of the box, despite its narrower memory bus and fewer ROPs. The higher boost clocks and 11 Gbps memory make up for the performance deficit. What's more, the GTX 1080 Ti will be available in custom-design boards at factory-overclocked speeds, so it will end up being the fastest consumer graphics option until there's competition.
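NVIDIA has not published the internals of its tiling scheme, so the following is only a generic sketch of screen-space tile binning, the general idea behind working in square chunks rather than whole polygons. The 16-pixel tile size, the conservative bounding-box test, and all function names are assumptions made for illustration.

```python
from collections import defaultdict

TILE = 16  # hypothetical tile edge length in pixels


def tiles_touched(tri, tile=TILE):
    """Return the (tx, ty) tiles overlapped by a triangle's bounding box.

    `tri` is a list of three (x, y) pixel coordinates, assumed non-negative.
    A bounding-box test is conservative: it may list tiles the triangle
    does not actually cover.
    """
    xs = [x for x, _ in tri]
    ys = [y for _, y in tri]
    x0, x1 = int(min(xs)) // tile, int(max(xs)) // tile
    y0, y1 = int(min(ys)) // tile, int(max(ys)) // tile
    return [(tx, ty) for ty in range(y0, y1 + 1) for tx in range(x0, x1 + 1)]


def bin_triangles(triangles, tile=TILE):
    """Map each tile to the indices of the triangles that may touch it."""
    bins = defaultdict(list)
    for index, tri in enumerate(triangles):
        for t in tiles_touched(tri, tile):
            bins[t].append(index)
    return bins


triangles = [
    [(0, 0), (20, 0), (0, 20)],     # spans four tiles
    [(40, 40), (44, 40), (40, 44)]  # fits inside a single tile
]
bins = bin_triangles(triangles)
for tile_xy in sorted(bins):
    print(tile_xy, bins[tile_xy])
```

Once triangles are grouped per tile, a renderer can finish all work for one tile while its data is still resident in a small on-chip buffer, which is where the bandwidth saving described above would come from.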


160 Comments on NVIDIA Announces the GeForce GTX 1080 Ti Graphics Card at $699

- No DVI port: a nice clean backpanel :)

- Founders Edition available next week :)

BTW: "Founders edition" sounds soooo Karl May to me... (maybe only Germans will understand... :-) or let's say "The last of the mohicans"

I don't have anything else to say, other than 'wow'... :shadedshu:

and the clock speed difference is even more than 400mhz. rx480 runs at 1266mhz boost and gtx1060 tends to stay above 1800mhz for all but excessive load.

furyx-s clocked to around 1.15-1.2ghz. your gtx980 at 1.4ghz is already pretty good, i think on average they went to 1.4-1.45ghz. i am surprised it'd match furyx at that clock though, fury should still beat it in most cases. fiji is simply much larger.

Why the joy at no DVI?

I still use the DVI connection in mine and never mind DP, mini or proper.

Knowing full well in advance how each and every one of you here is going to come and correct me, lol, i can tell you that for visual signal only?

If my DVI gives me a 100%, the DP connection gives me about 75-80%. A bit fuzzier, a bit less crisp, the whites not so intense as they were with DVI; like someone took the contrast down a notch or two. No, i didn't forget to set the monitor right (choose PC rather than 'TV'), no i didn't forget to set the frequency to 144; yes, said comparison with settings default, no calibrating; before or after.

DVI-d is a piss poor Chinaman cable that came bundled with my monitor (one in my specs). DP cable cost a fortune, we're talking big brand used in high-end audiovisual equipment. Ended up giving it away.

As said before, generally X-axis is the control parameter (the source, input) and Y-axis is the response parameter (the result, output). Changing parameter in X results in a change in Y parameter.

In this case the graph depicts COOLER PERFORMANCE at dissipating 220 Watt of heat. Here the performance of a cooler is characterized by how low the temperature is and how quiet the cooling system is.

For a given cooler design (there's two in the graph, 1080 cooler and 1080 Ti cooler) and constant heat load, the resultant junction temperature (Y-axis) is correlated to the noise generated by the cooling system (here it is assumed that noise is correlated to fan speed).

- By varying the noise (fan speed) the resultant temperature will vary too.

- Take sample at low noise (low fan speed) : with 32.5 dB noise, 1080 cooler results in ~95C, 1080 Ti cooler results in 88C.

- Take sample at high noise (high fan speed) : with 35.5 dB noise, 1080 cooler results in ~87C, 1080 Ti cooler results in 82C.

- Notice that as fan speed goes up (noise increases) the temperature lowers, intuitive isn't it?

- Notice at the same noise level, 1080 Ti cooler results in lower temperature than 1080 cooler, this is the point that the graph is trying to get across.
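The two sample points above can be turned into a quick same-noise and same-temperature comparison. This is only a rough sketch: the values are the approximate chart readings quoted in the list, and the straight line between the two samples is an assumption, since the real curves need not be linear.

```python
# (noise in dB, temperature in C) samples read off the chart; approximate.
GTX_1080 = [(32.5, 95.0), (35.5, 87.0)]
GTX_1080_TI = [(32.5, 88.0), (35.5, 82.0)]


def temp_at(samples, noise_db):
    """Linearly interpolate temperature at a given noise level."""
    (n0, t0), (n1, t1) = samples
    return t0 + (t1 - t0) * (noise_db - n0) / (n1 - n0)


def noise_at(samples, temp_c):
    """Linearly interpolate the noise level needed to hold a temperature."""
    (n0, t0), (n1, t1) = samples
    return n0 + (n1 - n0) * (temp_c - t0) / (t1 - t0)


# Same noise on the X axis: how much cooler does the 1080 Ti cooler run?
for noise in (32.5, 35.5):
    delta = temp_at(GTX_1080, noise) - temp_at(GTX_1080_TI, noise)
    print(f"{noise} dB: 1080 Ti cooler is {delta:.1f} C cooler")

# Same temperature on the Y axis: how loud must the 1080 cooler be
# to reach the 88 C that the 1080 Ti cooler holds at 32.5 dB?
print(f"1080 cooler needs {noise_at(GTX_1080, 88.0):.3f} dB for 88 C")
```

Reading the chart both ways (fixed noise, then fixed temperature) is what keeps the "quieter or cooler" claim from being misinterpreted.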

I sometimes wonder if this kind of error is the cause of some baffling decision from governments and companies.

You're move AMD.

You're move AMD.

Seriously, it's you're move AMD.

Anyway, looking forward to seeing custom AIB cards/reviews.

Your move Fluffmeister.

Price is surprisingly good, so how long will it take them to release a Titan X Black with full GP102 and maybe 12 Gbps GDDR5X?

It has 6 ROPs disabled, and we know the ROPs are still tied to the memory controllers, as on the GTX 970, where the 4 disabled ROPs meant the last 32-bit portion had to 'piggyback' on another ROP connected to a memory controller (3.5+0.5 GB), IIRC.

Now Nvidia has learned its lesson: to avoid another GTX 970 fiasco, they simply disabled the last 32-bit MC completely, along with 6 ROPs. Hence what you see now is 11 GB via a 352-bit bus. If they attempted to 'normalize' 12 GB (11+1) in advertising, it would revive the old stigma.

~~You're~~ Your apologies were accepted.

in general though, 1080ti is better than expected. with very few details, rumors were around more cut down chip, using gddr5 and higher price. none of that came to pass. performance close to titan xp is not bad at all.

DP is a digital signal. It either gets there or it doesn't. I have some of the cheapest DP cables I could find driving a 4K and a 2560x1440 monitor... DP is equal or better in every way, last I understood.

It matches. Math above you.

How about adding something like *at constant GPU load. If Nvidia were really smart they would've simply put the noise delta on the X axis, otherwise this is what people can interpret & they're not wrong either ~