Wednesday, July 19th 2017

Intel Quietly Reveals 12-core i9-7920X 2.9 GHz Base Core Clock

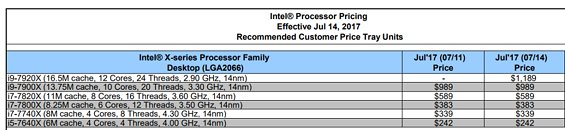

Intel has quietly revealed the base clock of its upcoming 12-core, 24-thread Core i9-7920X processor. For now, this model is the only 12-core processor in Intel's X299 HEDT line-up for the LGA 2066 socket. A report from Videocardz pegs the new chip's base clock at 2.9 GHz, a full 400 MHz slower than the company's 10-core, 20-thread i9-7900X. The L3 cache size was also listed, though it's the expected 16.5 MB (1.375 MB per core).
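The figures quoted above work out as follows. This takes the i9-7900X's base clock as 3.3 GHz, which is what the stated 400 MHz gap implies.

$$
3.3\ \text{GHz} - 2.9\ \text{GHz} = 0.4\ \text{GHz} = 400\ \text{MHz},
\qquad
\frac{16.5\ \text{MB}}{12\ \text{cores}} = 1.375\ \text{MB per core}
$$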

The listing also confirmed pricing of $1,189 in tray quantities (which means final consumer prices will be higher). On paper, this doesn't compare favorably with the competition's 12-core Threadripper offering: AMD will offer the same number of cores and threads for $799 (final consumer pricing at launch), with a much more impressive 3.5 GHz base clock. Consumers will decide whether a roughly $400 premium for going Intel over AMD is worth it for the same number of cores and threads. It remains to be seen, though, whether AMD's frequency advantage will translate into performance while keeping power consumption at acceptable levels (which, going by what we've seen from AMD's Ryzen, should in theory be the case).
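For reference, the gap between the two prices quoted above is slightly under $400. The per-core figures compare Intel's tray price with AMD's launch price, so they are not quite like-for-like:

$$
\$1{,}189 - \$799 = \$390,
\qquad
\frac{\$1{,}189}{12} \approx \$99.08\ \text{per core},
\qquad
\frac{\$799}{12} \approx \$66.58\ \text{per core}
$$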

Source:

Videocardz

49 Comments on Intel Quietly Reveals 12-core i9-7920X 2.9 GHz Base Core Clock

Now excuse me while I go pass out from the excitement of this announcement... and your earth-shattering comment about other people's comments.

In other news, Ryzen continues to give Intel's entire line a solid thumping. Intel is tripping and fumbling over themselves to play catch-up. Give it another four months and upgrading any PC is going to be a real joy and won't kill the wallet.

I really hope AMD fixes the CCX latency by keeping interdependent tasks on the same CCX unit. That way the dual/quad-CCX models will really shine in gaming performance. Otherwise, I'll just have to wait for 6-core LGA 1151 chips to drop to current 7700K pricing, because I really don't like the gaming performance hit for my workloads.

Shame

That said, the price has to come down... At some point...

Intel did this during Core 2

...at least for consumer

- A vector unit performs one type of operation on multiple data elements at once, while a normal ALU or FPU usually does one operation on a single value per clock. It has similarities with how GPUs work.

- A 256-bit vector unit can do one 256-bit operation, two 128-bit operations, four 64-bit operations, eight 32-bit operations, etc.

- AVX (Sandy Bridge+) and AVX2 (Haswell+) support operations up to 256 bits wide, while AVX-512 supports operations up to 512 bits wide.

- AVX supports normal operations like addition and multiplication, but also operations like shuffle, min/max and conditional masks.

- Performance: Since vector operations replace huge numbers of conventional instructions, the speedup can be massive. A 10-50× speedup is not unusual. This is especially true for operations such as conditional moves and min/max. Without vectors, these would require a huge number of instructions and several conditionals, creating branches and stalls. With vectors, you can reduce hundreds of clock cycles down to a few.

- Requirements:

  - Vector operations only work on what we call vectors, which are streams of data. So the data should be laid out contiguously and aligned in memory, and you have to do the same operation on each group of data. Many workloads can't be efficiently vectorized, or at least not without a major effort. Some examples:

    - Games: no

    - Compression: maybe, algorithm-dependent

    - Video encoding: maybe, algorithm-dependent

    - Web server: no

    - JavaScript in your web browser: no

    - Mathematical simulations: usually yes

  - There is also an overhead cost of moving the data into vector registers, so it's only worth it if you want to do multiple vector operations on the data.

- Development cost: Code has to be specifically designed to use vector operations*. In C/C++ we use special intrinsics instead of "normal" code. These are basically functions that map almost directly to assembly instructions. It's not something you can just enable for your existing code; you have to write the specific low-level operations. The memory alignment of the vector data has to be planned, so that part of the application has to be designed with this purpose in mind.

(* Automatic vectorization does exist, but it only applies in some edge cases not worth mentioning.)
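The points above about vector width, alignment and intrinsics can be made concrete with a minimal C sketch. It is not taken from the thread. It assumes GCC or Clang on x86, using `__attribute__((target("avx")))` and `__builtin_cpu_supports`, plus C11 `aligned_alloc`. The function names `alloc_floats`, `add_scalar` and `add_vec` are hypothetical. On the AVX path, one `_mm256_add_ps` adds eight 32-bit floats (256 / 32 = 8). On CPUs or compilers without AVX, the code falls back to the plain scalar loop.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#if defined(__x86_64__) || defined(__i386__)
#include <immintrin.h>
#define HAVE_X86 1
#else
#define HAVE_X86 0
#endif

/* Hypothetical helper: allocate n floats on a 32-byte boundary, the alignment
   that _mm256_load_ps/_mm256_store_ps expect. C11 aligned_alloc wants the
   size rounded up to a multiple of the alignment. */
float *alloc_floats(size_t n) {
    size_t bytes = (n * sizeof(float) + 31) / 32 * 32;
    return aligned_alloc(32, bytes == 0 ? 32 : bytes);
}

/* Scalar loop: one float addition per iteration. */
void add_scalar(const float *a, const float *b, float *out, size_t n) {
    for (size_t i = 0; i < n; i++)
        out[i] = a[i] + b[i];
}

#if HAVE_X86
/* AVX loop: each 256-bit add handles eight 32-bit floats.
   target("avx") enables AVX for this function only, without -mavx. */
__attribute__((target("avx")))
static void add_avx(const float *a, const float *b, float *out, size_t n) {
    /* Aligned loads/stores can fault on misaligned addresses. */
    assert((uintptr_t)a % 32 == 0 && (uintptr_t)b % 32 == 0 &&
           (uintptr_t)out % 32 == 0);
    size_t i = 0;
    for (; i + 8 <= n; i += 8) {
        __m256 va = _mm256_load_ps(a + i);
        __m256 vb = _mm256_load_ps(b + i);
        _mm256_store_ps(out + i, _mm256_add_ps(va, vb));
    }
    for (; i < n; i++) /* scalar tail for the last n % 8 elements */
        out[i] = a[i] + b[i];
}
#endif

/* Take the AVX path only if the running CPU reports AVX support. */
void add_vec(const float *a, const float *b, float *out, size_t n) {
#if HAVE_X86
    if (__builtin_cpu_supports("avx")) {
        add_avx(a, b, out, n);
        return;
    }
#endif
    add_scalar(a, b, out, n);
}
```

Call `add_vec(a, b, out, n)` with buffers from `alloc_floats(n)`. Buffers that are not 32-byte aligned would trip the assertion on the AVX path. The scalar tail loop handles any `n` that is not a multiple of 8.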
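The min/max and conditional-mask operations mentioned above are what let vector code avoid a branch per element. Here is a minimal C sketch, not from the thread. It assumes GCC or Clang on x86 (`__attribute__((target("avx")))`, `__builtin_cpu_supports`) and uses hypothetical function names. `clamp_vec` replaces two comparisons per element with `_mm256_max_ps` and `_mm256_min_ps`. `zero_below_vec` builds a per-lane mask with `_mm256_cmp_ps` and applies it with `_mm256_and_ps`. To keep the sketch simple, it uses unaligned loads and stores (`_mm256_loadu_ps` / `_mm256_storeu_ps`), which AVX permits.

```c
#include <assert.h>
#include <stddef.h>

#if defined(__x86_64__) || defined(__i386__)
#include <immintrin.h>
#define HAVE_X86 1
#else
#define HAVE_X86 0
#endif

/* Scalar reference: data-dependent branches for every element. */
void clamp_scalar(float *x, size_t n, float lo, float hi) {
    for (size_t i = 0; i < n; i++) {
        if (x[i] < lo)
            x[i] = lo;
        else if (x[i] > hi)
            x[i] = hi;
    }
}

/* Scalar reference: keep x[i] where x[i] >= t, otherwise write 0. */
void zero_below_scalar(float *x, size_t n, float t) {
    for (size_t i = 0; i < n; i++)
        if (!(x[i] >= t))
            x[i] = 0.0f;
}

#if HAVE_X86
/* Branchless clamp: one max and one min cover 8 floats.
   Assumes no NaN inputs (NaN handling differs from clamp_scalar). */
__attribute__((target("avx")))
static void clamp_avx(float *x, size_t n, float lo, float hi) {
    const __m256 vlo = _mm256_set1_ps(lo), vhi = _mm256_set1_ps(hi);
    size_t i = 0;
    for (; i + 8 <= n; i += 8) {
        __m256 v = _mm256_loadu_ps(x + i);
        _mm256_storeu_ps(x + i, _mm256_min_ps(_mm256_max_ps(v, vlo), vhi));
    }
    clamp_scalar(x + i, n - i, lo, hi); /* leftover n % 8 elements */
}

/* Conditional mask: the compare sets all bits in lanes where x >= t;
   the AND keeps those lanes and zeroes the rest, with no branches. */
__attribute__((target("avx")))
static void zero_below_avx(float *x, size_t n, float t) {
    const __m256 vt = _mm256_set1_ps(t);
    size_t i = 0;
    for (; i + 8 <= n; i += 8) {
        __m256 v = _mm256_loadu_ps(x + i);
        __m256 keep = _mm256_cmp_ps(v, vt, _CMP_GE_OQ);
        _mm256_storeu_ps(x + i, _mm256_and_ps(v, keep));
    }
    zero_below_scalar(x + i, n - i, t); /* leftover n % 8 elements */
}
#endif

/* Dispatchers: use AVX when the CPU supports it, else the scalar code. */
void clamp_vec(float *x, size_t n, float lo, float hi) {
    assert(lo <= hi);
#if HAVE_X86
    if (__builtin_cpu_supports("avx")) {
        clamp_avx(x, n, lo, hi);
        return;
    }
#endif
    clamp_scalar(x, n, lo, hi);
}

void zero_below_vec(float *x, size_t n, float t) {
#if HAVE_X86
    if (__builtin_cpu_supports("avx")) {
        zero_below_avx(x, n, t);
        return;
    }
#endif
    zero_below_scalar(x, n, t);
}
```

The scalar versions double as the tail handlers and as a reference to compare against. Both code paths should produce identical results for non-NaN input.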