Monday, December 11th 2017

NVIDIA's Latest Titan V GPU Benchmarked, Shows Impressive Performance

NVIDIA pulled a rabbit out of its proverbial hat late last week with the surprise announcement of the gaming-worthy, Volta-based Titan V graphics card. The Titan V is the latest in a flurry of Titan cards from NVIDIA, and while the soundness of NVIDIA's naming scheme can be called into question, the Titan V's performance really can't.

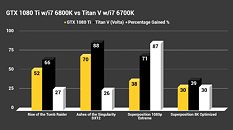

In the Unigine Superposition benchmark, the $3,000 Titan V managed to deliver 5,222 points in the 8K Optimized preset, and 9,431 points in the 1080p Extreme preset. Compare that to an extremely overclocked GTX 1080 Ti running at 2,581 MHz under liquid nitrogen, which hit 8,642 points in the 1080p Extreme preset, and the raw power of NVIDIA's Volta hardware is easy to see. The Titan V also delivers an average of 126 FPS in the Unigine Heaven benchmark at 1440p. Under gaming workloads, the Titan V is reported to achieve improvements of between 26% and 87% in raw performance, which isn't too shabby, now is it?

Poring through a Reddit discussion on the Titan V's prowess, the number of benchmarks already in the wild is overwhelming, but a clear picture is easy to get: the Titan V is the world's most powerful gaming card at the moment, delivering a better experience in every setting, game, and workload (be it VR gaming or rendering) than any other GPU.
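To put the Superposition comparison into a single number, here is a minimal sketch of the arithmetic, using only the two 1080p Extreme scores quoted above. The helper name `percent_uplift` is ours, purely for illustration.

```python
def percent_uplift(new_score: float, old_score: float) -> float:
    """Relative gain of new_score over old_score, in percent.

    Assumes a higher score is better, as with Superposition points.
    """
    return (new_score - old_score) / old_score * 100.0


# Scores quoted in the article (Unigine Superposition, 1080p Extreme preset).
titan_v_score = 9431         # stock Titan V
gtx_1080_ti_ln2_score = 8642  # GTX 1080 Ti at 2,581 MHz under liquid nitrogen

uplift = percent_uplift(titan_v_score, gtx_1080_ti_ln2_score)
print(f"Titan V lead over the LN2 GTX 1080 Ti: {uplift:.1f}%")
```

In other words, the Titan V sits roughly 9% ahead of a GTX 1080 Ti that needed liquid nitrogen to get its score.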

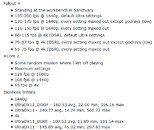

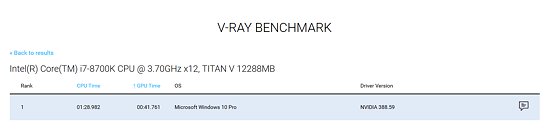

In Futuremark's VRMark "Blue Room" benchmark, for instance, the Titan V easily delivers a score of 4,400 points. That is 1,428 points above the benchmark's own baseline score for a premium high-end PC, and enough for an experience above 90 FPS, something a GTX 1080 Ti wouldn't be able to achieve under the same settings. On the Time Spy benchmark, the stock Titan V delivers 11,539 points, around 1,000 points more than the roughly 10,500 points a GTX 1080 Ti averages when paired with the same processor (there are higher 1080 Ti scores, yes; there are also lower ones).

The Titan V achieves an average of 65 FPS on max settings at 1440p; an average of 157 FPS in Gears of War 4 on Ultra settings at the same resolution; a 76 FPS average at 1440p on the Crazy preset of the Ashes of the Singularity benchmark; and a slew of other gaming results that you'd do better to pore through yourself, including Deus Ex: Mankind Divided, Fallout 4, XCOM 2, and others.

We also have to remember that the Titan V can be seen either as the most expensive gaming graphics card that NVIDIA has ever sold, or as the best price/performance Volta-based compute card. In general compute workloads the Titan V shines again, eking out victory after victory against NVIDIA's other gaming-capable offerings such as the GTX 1080 Ti. This is by no means extensive coverage, but the Titan V has been benchmarked at 41 seconds of GPU time in the V-Ray benchmark, against the 107 seconds that a GTX 1080 Ti managed (with an equivalent CPU score). On SPECviewperf 12.1, the Titan V delivers better performance than NVIDIA's professional Quadro P6000 (which goes for $5,000) across all workloads save one. Compute, not gaming, seems to be where this graphics card offers its best price-performance ratio; so if you're looking for the best possible compute performance with the best gaming experience on the side, the Titan V is the only solution.
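For readers who want the V-Ray and Time Spy gaps as ratios, the following is a rough sketch built only from the figures quoted above. The function names are illustrative, and the 10,500-point GTX 1080 Ti result is the approximate average cited in the text, not a measured value of ours.

```python
def time_speedup(baseline_seconds: float, new_seconds: float) -> float:
    """Speedup factor for a lower-is-better metric such as render time."""
    return baseline_seconds / new_seconds


def score_ratio(new_score: float, baseline_score: float) -> float:
    """Ratio for a higher-is-better metric such as benchmark points."""
    return new_score / baseline_score


# V-Ray benchmark, GPU time in seconds (lower is better).
vray_speedup = time_speedup(baseline_seconds=107, new_seconds=41)

# 3DMark Time Spy points (higher is better); 10,500 is the approximate
# GTX 1080 Ti average mentioned in the article.
timespy_ratio = score_ratio(new_score=11539, baseline_score=10500)

print(f"V-Ray: Titan V is {vray_speedup:.2f}x as fast as the GTX 1080 Ti")
print(f"Time Spy: Titan V scores {timespy_ratio:.2f}x the GTX 1080 Ti average")
```

The contrast between the two ratios lines up with the article's point: the lead in the rendering workload (about 2.6x) is far larger than the lead in the gaming-oriented Time Spy test (about 1.1x).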

Sources:

Reddit User @hellotanjent, Joker Productions YouTube, Reddit User @Nekrosmas

71 Comments on NVIDIA's Latest Titan V GPU Benchmarked, Shows Impressive Performance

More likely than not, the performance increase will be far lower and prices could be even higher due to lack of competition from AMD. Apple has shown that its loyal customers are willing to pay $1,000-1,200 USD for iPhone X. There is little reason why NV cannot price GTX2080 at $699-749 and move the GTX2080Ti to $899-999 given that Vega guzzles power and barely performs as fast as 1.5-year-old GTX1080. I expect the 2018-2019 generation to be the most expensive one as AMD's deficit on the high-end is too great to overcome, leaving NV with full power to raise prices again. We should probably expect GTX2070 ~ GTX1080Ti and GTX2080 to outperform the 1080Ti by 15-25% and the 2080Ti card to launch in 2019. This has pretty much been the way NV has operated since 2012 Kepler.

45% IMPROVEMENT FROM 1200$ TO 3000$ IMPRESSIVE.

THAT'S IMPRESSIVE DONKEY EARS FOR ANYONE WHO SAY THAT.

Are you blind, are you normal at all. 45% improvement or 50% or 55% after 18 months over graphic card worth 1200$ to charge 3000$?

I don't see nothing impressive there, only diagnose.

For this price I could buy new Kawasaki 2018 multipurpose or Off road.

And next year or for 5 years no one will launch 50% better model, are you aware of that.

One word- F A I L!

I expected NVIDIA to show up with some 16GB HBM model at least 60-70% stronger than the TITAN XP, to match TITAN XP SLI and replace it at the same price. As for this one, buyers should be jealous of the people who resist it, and should ask a doctor for help.

This is school example of Asian robbery like people earn 1K dollars for 5 days.

Even with so good job you need to work like horse 15 days to pay him and you have 10 days to earn for food and everything else.

Impressive win over 2 years old model with three time more expensive graphic card. Success, pure success.

Soon we will back to moaning why the GTX 1180 has poor FP64 performance.

This card is not expected to compete with any other gaming card out there, and destroys previous Titans when you include apps that take advantage of fp64 and deep learning AI.

This is the first card that they have released since Fermi that is actually meant for compute. So who exactly asked for this? Because the average customer sure didn't, they never gave a shit about any of this.

Literally no one will ever say that. FP64 isn't even in vogue for machine learning.

FP16 is where it's at. As you can see GV100 gets you more than 25 TFLOPS of that and those Tensor Cores run on mixed FP16 and FP32 as well.

Luckily the average consumer isn't forced to buy anything, I guess much to the despair of many again... but hey there is a pro market for a reason too. And some people just want GTX 1080 Ti performance 6 MONTHS earlier, and that's what Nv gave with the first Pascal Titan for example... blah blah blah.

You'll be surprised, but I just hate it when people say shit is "gimped" at compute and the like, it tickles my funny bone.

or many others video that shows how 980ti oc compare to 1070 oc

The new 1080ti review probably didn't have the 980ti retested and just used old values that don't include driver optimizations anyway. And the 980ti losing to Fury X is the furthest from the truth (it even beats Fury X in Wolfenstein 2).

Edit: a new video shows the Titan V can mine Ethereum at 77 MH/s @ 220 W, so this is like a do-all, best-at-all card atm, but only affordable to Tony Stark

forum.beyond3d.com/threads/nvidia-volta-speculation-thread.53930/page-46

Their claims are mostly based on two games - Doom and Sniper Elite 4:

- Doom and its engine (especially with Vulkan) is technically awesome and scales extremely well (provided that 200fps cap is not reached which does get problematic even at high resolutions with ultra-highend).

- Sniper Elite 4 is so far the only DX12 game that gets clear benefit from the lower level API across the board and it does not hurt that it also scales extremely well.

Now look at other tests they did:

- AOTS (DX12 and Async technical showcase) scales badly, even once they use something else beyond their usual High settings that are CPU limited. This, by the way, speaks directly against their DX12/Async claims.

- Hellblade scales well despite being an Unreal Engine game using DX11.

- Wildlands, For Honor, Destiny are likely limited by something else (Destiny highest settings graphs show that well enough).

From my own experience, even a 1080Ti gets CPU limited often enough at 1440p with highest settings. Titan V is around 40% faster, which roughly matches the additional horsepower UHD needs compared to 1440p. CPU limitations are real, and often enough the engine or the game itself proves to be the limit.

In some cases, Titan V actually is too fast for now.