Friday, April 6th 2018

In Aftermath of NVIDIA GPP, ASUS Creates AREZ Brand for Radeon Graphics Cards

Graphics card manufacturers are gradually starting to align their gaming brands with NVIDIA to gain admission into the exclusive GeForce Partner Program (GPP). Although there isn't any official confirmation from NVIDIA's AIB partners, small but significant changes are starting to become evident. The first example comes from Gigabyte's Aorus gaming line. Gigabyte currently offers the Gaming Box external graphics enclosure with a GeForce GTX 1070, GTX 1080, or a Radeon RX 580. If we look at the packaging closely, we can clearly see that the RX 580 box lacks the Aorus branding. Gigabyte isn't alone, though. MSI is apparently in favor of GPP too, having removed all of its Radeon Gaming X models from its global website. Take the Radeon RX 580, for instance: the models from the Armor lineup are the only ones present. Surprisingly, the US website still carries the Gaming X models.

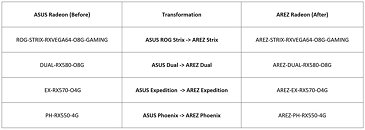

The latest rumor suggests that ASUS is the third AIB partner to jump on the GPP bandwagon. The Taiwanese manufacturer is allegedly creating the AREZ brand to accommodate their Radeon products. The AREZ moniker probably alludes to the Ares series of dual-GPU graphics cards historically centered around AMD GPUs. If this rumor is true, the Strix, Dual, Phoenix, and Expedition Radeon models are going to fall under the new AREZ branding. ASUS might even go as far as dropping their name from the AREZ models entirely.

Update 17/04/2018: ASUS has officially announced the 'AREZ' brand here.

Source: VideoCardz

137 Comments on In Aftermath of NVIDIA GPP, ASUS Creates AREZ Brand for Radeon Graphics Cards

1) Polaris CLEARLY was a midrange card from the start, and the XBOX One shows that it is possible to scale it up at least a bit more if they wanted to.

2) Vega on the other hand was blatantly meant for: Mobile, Compute, AI, and then Gaming (in that order). Small Vega GPUs are more efficient than Pascal by a decent margin, and Vega competes with Volta in compute even though it probably costs half as much to make.

Captain Tom is correct, in that Polaris had a small die size in mind from the start. If AMD wanted to make a larger Polaris they could have easily started with a larger die size and de-activated defective cores as needed for lower end SKUs.

The only thing that could change this is if AMD is able to use its Infinity Fabric with its GPUs. Only then would they be able to scale up their GPUs, and at that point Nvidia would truly be screwed because MCMs are cheaper, have better yields, and you can easily scale them up.

Overall AMD has always had their best products when they were smaller dies. It has been common knowledge forever that underclocked and undervolted AMD cards gain immensely in the Efficiency Department, and it keeps getting more obvious every successive generation.

A GPU using Infinity Fabric to make a 7nm 4x3096-sp mega card would be fantastic! Let's hope they pull it off before Nvidia launches the GTX 1280 for $1099 lol.

That is why Fury and Vega saw the light and why they needed HBM / more efficient memory. We know today that HBM does not extract greater performance for gaming and AMD missed the boat entirely with GDDR5X. Their timing was bad and it was bad because they were out of options on further expanding GCN. If AMD should have stepped back from the high end it was during the Fury release. That way they would have been able to make bank versus Pascal and they would have only missed the answer to the 980ti. Now, they miss out on almost two generations instead of one and not just on the 1080ti but also the 1080 which is a large chunk of the marketplace with very good margins. They lost to Maxwell because it provided the efficiency already, and Pascal was another leap forward that even Vega isn't the right answer for, even after its countless delays and adjusted promises. Polaris brought Maxwell efficiency a year too late, and it sold because it had nice all-round performance at a decent price, not because it was a superb step forward. You don't 'win' battles in the midrange, you just move lots of units. AMD could have also just re-used Hawaii for that segment; they were already rebranding and kicking down GPUs a full tier anyway to keep the product stack filled up.

So yes, today's reality is that we see most of the Vega GPUs land in Frontier and MI25, but it's really not because AMD wanted to all along, it's because they are forced to do so to at least make SOME money out of it.

Navi is just another example of the desperate search for more performance on GCN without breaking out of limitations and power/heat budgets. They can't push more out of a single die, so they use multiple of them. While in essence the idea is similar to Ryzen, the comparison doesn't really convince me, because soon AMD will have 3 radically different types of cards out there that they have to develop and support. Hardly efficient. They also go back to the drawing board in a big way while their competitor is continually scaling the same architecture and fine tuning it further, while it still has the MCM option to turn to after that.

We can always hope for the best, but AMD simply hasn't got the time to keep screwing up GPU anymore.

Ultimately though AMD's goal was to get scalability into its GPU sector... it's coming and when it does, that's when the real fight starts. Vega, despite being a compute card first, is very fast as a gaming card. I think Navi will be the start of a correct push for a gaming card.

Side note though, it still feels like Vega is being held back, from a driver and software implementation standpoint. Only time will tell though.

It's really funny (and worrisome at the same time) to watch people making stuff up and rewriting history rather than admit they're sometimes wrong.

For bonus points, here's how Vega competes with Pascal irl: www.phoronix.com/scan.php?page=article&item=12-opencl-98&num=1

Regardless, frequency means nothing with the small die size, as I pointed out earlier.

You must have missed the 15 W Vega laptop chips. Clearly Vega has excellent performance per watt when it's in its sweet spot. The same thing was found out when people were undervolting their Vega 64s. People were able to cut 1/3 off the power consumption at the same clocks, putting it at around the same perf/watt as Pascal.

Nvidia scaling their architecture is great but there is a limit to how big you can make a chip or how high you can get the frequency, as evidenced by Intel having issues getting over the 5GHz bump for some time now. Nvidia is very close to the reticle limit and once you hit that you literally cannot make the chip bigger.

You had better hope AMD keeps competing or else we all will be enjoying our $700 GTX 1160s.

That's what everyone is assuming, although there is no direct confirmation of that. I don't see any reason why those assumptions would be wrong either. GPU die sizes are much bigger than CPUs and thus have a much larger benefit from MCM tech like Infinity Fabric since yields decrease exponentially as die size increases. If AMD does release an MCM GPU, it will have very good yields, will be completely scalable, and it will be cheaper. After all, both AMD and Nvidia still have to pay for defective dies on a wafer even if they aren't usable.

Vega's DX11 performance is pretty good, especially when the devs take advantage of primitive shaders. The problem isn't the architecture in this case, it's the geometry pipeline. Nvidia cards are simply able to render more polygons, that is if the game doesn't use primitive shaders.

Once again, completely wrong. Vega launched first on Desktop, first targeting professionals with the Frontier Edition and then the gaming version. Mobile came last. It's ironic that in the same paragraph where you complain about people making stuff up, you are doing just that.

0/10 troll

Heck, Nvidia has a good enough online store that it can sell cards completely direct. The AIBs know this.

Now I'm not saying Nvidia would get rid of AIBs altogether but it will most certainly squeeze every last bit it can get out of these guys, whether that be through more Nvidia only cards or agreements to never take a dime of AMD marketing money.

If I've learned anything from the last 10 years of massive white collar crime, it's that companies will keep going until the law stops them.

This will affect my next purchase.

Not to say I doubt there was pressure on those who opted to go along with it, but it doesn't change the fact I don't like where this path goes.

That said, I wonder if this program also extends to ASUS laptops as well. Does this mean only ASUS laptops with Nvidia hardware will get the ROG branding? I guess we'll find out.

That's what Navi should be for. If not, we're SOL.

There's a reason Nvidia also stated that MCM is the future and why they are also currently researching it. It's going to allow the continuation of Moore's law for a little while longer.

- Nvidia's way of scaling their architecture is indeed great, and what you are saying about how big a chip can be is exactly the problem GCN met during Hawaii, and it's the problem that Vega didn't manage to solve either. Meanwhile, there is a 35% performance gap at similar die sizes, so now AMD has to resort to multiple dies, while Nvidia can postpone that for another full generation if they want to.

Or, perhaps we might even see a much bigger die area like what AMD did with Threadripper. Especially with HBM, the board has lots of space anyway. So there are tons of options, but ONLY if you have a highly efficient architecture that doesn't surpass TDP budgets for each segment. People simply will not accept a 400W GPU in this day and age, just as they won't accept hot and loud ones, let alone move all that hot air out of the ever smaller form factors on the marketplace. So efficiency is king for every single use case.

- You compare frequency limitations, but back when Maxwell was released, did you for one second consider the next gen would pass the 2 GHz barrier for Nvidia? I for sure as hell didn't.

- I am not that worried about competition in the GPU space. I will say this, as I have said often: I think RTG would be much better served in the hands of a different company that can truly focus on its GPU effort instead of the happy marriage that is APU / custom chip design, because let's face it, for a true gaming GPU, those are all the wrong priorities and it shows. AMD has not been doing anyone a service for the past few years and there is nothing on the horizon that is ready to dethrone Nvidia. It's down to AMD/RTG's priorities and management that we now have a high end abandoned for nearly two years...