Monday, September 17th 2018

NVIDIA RTX 2080 / 2080 Ti Results Appear For Final Fantasy XV

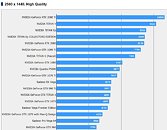

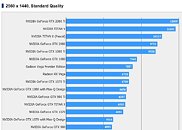

The online results database for the Final Fantasy XV Benchmark has been partially updated to include NVIDIA's RTX 2080 and 2080 Ti. Scores for both standard and high quality settings at 2560x1440 and 3840x2160 are available, while data for 1920x1080 and the lite quality tests is not.

The RTX 2080 Ti results show it beating the GTX 1080 Ti by 26% and 28% in the standard and high quality tests, respectively, at 2560x1440. Increasing the resolution to 3840x2160 again shows the RTX 2080 Ti ahead, this time by 20% and 31%, respectively. The RTX 2080 offers a similar improvement over the GTX 1080 at 2560x1440, where it is 28% and 33% faster in the same standard and high quality tests. At 3840x2160, it is 33% and 36% faster than the GTX 1080. Overall, both graphics cards are shaping up to be around 30% faster than the previous generation without any special features. With Final Fantasy XV getting DLSS support in the near future, the RTX series' lead over the previous generation is likely to grow further.
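As a quick sanity check on the "around 30%" figure, the eight percentages quoted above can be averaged directly. This is only a sketch: it assumes a plain arithmetic mean of the quoted gains is a fair summary, which the article does not state, and the dictionary keys and the `mean` helper are illustrative names.

```python
# Percentage gains quoted in the article (newer card vs. its predecessor).
# Order: standard and high quality at 2560x1440, then standard and high
# quality at 3840x2160.
gains = {
    "RTX 2080 Ti vs GTX 1080 Ti": [26, 28, 20, 31],
    "RTX 2080 vs GTX 1080": [28, 33, 33, 36],
}


def mean(values):
    """Plain arithmetic mean (an assumed way to summarize the gains)."""
    return sum(values) / len(values)


# Average gain for each card pairing, and across all eight results.
per_card = {name: mean(vals) for name, vals in gains.items()}
overall = mean([v for vals in gains.values() for v in vals])

for name, avg in per_card.items():
    print(f"{name}: {avg:.2f}% average gain")
print(f"Overall: {overall:.2f}% average gain")
```

Under that assumption the RTX 2080 Ti averages a little over 26%, the RTX 2080 averages 32.5%, and the combined figure lands just under 30%.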

Source: Final Fantasy XV

39 Comments on NVIDIA RTX 2080 / 2080 Ti Results Appear For Final Fantasy XV

uk.hardware.info/reviews/8113/crossfire-a-sli-anno-2018-is-it-still-worth-it

Less and less game support, DX12 doesn't help, and the performance gain is poor.

You basically get 5-15% better total FPS by doubling the GPU (1080 Ti x2) in most games, except for a few outliers. 5-15% more FPS for 100% more money? I don't think it's worth it. Plus, I quote them:

"Furthermore, micro-stuttering remains an unresolved problem. It varies from game to game, but in some cases 60 fps with CrossFire or SLI feels a lot less smooth than the same frame rate on a single GPU. What's more, you're more likely to encounter artefacts and small visual bugs when you're using multiple GPUs as sometimes the distribution of the graphic workload doesn't seem to be entirely flawless."

So you get 5-15%, but with a framerate that doesn't feel smooth. Better to just spend the extra cash on the best single GPU in the lineup to get the best performance.

Hope Intel develops its GPU faster and AMD throws something on the market... so previous-gen card prices go lower. Then I'll upgrade, but not sooner.

Even now the cheapest used 1080 I can find is ~$400 and an RX 580 is ~$300 (those used in mining rigs are slightly cheaper, but maybe over-killed...)

So, a much higher price for much worse value for money cannot become a market success if customers are rational people, eh?

For a chip to be 25% faster than another on average, it must be way more than 25% faster when fully loaded, simply because it cannot be any faster while neither is fully loaded. I'm just stating the obvious here. If you were truly interested in Turing (as opposed to the "I know without reading" attitude), you would have read Anand's in-depth analysis.

And if the above seems overly complicated to you, think about cars: can you shorten the travel time between town A and B by 25% by using a car that's only 25% faster than the reference?
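The arithmetic behind the car analogy can be sketched in a few lines. This assumes a fixed distance covered at constant speed, so travel time is simply distance divided by speed; the function names are made up for the illustration.

```python
def time_saved_fraction(speedup):
    """Fraction of travel time saved when speed rises by `speedup`.

    `speedup` is a fraction: 0.25 means the car is 25% faster.
    Assumes a fixed distance at constant speed, so time scales as 1 / speed.
    """
    return 1 - 1 / (1 + speedup)


def speedup_needed(time_cut):
    """Speed increase needed to cut travel time by `time_cut` (0.25 = 25%)."""
    return 1 / (1 - time_cut) - 1


# A car that is 25% faster, and the speed needed for a 25% shorter trip.
print(f"25% faster car saves {time_saved_fraction(0.25):.0%} of the time")
print(f"25% shorter trip needs a car {speedup_needed(0.25):.0%} faster")
```

Under these assumptions a car that is 25% faster shortens the trip by only 20%, and cutting the trip by 25% takes a car roughly 33% faster.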