Thursday, September 27th 2018

NVIDIA Fixes RTX 2080 Ti & RTX 2080 Power Consumption. Tested. Better, But not Good Enough

While conducting our first reviews of NVIDIA's new GeForce RTX 2080 and RTX 2080 Ti, we noticed surprisingly high non-gaming power consumption from NVIDIA's latest flagship cards. Back then, we reached out to NVIDIA, who confirmed that this is a known issue that will be fixed in an upcoming driver.

Today the company released version 411.70 of their GeForce graphics driver, which, besides adding GameReady support for new titles, includes the promised fix for RTX 2080 & RTX 2080 Ti.

We gave this new version a quick spin, using our standard graphics card power consumption testing methodology, to check how things have been improved.

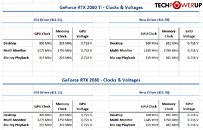

As you can see, single-monitor idle power consumption is much better now, bringing it to acceptable levels. Even though the numbers are not as low as Pascal's, the improvement is great, reaching idle values similar to AMD's Vega. Blu-ray power is improved a little bit. Multi-monitor power consumption, which was really terrible, hasn't seen any improvement at all. This could turn into a deal breaker for many semi-professional users looking at using Turing not just for gaming, but also for productivity with multiple monitors. An extra power draw of more than 40 W over Pascal will quickly add up to real dollars for PCs that run all day, even though they're not used for gaming most of the time.

We also tested gaming power consumption and Furmark, for completeness. Nothing to report here. It's still the most power efficient architecture on the planet (for gaming power).

The table above shows monitored clocks and voltages for the non-gaming power states, and it looks like NVIDIA did several things. First, the memory frequency in single-monitor idle and Blu-ray has been reduced by 50%, which definitely helps with power. Second, for the RTX 2080, the idle voltages have also been lowered slightly, to bring them in line with the idle voltages of the RTX 2080 Ti. I'm sure there are additional under-the-hood improvements to power management, internal ones, that are not visible to any monitoring.

Let's just hope that multi-monitor idle power gets addressed soon, too.
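To put the "more than 40 W over Pascal" multi-monitor figure into money terms, here is a rough back-of-the-envelope sketch. The 40 W delta comes from the text above; the electricity price and the daily usage hours are purely illustrative assumptions, not measurements, so plug in your own values.

```python
def annual_cost(extra_watts, hours_per_day, price_per_kwh):
    """Yearly cost of a constant extra power draw.

    extra_watts   -- additional draw in watts (40 W taken from the article)
    hours_per_day -- hours the PC sits in that power state (assumption)
    price_per_kwh -- electricity price per kWh (assumption)
    """
    kwh_per_year = extra_watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    EXTRA_WATTS = 40        # multi-monitor delta vs. Pascal, per the article
    PRICE_PER_KWH = 0.12    # hypothetical price, adjust for your region

    # Hypothetical usage patterns: office hours vs. always-on machine
    for hours in (8, 24):
        cost = annual_cost(EXTRA_WATTS, hours, PRICE_PER_KWH)
        print(f"{hours:2d} h/day: {cost:.2f} per year")
```

Under these assumed inputs the result scales linearly, so doubling either the price or the hours doubles the yearly figure.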

53 Comments on NVIDIA Fixes RTX 2080 Ti & RTX 2080 Power Consumption. Tested. Better, But not Good Enough

Although you're not taking into account the increased performance.

I'd be interested in how it reacts, or whether there is any impact. Does power usage go up, or does it clock down?

2080 is 45% faster than V64, 78/54=1.444x

An extra 30 W is almost nothing in the long term. People who shell out at the very least $500 for a GPU and also for an extra monitor couldn't care less about this extra wattage.

980 TI: 28nm

1080 TI: 16nm = 0.57x shrink

2080 TI: 12nm = 0.75x shrink

Also that all caps herp derp is a *fantastic* argument

Just another good reason to stay on Pascal instead of these overpriced RTX cards. I will stick to my GTX 1080 Ti for sure.

From the charts above, multi-monitor:

Vega - 17W

2080Ti - 58W

But mentioning Vega "in certain context" did its job.

So, yea, nearly like Vega, if you meant 3 times Vega, or almost 4 times Vega.

PS

And I don't believe the spin to be accidental, sorry.

The problem with AMD's power consumption was that Vega 64, for instance, drew WAY more power (and ran way hotter) than a 1080, hell, more than a 1080 Ti, and only performed at 1080 levels some of the time.

That is not an issue here, as the 2080 Ti is drawing less power than a Vega 64 while curb-stomping it performance-wise. Now, if AMD came out with a magic GPU that performed at 2080 Ti level but only pulled 2080 power, then NVIDIA would be criticized for being so inefficient by comparison.

Yes, cost isn't the issue; lower OC headroom and extra heat are the problems.

BTW... you guys realize Perf/Watt is still up 18%, right?

To me.

Is it clear to IceShroom? A rhetorical question.

Vega 64's temperature limit is 85C, GTX 1080's temperature limit is 83C. Is 2C really so much higher that it's causing that much of a problem?

Vega 64 Temperatures of different power/performance presets:

(From here)

GTX 1080 Founders Edition temperatures, stock and overclocked:

(From here)