Thursday, February 14th 2019

AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

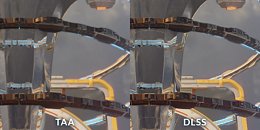

A report via PCGamesN describes AMD's stance on NVIDIA's DLSS as a rather decided one: the company stands for further development of SMAA (Enhanced Subpixel Morphological Antialiasing) and TAA (Temporal Antialiasing) solutions on current, open frameworks, which, according to AMD's director of marketing, Sasa Marinkovic, "(...) are going to be widely implemented in today's games, and that run exceptionally well on Radeon VII", instead of investing in yet another proprietary solution. While AMD pointed out that DLSS's market penetration is low, that's not the main point of contention. Instead, AMD goes head-on against NVIDIA's own technical presentations, comparing DLSS's image quality and performance benefits against a native-resolution, TAA-enhanced image: AMD says that SMAA and TAA can work equally well without "the image artefacts caused by the upscaling and harsh sharpening of DLSS."
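TAA, one of the two techniques AMD backs here, works by accumulating samples over time: each frame the projection is shifted by a sub-pixel jitter, and the new sample is blended into a history buffer. The Python sketch below illustrates only that accumulation step, under stated assumptions: a static 1D scene with one hard edge, a hypothetical 8-step jitter sequence, and a blend factor of 0.1. Production TAA also reprojects the history with motion vectors and clamps it against neighboring pixels to limit ghosting; neither is modelled, and this is not AMD's or any engine's implementation.

```python
# Minimal sketch of TAA-style temporal accumulation: an exponential moving
# average (EMA) of one jittered sample per frame. Assumptions: a static 1D
# scene (so no motion-vector reprojection or history clamping is modelled),
# a hypothetical 8-step jitter sequence and a blend factor of 0.1.

EDGE = 0.25  # hypothetical hard edge inside the pixel that spans [0, 1)

# Eight sub-pixel offsets in [-0.5, 0.5), visited in bit-reversed order
JITTER_ORDER = [0, 4, 2, 6, 1, 5, 3, 7]
JITTERS = [(k + 0.5) / 8 - 0.5 for k in JITTER_ORDER]


def scene(x: float) -> float:
    """Shaded value at position x: 1.0 left of the edge, 0.0 right of it."""
    return 1.0 if x < EDGE else 0.0


def no_aa(pixel_center: float = 0.5) -> float:
    """One sample at the pixel center every frame: the pixel is all-or-nothing."""
    return scene(pixel_center)


def taa(pixel_center: float = 0.5, frames: int = 400, alpha: float = 0.1) -> float:
    """Blend each frame's jittered sample into the accumulated history."""
    history = scene(pixel_center + JITTERS[0])  # first frame seeds the history
    for frame in range(1, frames):
        current = scene(pixel_center + JITTERS[frame % len(JITTERS)])
        history = (1.0 - alpha) * history + alpha * current
    return history


print(f"no AA: {no_aa():.3f}, TAA: {taa():.3f}")
```

With these settings the single centered sample reports 0.0 (the pixel is either fully on or fully off), while the TAA history settles into a small oscillation between roughly 0.21 and 0.29 around the true 25% coverage. A lower alpha shrinks that oscillation but makes the history slower to react, which is one of the trade-offs TAA has to tune.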

Of course, AMD may only be speaking from the point of view of a competitor that has no competing solution. However, company representatives said that they could, in theory, develop something along the lines of DLSS via a GPGPU framework, a task for which AMD's architectures are usually extremely well-suited. AMD does seem to take its eyes off its DLSS-defusing moves, though, as Nish Neelalojanan, an exec in AMD's Gaming division, talks about potential DLSS-like implementations across "Some of the other broader available frameworks, like WindowsML and DirectML", and says that these are "something we [AMD] are actively looking at optimizing… At some of the previous shows we've shown some of the upscaling, some of the filters available with WindowsML, running really well with some of our Radeon cards." So whether AMD's position reflects an actual image-quality philosophy or just a competing technology's TTM (time to market), only AMD knows.

Source: PCGamesN

170 Comments on AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

- DXR is not limited to reflections, shadows and AO. Nvidia simply provides more-or-less complete solutions for these three. You can do anything else RT you want with DXR. While it is not DX12 (and not DXR), the ray-traced Q2 on Vulkan clearly shows that full-on raytracing can be done somewhat easily.

- While RTX/DXR are branded as raytracing, they also accelerate pathtracing.

- OctaneRender is awesome but does not perform miracles. Where it is implemented, Turing does speed up non-realtime path-/raytracing software by at least 2x, often 3-5x, compared to Pascal. And these are early implementations.

Nvidia as well as various developers have said RT can start after the G-buffer is generated. Optimizing that start point was one of the bigger boosts in the BFV patch.
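Starting RT after the G-buffer is generated is what hybrid rendering does: a raster pass writes per-pixel surface data (position, normal), and rays are then launched from those surfaces instead of from the camera. A minimal Python sketch of the idea, assuming a hypothetical scene (a ground plane, one sphere occluder and a directional light shining straight down), a G-buffer that for brevity records only the ground plane, and shadow rays as the only ray type. It does not describe how BFV or DXR actually schedule this work.

```python
import math

# Hypothetical scene: ground plane y = 0, one sphere occluder above it,
# and a directional light shining straight down.
SPHERE_CENTER = (0.0, 1.0, 0.0)
SPHERE_RADIUS = 0.5
TO_LIGHT = (0.0, 1.0, 0.0)  # unit vector from the surface toward the light


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def build_gbuffer(width, height, extent=2.0):
    """Stand-in for the raster pass: per-pixel world position and normal.

    For brevity only the ground plane is recorded, with pixel centers mapped
    to x, z in [-extent, extent].
    """
    gbuffer = []
    for j in range(height):
        row = []
        for i in range(width):
            x = ((i + 0.5) / width * 2.0 - 1.0) * extent
            z = ((j + 0.5) / height * 2.0 - 1.0) * extent
            row.append({"position": (x, 0.0, z), "normal": (0.0, 1.0, 0.0)})
        gbuffer.append(row)
    return gbuffer


def hits_sphere(origin, direction, center, radius, eps=1e-4):
    """True if a ray with unit-length direction hits the sphere ahead of its origin."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(direction, oc)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return False  # ray misses the sphere entirely
    return -b + math.sqrt(disc) > eps  # far root in front means a hit ahead


def ray_pass(gbuffer):
    """Launch one shadow ray per pixel from the G-buffer surface, not the camera."""
    image = []
    for row in gbuffer:
        out = []
        for texel in row:
            n = texel["normal"]
            # Nudge the origin off the surface (the usual guard against
            # self-intersection; harmless here since only the sphere is tested)
            origin = tuple(p + 1e-3 * k for p, k in zip(texel["position"], n))
            lit = not hits_sphere(origin, TO_LIGHT, SPHERE_CENTER, SPHERE_RADIUS)
            out.append(max(0.0, dot(n, TO_LIGHT)) if lit else 0.0)
        image.append(out)
    return image


image = ray_pass(build_gbuffer(8, 8))
```

On the 8x8 G-buffer used here, only the four pixels directly under the sphere come out shadowed; the ray pass never needs to know how the G-buffer was produced, which is why optimizing where tracing begins can pay off.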

Rendering is a pipelined process; you have the various stages of geometry processing (vertex shaders, geometry shaders and tessellation shaders), then fragment ("pixel shaders" in Direct3D terms) processing and finally post-processing. In rasterized rendering, the rasterization itself is technically only the transition between geometry and fragment shading; it converts vertices from 3D space to 2D space and performs depth sorting and culling before the fragment shader starts putting in textures etc.

In fully raytraced rendering, all the geometry processing will still be the same, but the rasterization step between geometry and fragments is gone, and the fragment processing will have to be rewritten to interface with the RT cores. All the existing hardware is still needed, except for the tiny part that does the rasterization. So all the "cores"/shader processors, TMUs etc. are still used during raytracing.

So in conclusion, he is 100% wrong to claim that a "true" raytraced GPU wouldn't be able to do rasterization. He thinks the RT cores do the entire rendering, which of course is completely wrong. The next generations of GPUs will continue to increase the number of "shader processors"/cores, as they are still very much needed for both rasterization and raytracing; they are not a legacy thing like he claims.
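The argument above is that only the visibility step changes between rasterization and ray tracing, while the shading that follows stays the same. A toy CPU sketch in Python can make that concrete, under stated assumptions: one hypothetical triangle, an orthographic camera and one sample per pixel center. Visibility is found either with 2D edge functions (a common rasterization test) or with the Möller-Trumbore ray/triangle intersection, and both paths feed the same `shade()` function. It says nothing about how GPUs or RT cores are built internally.

```python
# Toy comparison: the same shade() step fed by two visibility methods.
# Assumptions: one hypothetical triangle at z = -5, an orthographic camera
# looking down -z, and one sample at each pixel center of an 8x8 image.
import math

TRIANGLE = ((0.2, 0.3, -5.0), (7.3, 1.1, -5.0), (2.1, 6.9, -5.0))  # counter-clockwise
WIDTH = HEIGHT = 8
LIGHT = (0.0, 0.0, 1.0)  # direction toward a hypothetical light


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def shade(normal):
    """Shared 'fragment' stage: a Lambert term, identical for both paths."""
    return max(0.0, dot(normal, LIGHT))


def edge(a, b, p):
    """2D edge function: >= 0 when p is on or left of the edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def ray_triangle(orig, d, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore ray/triangle test; returns hit distance t or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    pvec = cross(d, e2)
    det = dot(e1, pvec)
    if abs(det) < eps:
        return None  # ray parallel to the triangle
    inv_det = 1.0 / det
    tvec = sub(orig, v0)
    u = dot(tvec, pvec) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, e1)
    v = dot(d, qvec) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, qvec) * inv_det
    return t if t > eps else None


def render(visible):
    """Run the shared shading step wherever the visibility test reports a hit."""
    v0, v1, v2 = TRIANGLE
    n = normalize(cross(sub(v1, v0), sub(v2, v0)))
    return [[shade(n) if visible(x + 0.5, y + 0.5) else 0.0
             for x in range(WIDTH)] for y in range(HEIGHT)]


def raster_visible(px, py):
    """Rasterization-style coverage: pixel center inside all three edges."""
    v0, v1, v2 = TRIANGLE
    p = (px, py)
    return edge(v1, v2, p) >= 0 and edge(v2, v0, p) >= 0 and edge(v0, v1, p) >= 0


def ray_visible(px, py):
    """Ray-tracing-style coverage: cast a ray from the pixel center along -z."""
    v0, v1, v2 = TRIANGLE
    return ray_triangle((px, py, 0.0), (0.0, 0.0, -1.0), v0, v1, v2) is not None


raster_image = render(raster_visible)
ray_image = render(ray_visible)
```

In this setup the two images come out identical; only `raster_visible` and `ray_visible` differ, while `render` and `shade` are reused unchanged, which mirrors the point that shader hardware stays busy either way.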

"True" raytraced GPU? You mean one full of RT cores only?

Think of it more like this: the RT cores are accelerating one specific task, kind of like AVX extensions to x86. Code using AVX will not be AVX only, and while AVX will be doing a lot of the "heavy lifting", the majority of the code will still be "normal" x86 code.

Wasn't this slide already posted in one of the threads?

Sounds logical.

It doesn't look great. I really can't see the improvement like you guys say.

Variable refresh rate monitors: the VESA Adaptive-Sync standard, which is part of DisplayPort 1.2a, was always a working open standard. It was just that Nvidia wanted to use/push their proprietary (added-hardware) implementation to jump out in front before VESA (an open coalition of monitor and GPU representatives) ultimately finalized and agreed upon a standard that requires no huge additional hardware/cost, but just enables features already developed in DisplayPort 1.2a.

AMD and the industry had been working to commence the "open standard"; it was just that Nvidia saw it not progressing to their liking, and threw their weight behind licensing an "add-in" proprietary work-around to monitor manufacturers earlier than the VESA coalition was considering the roll-out. Now that those early monitor manufacturers are seeing G-Sync monitor sales that are not as lucrative (as they once had been with the early-adopter community), Nvidia sees itself on the losing end and has decided to slip back into the fold; unfortunately, there are no repercussions for the lack of support they delivered to the VESA coalition.

Much like the Donald on the "birther" crap: it was "ok" to do it and spout the lies, until one day it could no longer pass muster and wasn't expedient to his campaign, and he declared he would not talk about it again! I think that's how Jen-Hsun Huang hopes G-Sync will just pass...

G-Sync was announced in October 2013. G-Sync monitors started to sell early 2014.

Freesync was announced in January 2014. VESA Adaptive Sync was added to DP 1.2a in mid-2014. Freesync monitors started to sell early 2015.

Just like how PhysX & HairWorks are still being used today, and how everybody has a G-Sync monitor

Every Nvidia innovation is guaranteed to be a success

Now NV is joining the club with FreeSync (this "compatible" stuff is so damn funny) since they have seen they are going nowhere with the price. :)